SERIES DRAFT: The Bad Space, heated discussions, and golden opportunities for the fediverse – and whatever comes next --

DRAFT! WORK IN PROGRESS!

PLEASE DO NOT SHARE WITHOUT PERMISSION!!!!!

Feedback welcome – via email to jon@achangeiscoming.net, or to @jdp23@blahaj.zone

I'm serializing this as multiple posts, starting with the "unsafe by design and unsafe by default" section. There are updates in the published posts that I'll reintegrate back into here. I also still need to do footnote cleanup (the bane of my existence) in the unpublished sections.

Contents

Part 1 – published as Mastodon and today’s fediverse are unsafe by design and unsafe by default – and instance blocking is a blunt but powerful safety tool

- Today's fediverse is unsafe by design and unsafe by default

- Instance-level federation choices are a blunt but powerful safety tool

- Instance-level federation decisions reflect norms, policies, and interpretations

Part 2 – published as Blocklists in the fediverse

- Enter blocklists

- Widely shared blocklists can lead to significant harm

- Blocklists potentially centralize power -- although can also counter other power-centralizing tendencies

- Today's fediverse relies on instance blocking and blocklists

- Steps towards better instance blocking and blocklists

Part 3 – published as It’s possible to talk about The Bad Space without being racist or anti-trans – but it’s not as easy as it sounds

- It’s possible to talk about The Bad Space without being racist or anti-trans – but it’s not as easy as it sounds

- The Bad Space and FSEP

- A bug leads to messy discussions, some of which are productive

- Nobody's perfect in situations like this

- These discussions aren't occurring in a vacuum

- Also: Black trans, queer, and non-binary people exist

Part 4

- Racialized disinformation and misinformation: a fediverse case study

Part 4 – published as Compare and contrast: Fediseer, FIRES, and The Bad Space

Part 5 – published as Steps towards a safer fediverse

- It's about people, not just the software and the protocol

- It's also about the software

- And it's about the protocol, too

- Threat modeling and privacy by design can play a big role here

- Design from the margins – and fund it!

Part 1

Intro

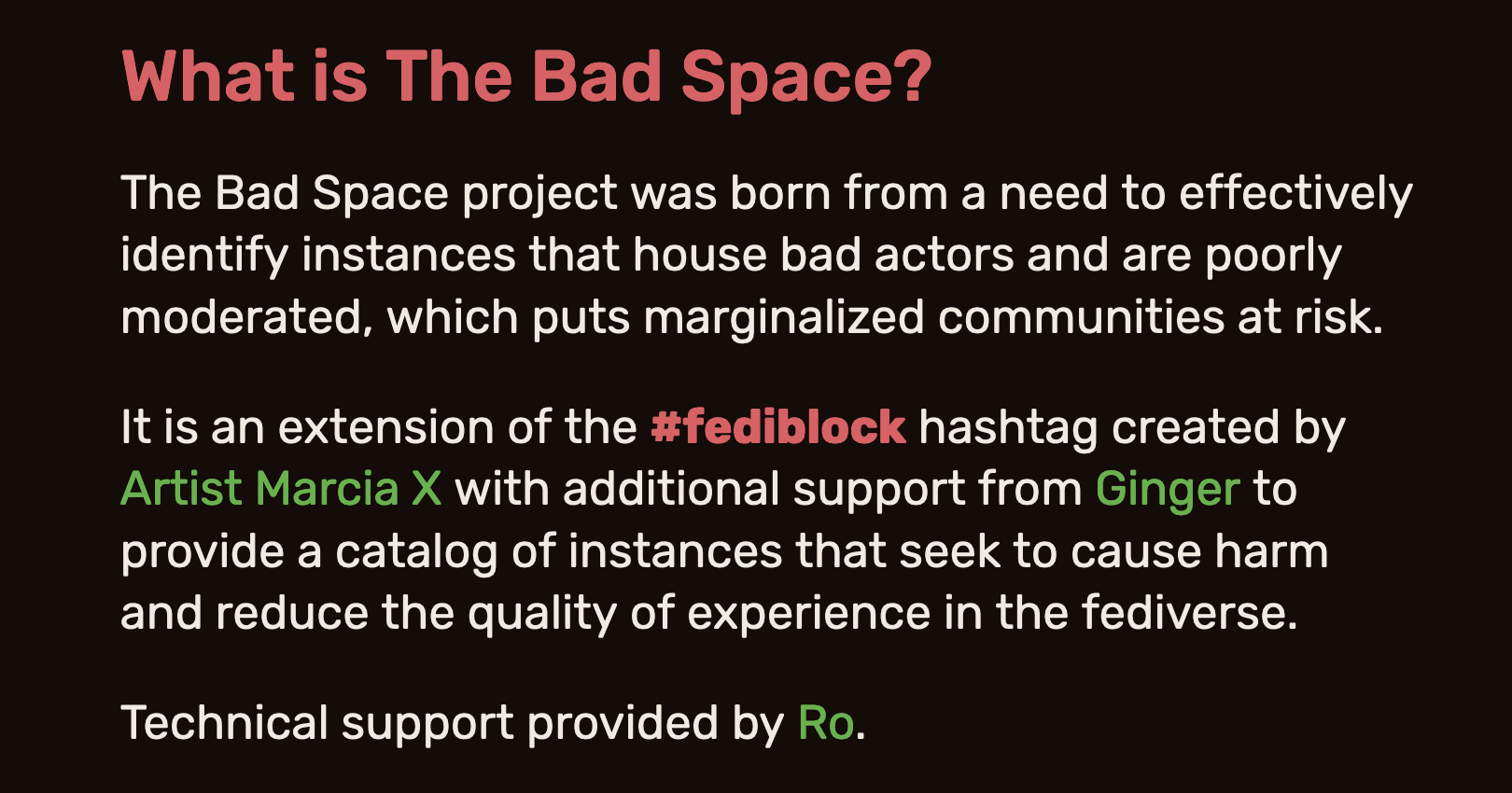

"The Bad Space project was born from a need to effectively identify instances that house bad actors and are poorly moderated, which puts marginalized communities at risk.

It is an extension of the #fediblock hashtag created by Artist Marcia X with additional support from Ginger to provide a catalog of instances that seek to cause harm and reduce the quality of experience in the fediverse.

Technical support provided by Ro."

– thebad.space/about, September 2023

The ecosystem of interconnected social networks known as the fediverse has great potential as an alternative to centralized corporate networks. With Elmo transforming Twitter into a machine for fascism and disinformation, and Facebook continuing to allow propaganda and hate speech to thrive while censoring support for Palestinians, the need for alternatives is more critical than ever.

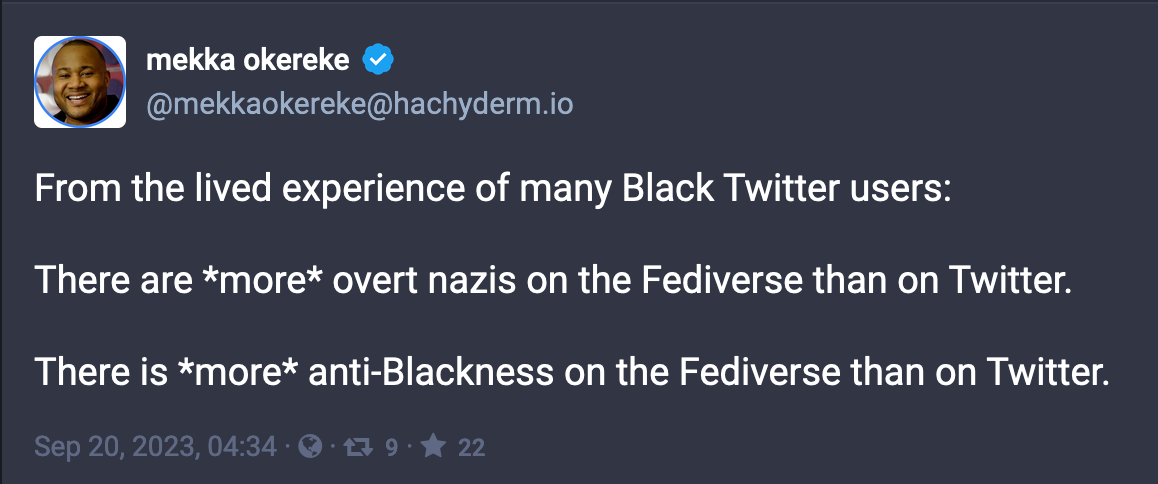

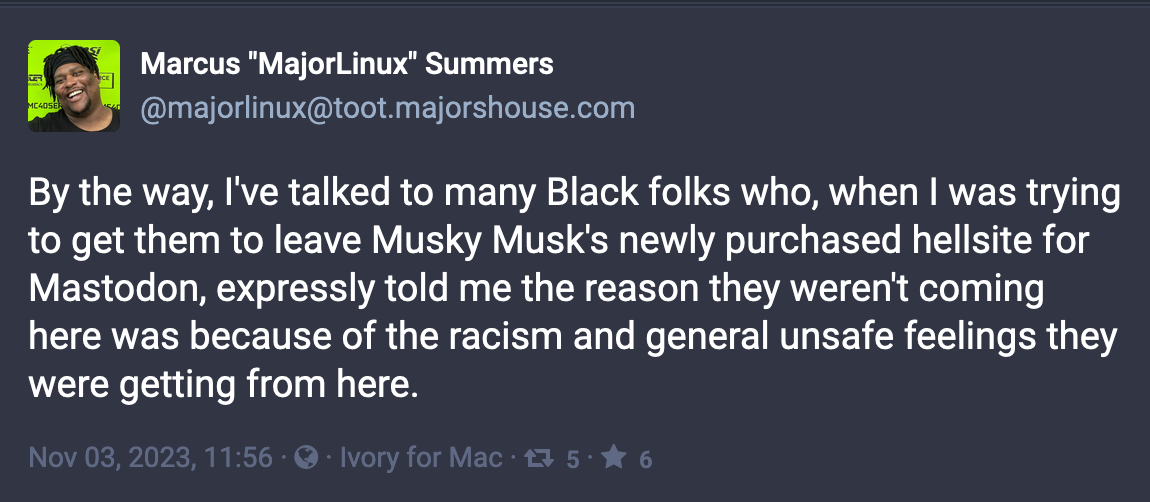

But even though millions of people left Twitter in 2023 – and millions more are ready to move as soon as there's a viable alternative – the fediverse isn't growing.1 One reason why: Today's fediverse is unsafe by design and unsafe by default – especially for Black and Indigenous people, women of color, LGBTAIQ2S+ people2, Muslims, disabled people and other marginalized communities.

"The fediverse is an ecosystem of abuse."

– Artist Marcia X, Ecosystems of Abuse, December 2022

The 1.35 million active users in today's fediverse are spread across more than 20,000 instances (aka servers) running a wide variety of software. On instances with active and skilled moderators and admins it can be a great experience. But not all instances are like that. Some are bad instances, filled with nazis, racists, misogynists, anti-LGBTAIQ2S+ haters, anti-Semites, Islamophobes, and/or harassers. And even on the vast majority of instances whose policies prohibit racism (etc.), relatively few of the moderators in today's fediverse have much experience with anti-racist or intersectional moderation – so very often racism (etc.) is ignored or tolerated when it inevitably happens.

Not only that, widely-adopted fediverse software platforms like Mastodon, Lemmy, et al. have historically prioritized connections and convenience over safety – and lack some of the basic tools that social networks like Twitter and Facebook give people to protect themselves.

Technologies like The Bad Space, designed with a focus on protecting marginalized communities, can play a big role in making the fediverse safer and more appealing for everybody (well, except for harassers, racists, fascists, and terfs – but that's a good thing).

"After all, when your most at-risk and disenfranchised are covered by your product, we are all covered."

– Afsaneh Rigot, Design From the Margins

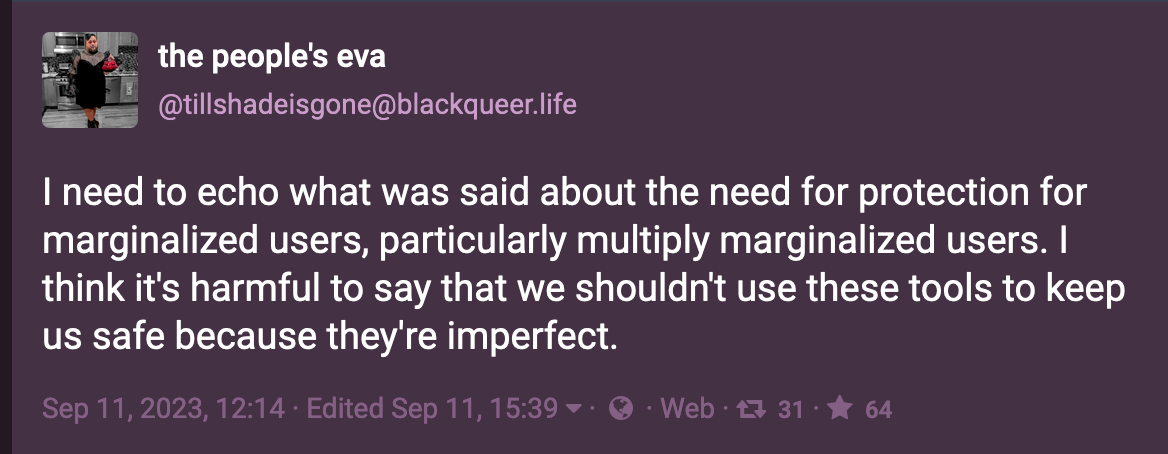

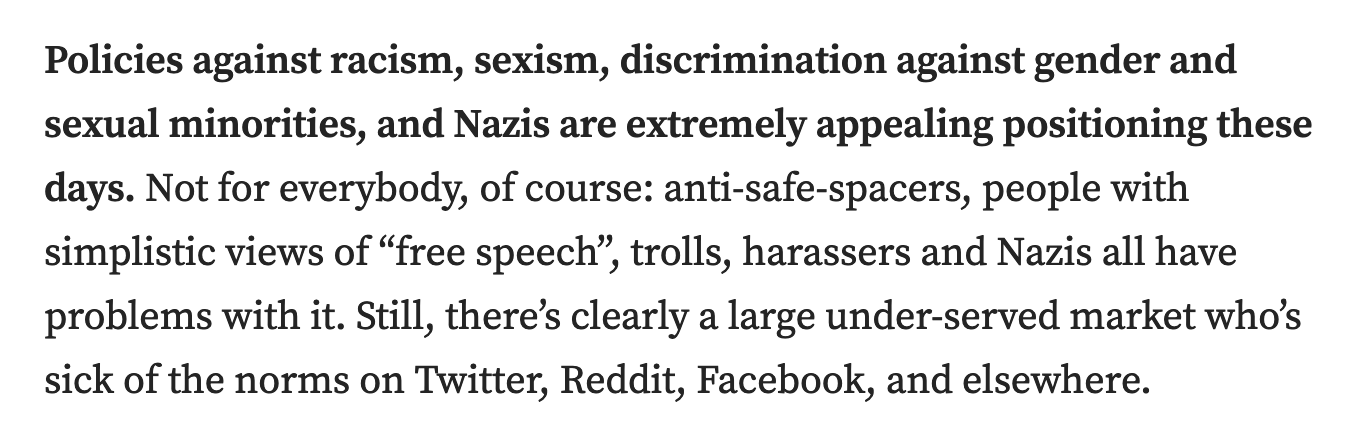

And taking an anti-racist, pro-LGBTAIQ2S+ approach is likely to be especially appealing to people who are sick of the racist, anti-LGBTAIQ2S+ norms of other social networks.3

The Bad Space is still at an early stage. As the heated discussions over the last couple of months about it and the Federation Safety Enhancement Project (FSEP) requirements document (authored by Roland X. Pulliam, aka Ro, and funded by the Nivenly Foundation) have highlighted, there are certainly areas for improvement – as well as grounds for concern about tools like blocklists (aka denylists) that today's fediverse relies on, including potential anti-trans biases. I'll explore all of these topics, along with the opportunities, in more detail in later posts in this series.

Still, the initial functionality combined with the focus on helping marginalized people protect themselves is a promising start. And one important aspect of The Bad Space is that it's not just focused on a single software platform – or, in principle, even limited to the fediverse.4

Unfortunately, there's also a lot of resistance to this approach. The racist and anti-trans language and harassment during the heated discussions of The Bad Space over the last two months highlight the challenges.

- At the systemic level, technology that lets Black people protect themselves from racism is a threat to white supremacy; and an alliance between Black people (including Black queer, trans, and non-binary people) and anti-racist queer and trans allies is doubly-threatening to cis white supremacy, which relies on preventing alliances like this.

- At the individual level, it’s threatening to racist white people (including racist white trans, queer, and non-binary people) – and to “non-racist”5 white people (including "non-racist" white trans, queer, and non-binary people) who don’t want to share power or impact their friendships with racist white people.6

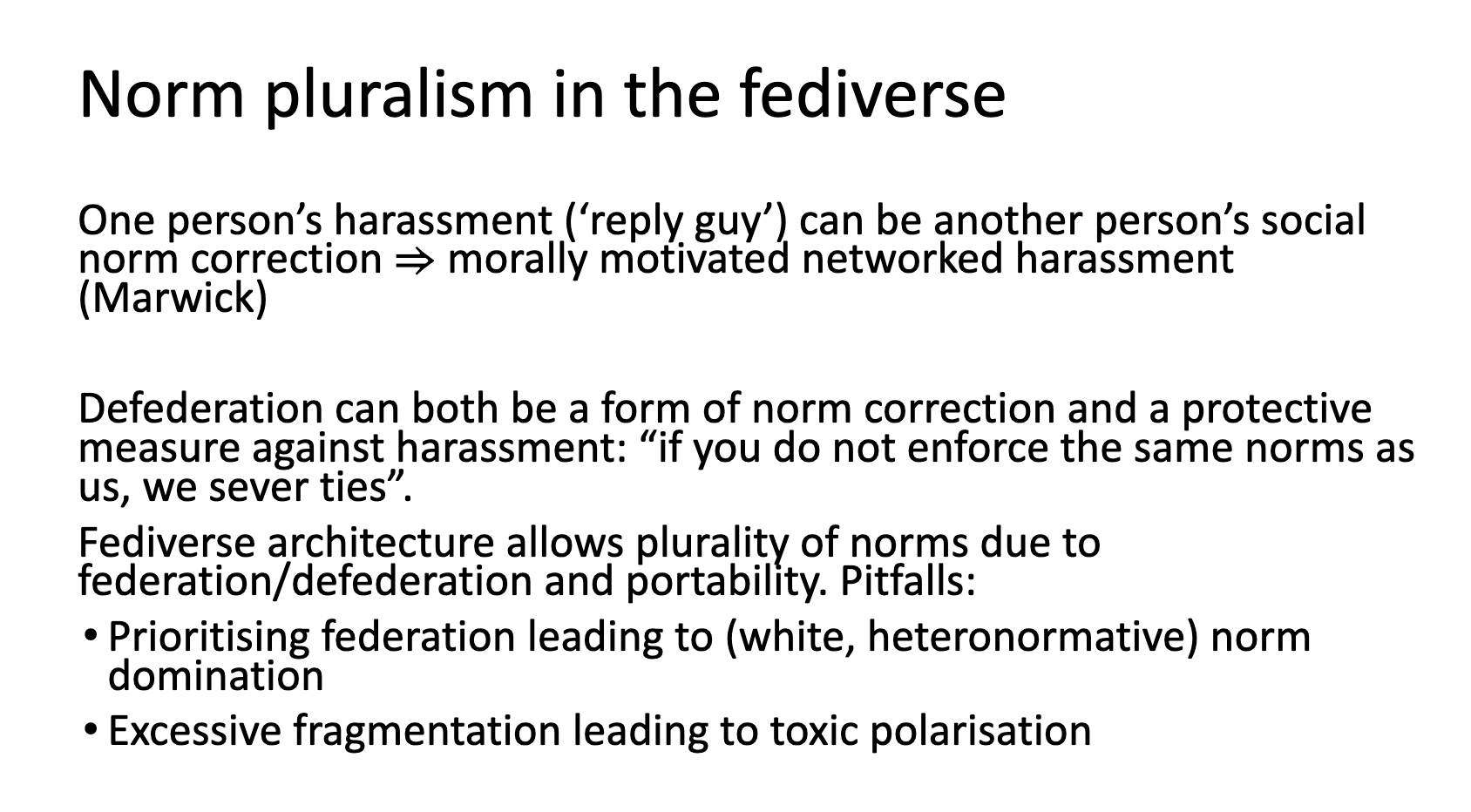

And philosophically, many of the most prominent developers and influencers in today's fediverse prioritize connectivity, convenience, and reach over safety and consent.

"[C]ommitments to safer spaces in some ways run counter to certain interpretations and norms of openness on which open source rests."

– Christina Dunbar-Hester, in Hacking Diversity

But despite the depressingly familiar racist dynamics, with echoes for so many people of past experiences of traumatic harassment and abuse on the fediverse or elsewhere, there are also some very encouraging differences – especially the increased visibility and power of Black and Indigenous people and allies, thanks to the influx from Twitter over the last year reinforcing the efforts of long-time fediversians. If the fediverse decides to really start prioritizing the safety of marginalized communities, there are plenty of other opportunities for short-term progress – especially if funding materializes. For example:

- More resources going to new projects as well as Mastodon and Lemmy forks with diverse teams, led by marginalized people, and prioritizing safety of marginalized people

- More education for moderators and individual fedizens interested in creating a more anti-racist approach, sharing best practices and "positive deviance" examples

- Fediverse equivalents of valuable tools on other platforms like Block Party7 and FilterBuddy that have also been built in conjunction with people who are at risk from harassment.

Then again, like I said, there's a lot of resistance.

So it's quite possible that we're seeing a fork in the fediverse, with some regions of the fediverse moving in an anti-racist direction ... while others shrug their collective shoulders, try to maintain today's power dynamics, or move in different directions. If so, then that's a good thing. Tindall's "Social Archipelago" of communities interacting (and not interacting) and forming "strands and islands and gulfs," Kat Marchán's caracoles (concentric federations of instances, combined with more intentional federation), and ophiocephalic's somewhat-similar fedifams are three possible geographies of how this could play out.8

"The existing “fediverse” is a two-edged sword for a social network with an anti-harassment and anti-racism focus."

– Lessons (so far) from Mastodon, 2017/8

And we're also likely to see new post-fediverses, potentially compatible (at least to some extent) with the fediverse and other networks but focusing on safety and anti-racism and developed by diverse teams working with diverse communities from the beginning.

"One way to look at today's fediverse is a prototype at scale, big enough to get experience with the complexities of federation, usable enough for many people that it's enjoyable for social network activities but with big holes including privacy and other aspects of safety, equity, accessibility, usability, sustainability and ...."

– Today's fediverse is prototyping at scale

In any case there’s a lot of potential learning that’s relevant both for the fediverse and for whatever comes next.

So it's worth digging into the details of the situation: the fediverse's long-standing problems with abuse and harassment; the strengths and weaknesses of current tools; the approaches tools like The Bad Space, Fediseer, and FSEP take; and how the fediverse as a whole can seize the moment and build on the progress that's being made.

There’s a lot to talk about!

Today's fediverse is unsafe by design and unsafe by default

The most obvious source of anti-Blackness and other forms of identity-based hate speech and harassment in today's fediverse is the hundreds of bad instances run by nazis, other white supremacists, channers, or trolls who allow and even encourage identity-based hate speech and other forms of harassment.

This isn't new. In a 2022 discussion, Lady described GNU Social's late-2016 culture as "a bunch of channer shit and blatantly anti-gay and anti-trans memes", and notes that "the instance we saw most often on the federated timeline in those days was shitposter.club, a place which virtually every respectable instance now has blocked." clacke agrees that "the famous channer-culture and freezepeach instances came up only months before Mastodon's "Show HN"" in 2016. Creatrix Tiara's August 2018 Twitter thread discusses a 2017 racist dogpiling led by an instance hosted by an alt-right podcaster.

But just like Nazis and card-carrying TERFs aren't the only source of racism, transphobia, and other bigotry in society, bad instances are far from the only source of racism, transphobia, and other bigotry in the fediverse. Some instances – including some of the largest, like pawoo.net – are essentially unmoderated. Others have moderators who don't particularly care about moderating from an anti-racist and pro-LGBTAIQ2S+ perspective. And even on instances where moderators are trying to do the right thing, most don't have the training or experience needed to do effective intersectional moderation. So finding ways to deal with bad instances is necessary, but by no means sufficient.

This also isn't new. Dogpiling, weaponized content warning discourse, and a fig leaf for mundane white supremacy looks at the state of Mastodon in early 2017, and quotes Margaret KIBI's The Beginnings, which describes how content warnings (CWs) were "weaponized" when "white, trans users—who, for the record, posted un‐CWed trans shit in their timeline all the time—started taking it to the mentions of people of colour whenever the subject of race came up." Creatrix Tiara's November 2022 Twitter thread has plenty of examples from BIPOC fediversians about similar racialized CW discourse.

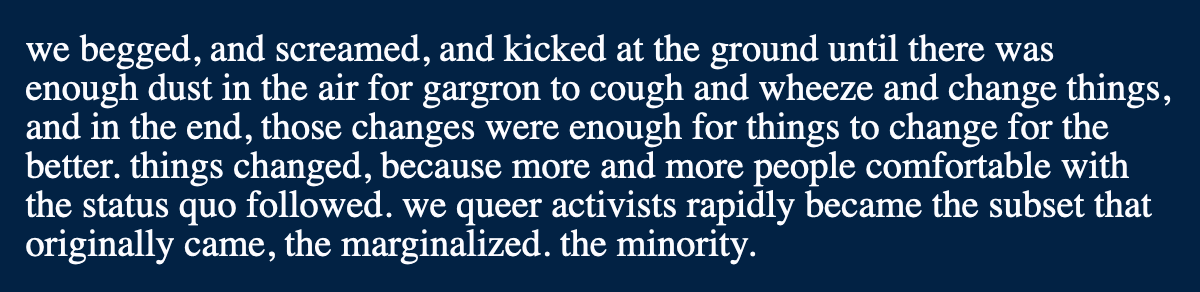

"we made this space our own through months of work and a fuckton of drama and infighting. but we did a good enough job that when mastodon took off, OUR culture was the one everybody associated it with"

– Lady, November 2022

In Mastodon's early days of 2016-2017, queer and trans community members drove improvements to the software and developed tools which – while very imperfect – help individuals and instance admins and moderators address these problems, at least to some extent. Unfortunately, as I discuss in The Battle of the Welcome Modal, A breaking point for the queer community, The patterns continue ..., and Ongoing contributions – often without credit, hostile responses from Mastodon's Benevolent Dictator for Life (BDFL) Eugen Rochko (aka Gargron) and a pattern of failing to credit people for their work drove many key contributors away.

Others remain active – and forks like glitch-soc continue to provide additional tools for people to protect themselves – but Mastodon's pace of innovation had slowed dramatically by 2018.9 Other software platforms like Akkoma, Streams, and Bonfire have some much more powerful tools ... but over 80% of the active users in today's fediverse are on instances running Mastodon. Worse, some of the newer platforms like Lemmy (a federated reddit alternative) have even fewer tools than Mastodon.

Not only that, some of the protections that Mastodon provides aren't turned on by default – or are only available in forks, not the official release. For example:

- while Mastodon does offer the ability to ignore private messages from people who you aren't following – great for cutting down on harassment as well as spam – that's not the default. Instead, by default your inbox is open to nazis, spammers, and everybody else until you've found and updated the appropriate setting on one of the many settings screens.10

- by default blocking on Mastodon isn't particularly effective unless the instance admin has turned on a configuration option11

- by default all follow requests are automatically approved, unless you've found and updated the appropriate setting on one of the many settings screens.

- local-only posts (originally developed by the glitch-soc fork in 2017 and also implemented in Hometown and other forks) give people the ability to prevent their posts from being shared with other instances (who might have harassers, terfs, nazis, and/or admins or software that doesn't respect privacy) ... but Rochko has refused to include this functionality in the main Mastodon release.12

- Mastodon supports "allow-list" federation,13 allowing admins to choose whether or not to agree to federate with nazi instances; but Mastodon's documentation describes this as "contrary to Mastodon’s mission of decentralization", so by default, all federation requests are accepted.
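To make the difference between the two federation modes concrete, here's a minimal Python sketch, assuming a simplified model in which a server decides per remote domain whether to federate. `FederationPolicy` and its fields are hypothetical illustrations, not Mastodon's code or configuration settings.

```python
from dataclasses import dataclass, field


@dataclass
class FederationPolicy:
    """Hypothetical model of instance-level federation (not Mastodon's code)."""
    allowlist_mode: bool = False          # False = default-open federation
    allowed: set[str] = field(default_factory=set)
    blocked: set[str] = field(default_factory=set)

    def accepts(self, domain: str) -> bool:
        domain = domain.lower()
        if domain in self.blocked:
            return False                   # explicit blocks apply in either mode
        if self.allowlist_mode:
            return domain in self.allowed  # opt-in: only approved instances
        return True                        # default-open: everyone else gets in


# Default-open: an unknown instance is accepted until someone blocks it.
open_policy = FederationPolicy(blocked={"bad.example"})
print(open_policy.accepts("new.example"))   # True

# Allow-list: an unknown instance is rejected until an admin approves it.
strict = FederationPolicy(allowlist_mode=True, allowed={"friendly.example"})
print(strict.accepts("new.example"))        # False
```

The asymmetry is the point: in default-open mode, a newly created nazi instance is federated with until an admin notices it, while in allow-list mode it stays out until an admin agrees to let it in.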

And the underlying ActivityPub protocol the fediverse is built on doesn't design in safety.

"Unfortunately from a security and social threat perspective, the way ActivityPub is currently rolled out is under-prepared to protect its users."

– OcapPub: Towards networks of consent, Christine Lemmer-Webber

"The basics of ActivityPub are that, to send you something, a person POSTs a message to your Inbox.

This raises the obvious question: Who can do that? And, well, the default answer is anybody."

– Erin Shepherd, in A better moderation system is possible for the social web, November 2022
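The contrast Shepherd describes can be sketched in a few lines of Python, assuming a simplified model in which the receiving server checks each incoming POST against an instance-level blocklist and, optionally, the recipient's follow list. The function and parameter names are hypothetical; real ActivityPub servers also verify signatures and handle many more cases.

```python
def accept_inbox_post(sender: str, recipient_follows: set[str],
                      blocked_domains: set[str], require_follow: bool) -> bool:
    """Decide whether to deliver an incoming message to a user's inbox.

    Hypothetical sketch: `sender` is a handle like "alice@example.social".
    None of these names come from the ActivityPub spec or a real server.
    """
    domain = sender.rsplit("@", 1)[-1].lower()
    if domain in blocked_domains:
        return False                          # instance-level block applies first
    if require_follow:
        return sender in recipient_follows    # consent-based: only people you follow
    return True                               # status-quo default: anybody can deliver
```

Setting `require_follow=True` corresponds roughly to the opt-in "ignore messages from people you don't follow" setting described earlier; the point of the sketch is that the default branch is the permissive one.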

Despite these problems, many people on well-moderated instances have very positive experiences in today's fediverse. Especially on small-to-medium-size instances, experienced moderators can find even Mastodon's tools good enough.

However, many instances aren't well-moderated. So many people have very negative experiences in today's fediverse. For example ...

"During the big waves of Twitter-to-Mastodon migrations, tons of people joined little local servers ... and were instantly overwhelmed with gore and identity-based hate."

– Erin Kissane, Blue skies over Mastodon (May 2023)

"It took me eight hours to get a pile of racist vitriol in response to some critiques of Mastodon."

– Dr. Johnathan Flowers, The Whiteness of Mastodon (December 2022)

"I truly wish #Mastodon did not bomb it with BIPOC during the Twitter migration.... I can't even invite people here b/c of what they have experienced or heard about others experiencing."

– Damon Outlaw, May 2023

Instance-level federation choices are a blunt but powerful safety tool

"Instance-level federation choices are an important tool for sites that want to create a safer environment (although need to be complemented by user-level control and other functionality)."

– Lessons (so far) from Mastodon, originally written May 2017

For people who do have good experiences, one of the key reasons is a powerful tool Mastodon first developed back in 2017: instance-level blocking, the ability to

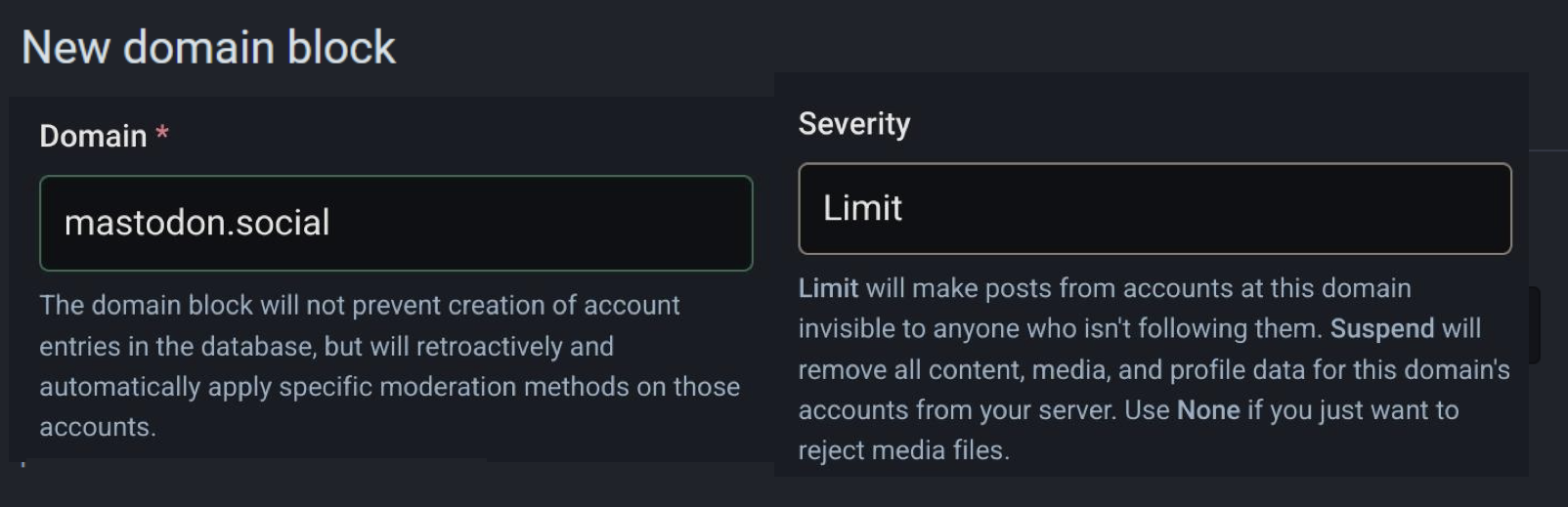

- defederate (aka suspend) another instance, preventing future communications and removing all existing connections between accounts on the two instances

- limit (aka silence) another instance, limiting the visibility of posts and notifications from that instance to some extent (although not completely) unless people are following the account that makes them.

Terminology note: yes, it's confusing. Different people use different terms for similar things, and sometimes the same term for different things ... and the "official" terminology has changed over the years. See the soon-to-be-written "Terminology" section at the end for details.

Of course, instance-level blocking is a very blunt tool, and can have significant costs as well. Mastodon unhelpfully magnifies the costs by not giving an option to restore any connections that are severed by defederation,14 not letting people know when they've lost connections because of defederation, and making the experience of moving between instances unpleasant and awkward.

Opinions differ on how to balance the costs and benefits, especially in situations where there are some bad actors on an instance as well as lots of people who aren't bad actors.

Instance blocking decisions often spark heated discussions. For example, suppose an instance's admin makes a series of jokes that some people consider perfectly fine but others consider racist, and then verbally attacks people who report the posts or call them out. Others on their instance join in as well, defending somebody they see as unfairly accused, and in the process make some comments that they think are just fine but others consider racist. When those comments are reported, the moderators (who think they're just fine) don't take action. Depending on how you look at it, it's either

- a pattern of racist behavior by the admin and members of the instance, failure to moderate racist posts, and brigading by the members of the instance,

- or a pattern of false accusations and malicious reporting from people on other instances

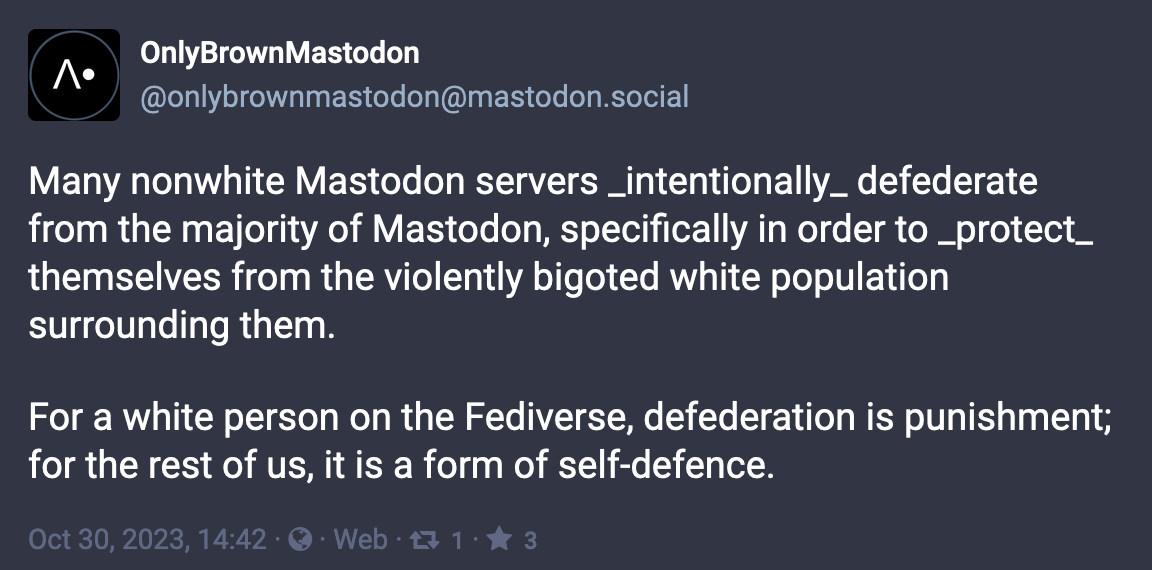

Is defederation (or limiting) appropriate? If so, who should be defederated or limited – the instance with the comments that some find racist, or the instances reporting comments as racist that others think are just fine? Unsurprisingly, opinions differ. In situations like this, white people are more likely to be forgiving of the posts and behavior that people of color see as racist – and more likely to see any resulting defederation or limiting as a punishment.

I'll delve more into the other scenarios where opinions differ on whether defederation or limiting is or isn't appropriate in the next section. First, though, I really want to emphasize the value of instance-level blocking.

- Defederating from a few hundred "worst-of-the-worst" instances makes a huge difference.

- Defederating or limiting large loosely-moderated instances (like the "flagship" mastodon.social) that are frequent sources of racism, misogyny, and transmisia2 means even less harassment and bigotry (as well as less spam).

- Mass defederation can also send a powerful message. When far-right social network Gab started using Mastodon software in 2019, most fediverse instances swiftly defederated from it. Bye!!!!!!!

Instance-level blocking really is a very powerful tool.

1 As I said a few months ago, describing an incident where an admin defederated an instance and then on further reflection decided it had been an overreaction,

"[A]fter six years why wasn't there an option of defederating in a way that allows connections to be reestablished when the situation changes and refederation is possible? If you look in inga-lovinde's Improve defederation UX March 2021 feature request on Github, it's pretty clear that it's not the first time stuff like this happened."

And it wasn't the last time stuff like this happened either. In mid-October, a tech.lgbt moderator decided to briefly suspend and unsuspend connections to servers that had been critical of tech.lgbt, in hopes that it would "break the tension and hostility the team had seen between these connections." Oops. As the tech.lgbt moderators commented afterwards, "severing connections is NOT a way to break hostility in threads and DMs."

2 transmisia – hate for trans people – is increasingly used as an alternative to transphobia.

Instance-level federation decisions reflect norms, policies, and interpretations

For some instances, defederating from Gab was based on norms: we don't tolerate white supremacists, Gab embodies white supremacy, so we want nothing to do with them. For others, it was more a matter of safety: defederating from Gab cuts down on harassment. And for some, it was both.

Even when there's apparent agreement on a norm, interpretations are likely to differ. For example, there's wide agreement on the fediverse that anti-Semitism is bad. But what happens when somebody makes a post about the situation in Gaza that Zionist Jews see as anti-Semitic and anti-Zionist Jews don’t? If the moderators don’t take the posts down, are they being anti-Semitic? Conversely, if the moderators do take them down, are they being anti-Palestinian? Is defederation (or limiting) appropriate – or is calling for defederation anti-Semitic? To me, as an anti-Zionist Jew, the answers seem clear;16 once again, though, opinions differ.

And (at the risk of sounding like a broken record) in many situations, moderators – or people discussing moderator decisions – don't have the knowledge to understand why something is racist. Consider this example, from @futurebird@sauropod.win's excellent Mastodon Moderation Puzzles.

"You get 4 reports from users who all seem to be friends all pointing to a series of posts where the account is having an argument with one of the 4 reporters. The conversation is hostile, but contains no obvious slurs. The 4 reports say that the poster was being very racist, but it's not obvious to you how."

As a mod what do you do?

I saw a spectacular example of this several months ago, with a series of posts from white people questioning an Indigenous person's identity, culture, and lived experiences. Even though it didn't include slurs, multiple Indigenous people described it as racist ... but the original posters, and many other white people who defended them, didn't see it that way. The posts eventually got taken down, but even today I see other white people characterizing the descriptions of racism as defamatory.

So discussions about whether defederation (or limiting) is appropriate often become contentious in situations when ...

- an instance's moderators frequently don't take action when racist, misogynistic, anti-LGBTQ+, anti-Semitic, anti-Muslim, or casteist posts are reported.

- an instance's moderators frequently only take action after significant pressure and a long delay when racist, misogynistic, anti-LGBTQ+, anti-Semitic, anti-Muslim, or casteist posts are reported.

- an instance hosts a known racist, misogynistic, or anti-LGBTQ+ harasser

- an instance's admin or moderator is engaging in – or has a history of engaging in – harassment

- an instance's admin or moderator has a history of anti-Black, anti-Indigenous, or anti-trans activity

- an instance's members repeatedly make false accusations that somebody is racist or anti-trans

- an instance's members try to suppress discussions of racist or anti-trans behavior by brigading people who bring the topics up or spamming the #FediBlock hashtag

- an instance's moderators retaliate against people who report racist or anti-trans posts

- an instance's moderator, from a marginalized background, is accused of having a history of sexual assault – but claims that it's a false accusation, based on a case of mistaken identity

- an instance's members don't always put content warnings (CWs) on posts with sexual images for content from their everyday lives17

Similarly, there's often debate about if and when it's appropriate to re-federate. What if an instance has been defederated because of concerns that an admin or moderator is a harasser who can't be trusted, and then the person steps down? Or suppose multiple admittedly-mistaken decisions by an instance's moderators that impacted other instances lead to them being silenced, but then a problematic moderator leaves the instance and they work to improve their processes. At what point does it make sense to unsilence them? What if it turns out the processes haven't improved, and/or more mistakes get made?

Transitive defederation – defederating from all the instances that federate with a toxic instance – is particularly controversial. Is it grounds for defederation if an instance federates with a white supremacist instance like Stormfront or Gab, or an anti-trans hate instance like kiwifarms? Many see federating with an instance that tolerates white supremacists as tolerating white supremacists; others don’t – and others agree that it’s tolerating white supremacists but don’t see that as grounds for defederation. What if an instance federates with channer shitposting instances?

Norm-based transitive defederation can be especially contentious, but there can also be disagreements about safety-based transitive defederation. In Why just blocking Meta’s Threads won’t be enough to protect your privacy once they join the fediverse, for example, I describe how indirect data flows could leave people at risk without transitive defederation, but opinions differ on whether this is a severe enough safety risk to justify what some see as the "nuclear option" of transitive defederation.
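Mechanically, transitive defederation is a simple set computation; the hard part is the judgment calls above. Here's a minimal Python sketch, assuming you already have a map from each known instance to the instances it federates with (how that data is gathered, and how trustworthy it is, is left out). `transitive_blocks` is a hypothetical helper, not part of any fediverse software.

```python
def transitive_blocks(peers: dict[str, set[str]], toxic: set[str]) -> set[str]:
    """Return instances that federate with any instance in `toxic`.

    Hypothetical sketch: `peers` maps each known instance domain to the
    set of domains it federates with. The toxic instances themselves are
    excluded, since they'd already be blocked directly.
    """
    return {
        domain
        for domain, federates_with in peers.items()
        if domain not in toxic and federates_with & toxic
    }


peers = {
    "a.example": {"toxic.example", "b.example"},
    "b.example": {"a.example"},
    "c.example": set(),
}
print(transitive_blocks(peers, {"toxic.example"}))  # {'a.example'}
```

This sketch only goes one hop: b.example federates with a.example, which federates with the toxic instance, but b.example isn't flagged. Whether to stop there is itself one of the judgment calls that makes transitive defederation contentious.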

Part 2

Enter blocklists

With 20,000 instances in the fediverse, how can admins know which ones are run by bad actors and should be defederated, and which are so loosely moderated that they should be limited?

Back in 2017, Artist Marcia X created the #FediBlock hashtag, and Ginger helped spread it via faer networks, to make it easier for admins and users to share information about instances spreading harassment and hate – instances that admins may want to defederate or limit.

#FediBlock continues to be a useful channel for sharing this information, although it has its limits. For one thing, anybody can post to a hashtag, so without knowing the reputation of the person making a post to the hashtag it's hard to know how much credibility to give the recommendation. And Mastodon's search functionality has historically been very weak, so there's no easy way to search the #FediBlock hashtag to see whether specific instances have been mentioned. Multiple attempts to provide collections of #FediBlock references (without involving or crediting its creators) have been abandoned; without curation, a collection isn't particularly useful – and can easily be subverted.

Long-time admins know about a few low-profile well-curated sites that record some vetted #FediBlock entries, and Google and other search engines have partial information, but it's all very time-consuming and hit-or-miss. And with hundreds of problematic instances out there, blocking them individually can be tedious and error-prone – and new admins often don't know to do it.

Starting in early 2023, Mastodon began providing the ability for admins to protect themselves from hundreds of problematic instances at a time by uploading blocklists (aka denylists): lists of instances to suspend or limit.

Terminology note: blocklist or denylist is preferred to the older blacklist (and allowlist to whitelist), since the older terms embed the racialized assumption that black is bad and white is good. Blocklist is more common today, but it's close enough to blacklist that denylist is gaining popularity.

As of late 2023, many instances make their blocklists available for others to upload, and blocklists from Seirdy (Rohan Kumar), Gardenfence, and Oliphant all have significant adoption. Here's how Seirdy's My Fediverse blocklists describes the 140+ instances on his FediNuke.txt blocklist:

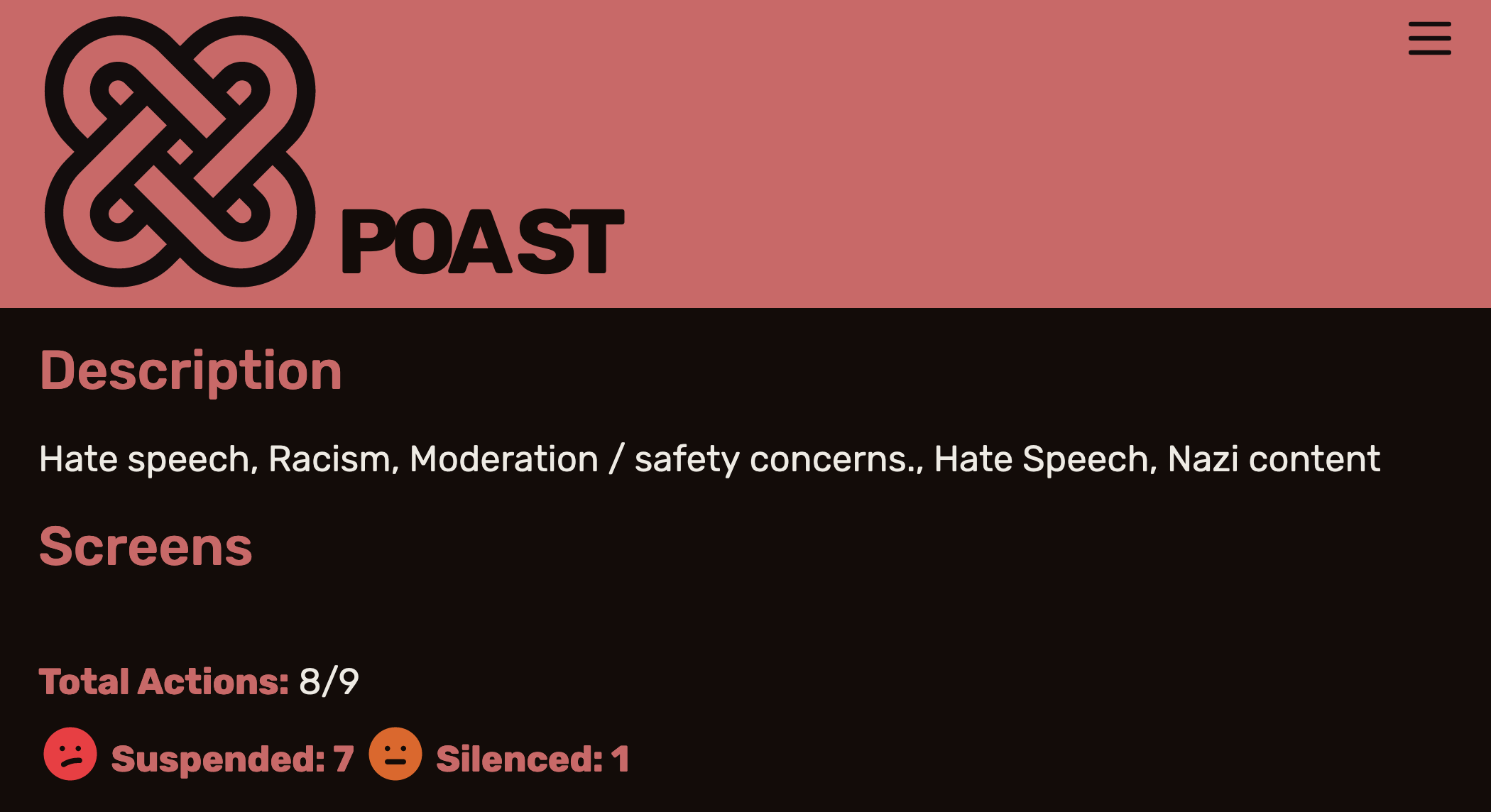

"It’s kind of hard to overlook how shitty each instance on the FediNuke.txt subset is. Common themes tend to be repeated unwelcome sui-bait2 from instance staff against individuals, creating or spreading dox materials against other users, unapologetic bigotry, uncensored shock content, and a complete lack of moderation."Seirdy's Receipts section gives plenty of examples of just how shitty these instances are. For froth.zone, for example, Seirdy has links including "Blatant racism, racist homophobia", and notes that "Reporting is unlikely to help given the lack of rules against this, some ableism from the admin and some racism from the admin." Seirdy also links to another instance's about page which boasts that "racial pejoratives, NSFW images & videos, insensitivity and contempt toward differences in sexual orientation and gender identification, and so-called “cyberbullying” are all commonplace on this instance." Nice.

Of course, blocklists aren't limited to shitty instances. Oliphant's git blocklists page highlights the range of approaches. Oliphant's "tier 0 council" (which he recommends as a "bare minimum" blocklist for new servers) has 240 instances, Seirdy's Tier 0 (which he describes as "a decent place to start" if you’re starting a well-moderated instance) has 400+, and Oliphant's "tier 3" has over 1000 instances.

The Oliphant and Gardenfence blocklists are based on combining the results of multiple instances' blocklists, with some threshold for inclusion on the "consensus" (or "aggregate") blocklist; Seirdy similarly uses a combination of multiple blocklists as the first step in his process. On the one hand, a purely algorithmic approach reduces the direct impact of bias and mistakes by the person or organization providing the blocklist. Then again, it introduces the possibility of mistakes or bias by one or more of the sources, selecting which sources to use, or the algorithm combining the various blocklists. Seirdy's Mistakes made analysis documents a challenge with this kind of approach.

"One of Oliphant’s sources was a single-user instance with many blocks made for personal reasons: the admin was uncomfortable with topics related to sex and romance. Blocking for personal reasons on a personal instance is totally fine, but those blocks shouldn’t make their way onto a list intended for others to use....

Tyr from pettingzoo.co raised important issues in a thread after noticing his instance’s inclusion in the unified-max blocklist. He pointed out that offering a unified-max list containing these blocks is a form of homophobia: it risks hurting sex-positive queer spaces."

Requiring more agreement between the sources reduces the impact of bias from any individual source, although it also increases the number of block-worthy instances that aren't on the list. Gardenfence, for example, requires agreement of six of its seven sources, and has 136 entries; as the documentation notes, "there are surely other servers that you may wish to block that are not listed." As Seirdy notes, no matter what blocklist you're starting with,

"if your instance grows larger (or if you intend to grow): you should be intentional about your moderation decisions, present and past. Your members ostensibly trust you, but not me."
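To make the threshold trade-off concrete, here is a minimal sketch of threshold-based aggregation of the general kind described above. It assumes each source is just a set of domains and that a domain is included when at least `threshold` sources list it; it is not the actual code used by Oliphant, Gardenfence, or Seirdy, and the source names and domains are made up.

```python
from collections import Counter


def consensus_blocklist(sources: dict[str, set[str]], threshold: int) -> set[str]:
    """Return the domains that at least `threshold` sources block.

    `sources` maps a (hypothetical) source name to the set of domains it blocks.
    """
    counts: Counter = Counter()
    for domains in sources.values():
        counts.update(set(domains))  # a source counts at most once per domain
    return {domain for domain, n in counts.items() if n >= threshold}


# Illustrative data only: not real sources or real blocklist entries.
sources = {
    "source-a": {"bad.example", "spam.example", "personal-dislike.example"},
    "source-b": {"bad.example", "spam.example"},
    "source-c": {"bad.example"},
}

for threshold in (1, 2, 3):
    print(threshold, sorted(consensus_blocklist(sources, threshold)))
```

With a threshold of 2, the entry that only one source added (standing in for a personal-reasons block) drops out; with a threshold of 3, spam.example drops out too, even though two sources agree on it. That is the same trade-off between source bias and coverage that Gardenfence's six-of-seven rule makes.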

Any blocklist, whether it's for an individual instance or intended to be shared between instances, reflects the creators' perspectives on this and dozens of other issues where there isn't any fediverse-wide agreement on when it's appropriate to defederate or silence other instances. Since any blocklist by definition takes a position on all these issues, any blocklist is likely to be supported by those who share the creators' perspectives – and vehemently opposed by those who see things differently.

And some people vehemently oppose the entire idea of blocklists, either on philosophical grounds or because of the risks of harms and abuse of power.

Widely shared blocklists can lead to significant harm

Blocklists have a long history. Usenet killfiles have existed since the 1980s, email DNSBLs since the late 90s, and Twitter users created The Block Bot in 2012. The perspective of a Block Bot user whom Shagun Jhaver et al. quote in Online harassment and content moderation: The case of blocklists (2018) is a good example of the value blocklists can provide to marginalized people:

"I certainly had a lot of transgender people say they wouldn’t be on the platform if they didn’t have The Block Bot blocking groups like trans-exclusionary radical feminists –TERFs.”

That said, widely-shared blocklists introduce risks of major harms – harms that are especially likely to fall on already-marginalized communities.

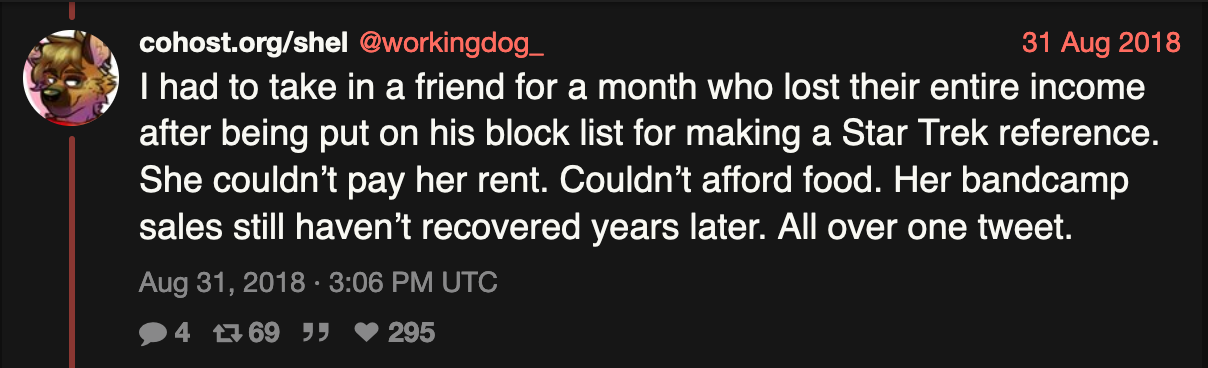

workingdog_'s thread from 2018 (written during the Battle of Wil Wheaton) describes a situation involving a different Twitter blocklist that many current Mastodonians had first-hand experience with: a cis white person arbitrarily put many trans people who had done nothing wrong on his widely-adopted blocklist of "most abusive Twitter scum" and as a result ...

"Tons of independent trans artists who earn their primary income off selling music on bandcamp, Patreon, selling art commissions, etc. got added to this block list and saw their income drop to 0. Because they made a Star Trek reference at a celebrity. Or for doing nothing at all."

Email blocklists have seen similar abuses; RFC 6471: Overview of Best Email DNS-Based List (DNSBL) Operational Practices (2012) notes that

“some DNSBL operators have been known to include "spite listings" in the lists they administer -- listings of IP addresses or domain names associated with someone who has insulted them, rather than actually violating technical criteria for inclusion in the list.”

And intentional abuse isn’t the only potential issue. RFC 6471 mentions a scenario where making a certain mistake on a DNSBL could lead to everybody using the list rejecting all email ... and mentions in passing that this is something that's actually happened. Oops.

"The trust one must place in the creator of a blocklist is enormous; the most dangerous failure mode isn’t that it doesn’t block who it says it does, but that it blocks who it says it doesn’t and they just disappear."

– Erin Shepherd, A better moderation system is possible for the social web

"Blocklists can be done carefully and accountably! But almost none of them ARE."

– Adrienne, a veteran of The Block Bot

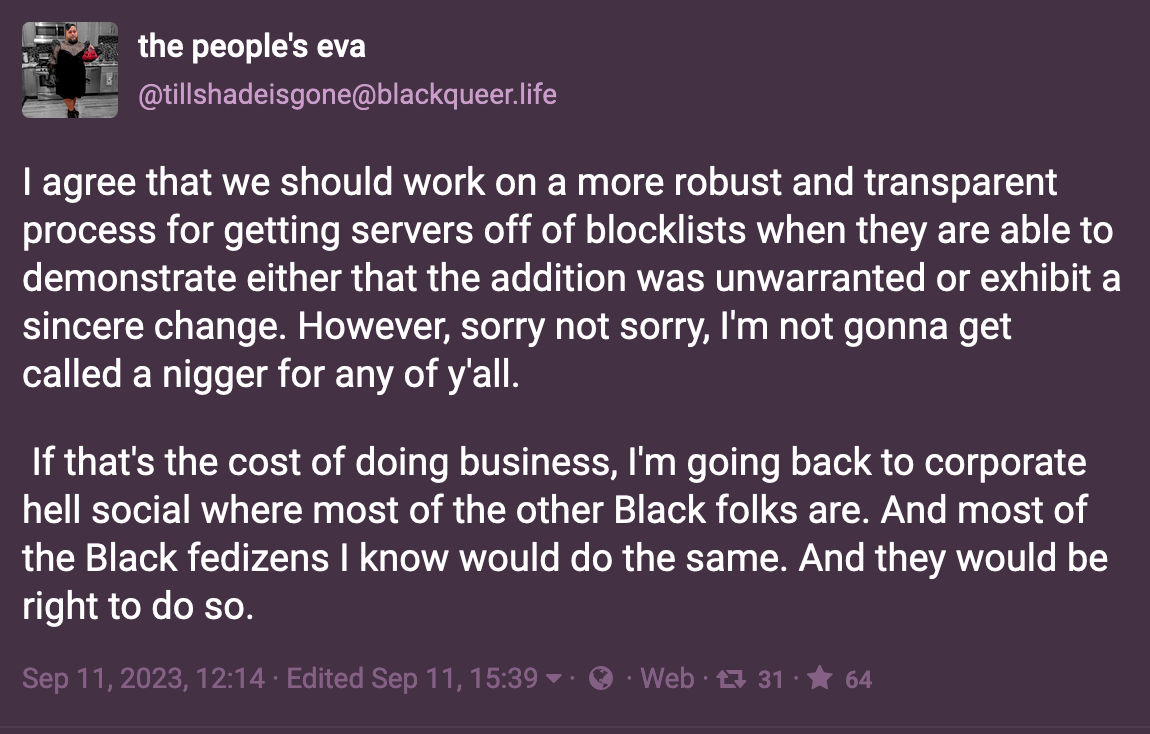

People involved in creating and using blocklists can take steps to limit the harms. An oversight and review process can reduce the risk of instances getting placed on a blocklist by mistake – or due to the creators' biases. Appeals processes can help deal with the situation when mistakes almost inevitably happen anyway. Sometimes problematic instances clean up their act, so blocklists need to have a process for being updated. Admins considering using a blocklist can double-check the entries to make sure they’re accurate and aligned with their instance's norms. The upcoming section on steps towards better blocklists discusses these and other improvements in more detail.

However, today's fediverse blocklists often have very informal review and appeals processes – basically relying on instances who have been blocked by mistake (or people with friends on those instances) to surface problems and kick off discussions by private messages or email. Some, like Seirdy's FediNuke, make it easier for admins to double-check them by including reasons and receipts (links or screenshots documenting specific incidents) for why instances appear on them. Others don't, or have only vague explanations ("poor moderation") for most instances.

To be clear, receipts aren't a panacea. In some situations, providing receipts can open up the blocklist maintainer (or people who had reported problems or previously been targeted) to additional harassment or legal risk. Some of the incidents that lead to instances being blocked can be quite complex, so receipts are likely to be incomplete at best. And even when receipts exist, it’s likely to take an admin a long time to check all the entries on a blocklist – and there are still likely to be disagreements about how receipts should be interpreted. Still, today's fediverse blocklists certainly have ample room for improvement on this front – and many others.

Blocklists potentially centralize power – although can also counter other power-centralizing tendencies

Another big concern about widely-adopted blocklists is their tendency to centralize power. Imagine a hypothetical situation where every fediverse instance used one of a small number of blocklists that had been approved by a central authority. That would give so much power to the central authority and the blocklist curator(s) that it would almost certainly be a recipe for disaster.

And even in less extreme situations, blocklists can centralize power. In the email world, for example, blocklists have contributed to a situation where, despite a decentralized protocol, a very small number of email providers have over 90% of the installed base. You can certainly imagine large providers using a similar market-dominance technique in the fediverse. Even today, I've seen several people suggest that people should choose large instances like mastodon.social which many regard as "too big to block." And looking forward, what happens if and when Facebook parent company Meta's new Threads product adds fediverse integration?

Then again, blocklists can also counter other centralizing market-dominance tactics. Earlier this year, for example, Mastodon BDFL (Benevolent Dictator for Life) Rochko changed the onboarding of the official release to sign newcomers up by default to mastodon.social, the largest instance in the fediverse – a clear example of centralization, especially since Rochko is also CEO of Mastodon gGmbH, which runs mastodon.social. One of the justifications for this is that otherwise newcomers could wind up on an instance that doesn't block known bad actors and have a really horrible experience. Broad adoption of "worst-of-the-worst" blocklists would provide a decentralized way of addressing this concern.

For that matter, broader adoption of blocklists that silence or block mastodon.social could directly undercut Mastodon gGmbH's centralizing power in a way that was never tried (and is no longer feasible) with Gmail. The FediPact – hundreds of instances agreeing to block the hell out of any Meta instances – is another obvious example of using a blocklist to counter dominance.

Today's fediverse relies on instance blocking and blocklists

It would be great if Mastodon and other fediverse software had other good tools for dealing with harassment and abuse to complement instance-level blocking – and, ideally, reduce the need for blocklists.

But it doesn't, at least not yet.

That needs to change, and in an upcoming installment I'll talk about some straightforward short-term improvements that could have big impact. Realistically, though, it's not going to change overnight – and a heck of a lot of people want alternatives to Twitter right now.

So despite the costs of instance-level blocking, and the potential harms of blocklists, they're the only currently-available solution for dealing with the hundreds of Nazi instances – and thousands of weakly-moderated instances, including some of the biggest, where moderators frequently don't take action on racist, anti-Semitic, anti-Muslim, and other bigoted content. As a result, today's fediverse is very reliant on them.

Steps towards better instance blocking and blocklists

"Notify users when relationships (follows, followers) are severed, due to a server block, display the list of impacted relationships, and have a button to restore them if the remote server is unblocked"

– Mastodon CTO Renaud Chaput, discussing the tentative roadmap for the upcoming Mastodon 4.3 release

Since the fediverse is likely to continue to rely on instance blocking and blocklists at least for a while, how can they be improved? Mastodon 4.3's planned improvements to instance blocking are an important step. Improvements in the announcements feature (currently a "maybe" for 4.3) would also make it easier for admins to notify people about upcoming instance blocks. Hopefully other fediverse software will follow suit.

Another straightforward improvement along these lines would be an option to have new federation requests initially accepted in "limited" mode. By reducing exposure to racist content, this would likely reduce the need for blocking.

For blocklists themselves, one extremely important step is to let new instance admins know that they should consider initially blocking worst-of-the-worst instances (and their members are likely to get hit with a lot of abuse if they don't) and offering them some choices of blocklists to use as a starting point. This is especially important for friends-and-family instances which don't have paid admins or moderators. Hosting companies play a critical role here – especially if Mastodon, Lemmy, and other software platforms continue not to support this functionality directly (although obviously it would be better if they do!)

Since people from marginalized communities are likely to face the most harm from blocklist abuse, involvement of people from different marginalized communities in creating and reviewing blocklists is vital. One obvious short-term step is for blocklist curators – and instance admins whose blocklists are used as inputs to aggregated blocklists – to provide reasons instances are on the list, check to see where receipts exist (and potentially provide access to them, at least in some circumstances), re-calibrate suspension vs. silencing in some cases, and so on. Independent reviews are likely to catch problems that a blocklist creator misses, and an audit trail of bias and accuracy reviews could make it much easier for instances to check whether a blocklist has known biases and mistakes before deploying it. Of course, this is a lot of work; asking marginalized people to do it as volunteers is relying on free labor, so who's going to pay for it?

A few other directions worth considering:

- More nuanced control over when to automatically apply blocklist updates could limit the damage from bugs or mistakes

- Providing tags for the different reasons that instances are on blocklists could make it much easier for admins to use blocklists as a starting point, for example by distinguishing between instances that are on a blocklist for racist and anti-trans harassment from instances that are there only because of CW or bot policies that the blocklist curator considers overly lax.

- Providing some access to receipts and an attribution trail of who has independently decided an instance should be blocked could help admins and independent reviewers make better judgments about which blocklist entries they agree with. As discussed above, receipts are a complicated topic, and in many situations may be only partial and/or not broadly shareable; but as Seirdy’s FediNuke.txt list shows, there are quite a few situations where they are likely to be available.

- Shifting to a view of a blocklist as a collection of advisories or recommendations, and providing tools for instances to better analyze them, could help mitigate harm in situations where biases do occur. Emelia Smith's work in progress on FIRES (Fediverse Intelligence Recommendations & Replication Endpoint Server) is a valuable step in this direction.

- Learning from experiences with email blocking and IP blocking – and, where possible, building on infrastructure that already exists
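The tagging and receipts ideas above can be sketched as a blocklist entry that carries its reasons and any shareable receipts, so an admin can adopt only the entries whose reasons match their own instance's norms. The field names, the tag vocabulary, and the "adopt if any chosen tag matches" rule are all hypothetical; no existing blocklist format is implied.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Entry:
    """One blocklist entry; field names and tag vocabulary are hypothetical."""
    domain: str
    action: str  # "silence" or "suspend"
    reasons: frozenset[str] = field(default_factory=frozenset)
    receipts: tuple[str, ...] = ()  # links documenting incidents, when shareable


def select_entries(entries: list[Entry], adopt_tags: set[str]) -> list[Entry]:
    """Keep only entries with at least one reason tag the admin has chosen to adopt."""
    return [e for e in entries if e.reasons & adopt_tags]


# Made-up entries for illustration.
entries = [
    Entry("harassers.example", "suspend",
          frozenset({"racist-harassment", "anti-trans-harassment"}),
          ("https://receipts.example/123",)),
    Entry("lax-cw.example", "silence", frozenset({"lax-cw-policy"})),
    Entry("unlabeled-bots.example", "silence", frozenset({"lax-bot-policy"})),
]

adopted = select_entries(entries, {"racist-harassment", "anti-trans-harassment"})
print([e.domain for e in adopted])
```

Here an admin who only wants to act on harassment gets harassers.example and skips the two entries that are on the list for CW or bot policy reasons.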

Algorithmic systems tend to magnify biases, so "consensus" blocklists require extra scrutiny. Algorithmic audits (a structured approach to detecting biases and inaccuracies) are one good way to reduce risks – although again, who's going to pay for it? Adding elements of manual curation (by an intersectionally-diverse team of people from various marginalized perspectives) could also be helpful. Hrefna has some excellent suggestions as well, such as preprocessing inputs to add additional metadata and treating connected sources (for example blocklists from instances with shared moderators) as a single source. And there are a lot of algorithmic justice experts in the fediverse, so it's also worth exploring different anti-oppressive algorithms specifically designed to detect and reduce biases.
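The suggestion of treating connected sources as a single source could be implemented in many ways. One possible approach, sketched below, assumes the aggregator knows which moderator accounts each source has (hypothetical data). It merges sources that share a moderator, directly or through a chain, using a small union-find, and then counts each merged group only once per domain. This is an illustration of the idea, not Hrefna's design.

```python
from collections import Counter


def group_sources(moderators: dict[str, set[str]]) -> dict[str, str]:
    """Map each source to a representative source of its group.

    Sources that share at least one moderator (directly or through a chain of
    shared moderators) end up in the same group, using a small union-find.
    """
    parent = {source: source for source in moderators}

    def find(source: str) -> str:
        while parent[source] != source:
            parent[source] = parent[parent[source]]  # path halving
            source = parent[source]
        return source

    first_source_for: dict[str, str] = {}  # moderator -> first source seen with them
    for source, mods in moderators.items():
        for mod in mods:
            if mod in first_source_for:
                parent[find(source)] = find(first_source_for[mod])
            else:
                first_source_for[mod] = source
    return {source: find(source) for source in moderators}


def independent_counts(blocklists: dict[str, set[str]], groups: dict[str, str]) -> Counter:
    """Count, for each domain, how many independent groups of sources block it."""
    merged: dict[str, set[str]] = {}
    for source, domains in blocklists.items():
        merged.setdefault(groups[source], set()).update(domains)
    counts: Counter = Counter()
    for domains in merged.values():
        counts.update(domains)
    return counts


# Hypothetical data: sources "a" and "b" share a moderator, "c" is independent.
moderators = {"a": {"mod1"}, "b": {"mod1", "mod2"}, "c": {"mod3"}}
blocklists = {"a": {"x.example"}, "b": {"x.example", "y.example"}, "c": {"y.example"}}

groups = group_sources(moderators)
counts = independent_counts(blocklists, groups)
print(counts["x.example"], counts["y.example"])
```

x.example appears on two source lists but only counts once, because both lists come from the same moderation team; y.example still counts twice. A consensus threshold of 2 applied to these counts would include y.example but not x.example.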

Of course, none of these approaches are panaceas, and they’ve all got complexities of their own. When trying to analyze a blocklist for bias against trans people, for example, there's no census of the demographics of instances in the fediverse, so it's not clear how to determine whether trans-led instances are overrepresented. The outsized role of large instances like mastodon.social that are sources of a lot of racism (etc.) is another example; if a blocklist doesn't block mastodon.social, does that mean it's inherently biased against Black people? When looking at whether a blocklist is biased against Jews or Muslims, whose definitions of anti-Semitism get used? What about situations where differing norms (for example whether spamming #FediBlock is grounds for defederation, or whether certain jokes are racist or just good clean fun) disproportionately affect BIPOC and/or trans people?

Which brings us back to a point I made earlier:

"It would be great if Mastodon and other fediverse software had other good tools for dealing with harassment and abuse to complement instance-level blocking – and, ideally, reduce the need for blocklists."

Part 3

It’s possible to talk about The Bad Space without being racist or anti-trans – but it’s not as easy as it sounds

The problem The Bad Space is focusing on is certainly a critical one – as the widespread racist and anti-trans bigotry and harassment in the messy discussions of The Bad Space over the last few months highlights. And Ro's certainly got the right background to work on fediverse safety tools. As well as coding skills and years of experience in the fediverse, he and Artist Marcia X were admins of Play Vicious, which as one of the few Black-led instances in the fediverse was the target of vicious harassment until it shut down in 2020. And The Bad Space's approach of designing from the perspective of marginalized communities is a great path to creating a fediverse that's safer and more appealing for everybody (well, except for harassers, racists, fascists, and terfs – but that's a good thing). As Afsaneh Rigot says in Design From the Margins:

"After all, when your most at-risk and disenfranchised are covered by your product, we are all covered."

The Bad Space is still at an early stage, and like all early-stage software has bugs and limitations. Many of the sites listed don't have descriptions; some of the descriptions may be out-of-date; there's no obvious appeals process for sites that think they shouldn't be listed. A mid-September bug led to some instances being listed by mistake, and the user interface at the time didn't provide any information about whether instances were limited (aka silenced) or suspended (aka defederated) by other instances. The bug's been fixed, the UI's been improved ... but of course there may well be other false positives, bugs, etc etc etc. It's software!

Still, The Bad Space is useful today, and has the potential to be the basis of other useful safety tools – for Mastodon and the rest of the fediverse,2 and potentially for other decentralized social networks as well. Many people did find ways to have productive discussions about The Bad Space without being racist or anti-trans, highlighting areas for improvement and potential future tools. So from that perspective quite a few people (including me) see it as off to a promising start.

Then again, opinions differ. For example, some of the posts I'll discuss in the next installment of this series (tentatively titled Racialized disinformation and misinformation: a fediverse case study) describe The Bad Space as "pure concentrated transphobia" that "people want to hardcode into new Mastodon installations" in a plot involving somebody who's very likely a "right wing troll" working with an "AI art foundation aiming to police Mastodon" as part of a "deliberate attempt to silence LGBTQ+ voices."

Alarming if true!

"[D]isinformation in the current age is highly sophisticated in terms of how effectively a kernel of truth can be twisted, exaggerated, and then used to amplify and spread lies."

– Shireen Mitchell of Stop Online Violence Against Women, in Disinformation: A Racist Tactic, from Slave Revolts to Elections

Another way to look at it ...

At the same time, the messy discussions around The Bad Space are also a case study of the resistance in today's fediverse to technology created by a Black person (working with a diverse team of collaborators that includes trans and queer people) that allows people to better protect themselves.3

Many people misleadingly describe reactions to The Bad Space in terms of tensions between trans people and Black people – and coincidentally enough that's how some of the racialized disinformation and misinformation I'll be writing about in the next installment frames it, too.4 That's unfortunate in many ways. For one thing, Black trans people exist. Also, the "Black vs. trans" framing ignores the differences between trans femmes, trans mascs, and agender people. And the alternate framing of tensions between white trans femmes and Black people is no better, erasing trans people who are neither white nor Black,5 multiracial trans people, white agender people, white trans mascs (and many others) while ignoring the impact of cis white people, colorism, ableism, etc etc etc.

Besides, none of these communities are monolithic. There's a range of opinion on The Bad Space in Black communities, trans communities, and different intersectional perspectives (white trans femmes, disabled trans people) – a continuum between some people helping create it and/or actively supporting it, others strongly opposing it, with many somewhere in between.

More positively, though, these dynamics – and the racist and anti-trans language in the discussions about The Bad Space – also make it a good case study of how cis white supremacy creates wedges (and the appearance of wedges) between and within marginalized groups – and erases people at the intersections, leaving them to fall through the cracks. And with luck it'll also turn out to be a larger case study about how anti-racist and pro-LGBTAIQ2S+ people work together as part of an intersectional coalition to create something that's very different from today's fediverse.

The fediverse's technology problems do need to be fixed, and I'll return to that in an upcoming installment in this series. But if the fediverse – or a fork of today's fediverse – is going to move forward in an anti-racist and pro-LGBTAIQ2S+ direction, understanding these dynamics is vital. And whether or not the fediverse moves forward and fixes its technology problems ... well, understanding these dynamics is vital for whatever comes next, because these same problems occur on every social network platform.

The Bad Space and FSEP

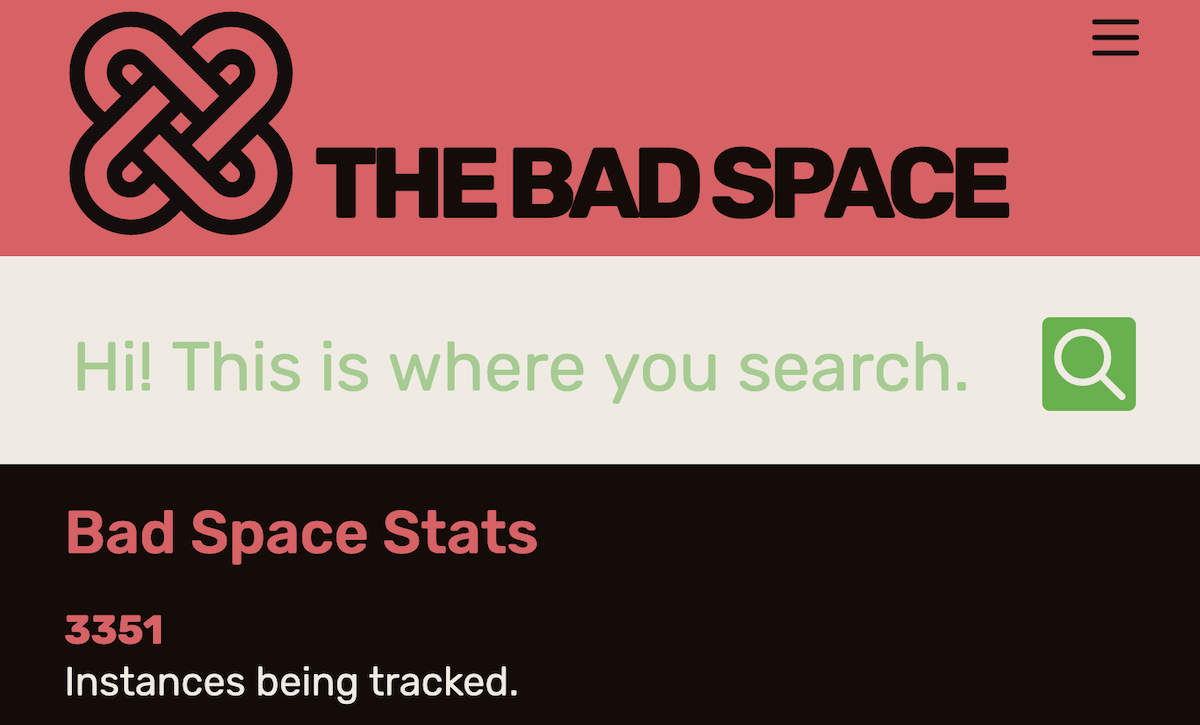

The work-in-progress version of The Bad Space's web site (currently at tweaking.thebad.space) provides a web interface that makes it easy to look up an instance to see whether concerns have been raised about its moderation, and an API (application programming interface) making the information available to software as well. The Bad Space currently has over 3300 entries – roughly 14% of the 24,000+ instances in today's fediverse. Entries are added using the blocklists of multiple sources as input. Here's how the about page describes it:

"The Bad Space is a collaboration of instances committed to actively moderating against racism, sexism, heterosexism, transphobia, ableism, casteism, or religion.

These instances have permitted The Bad Space to read their respective blocklists to create a composite directory of sites tagged for the behavior above that can be searched and, through a public API, can be integrated into external services."

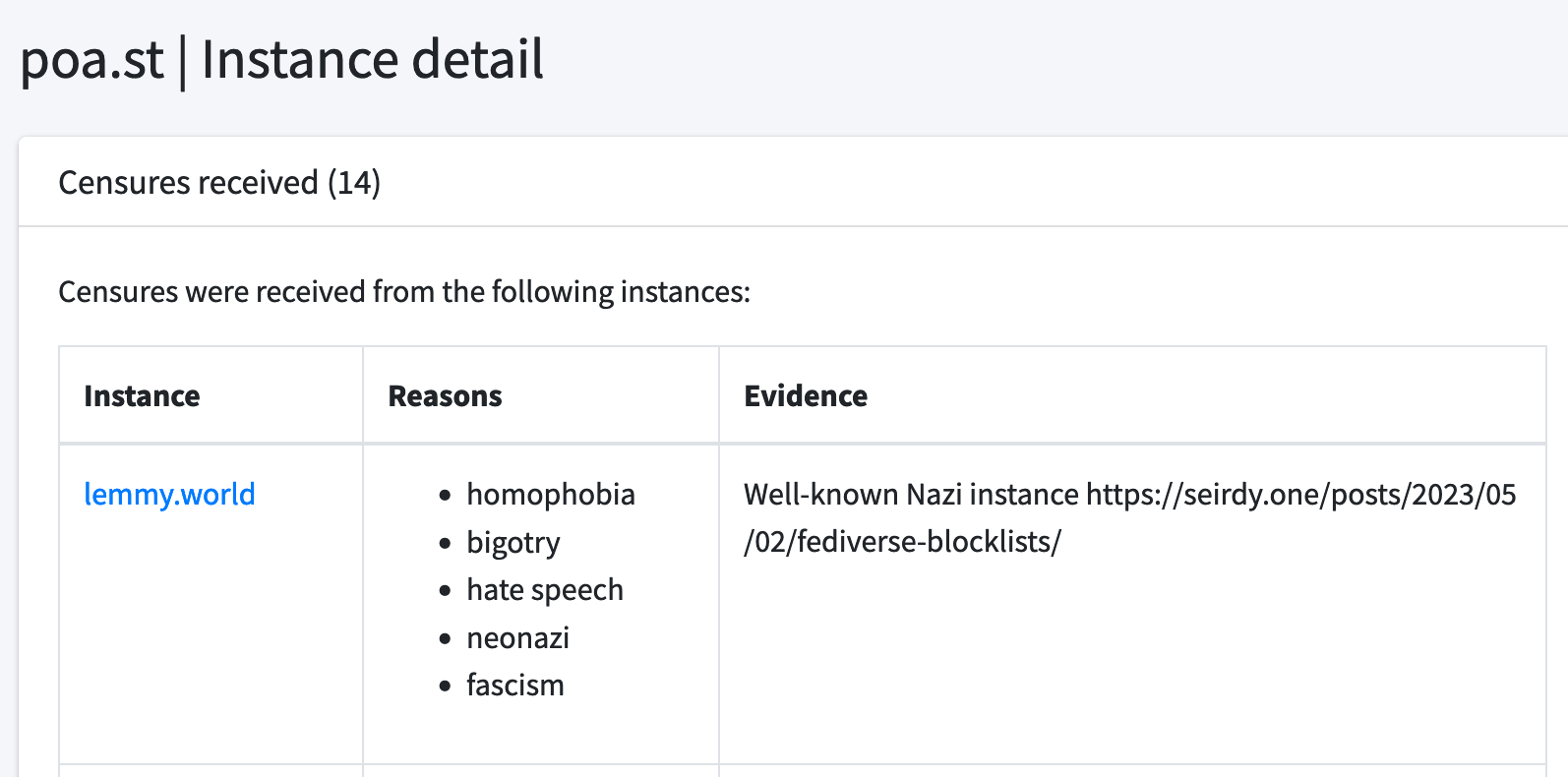

An instance appears on The Bad Space if it's on the blocklists of at least two of the sources. The web interface and API make it easy to see how many sources have silenced or defederated an instance. Other information potentially available for each instance includes a description and links to screens for receipts; screens are not currently available on the public site, and only a subset of instances currently have descriptions. Here's what the page for one well-known bad actor looks like.

The Bad Space also provides downloadable "heat rating" files, showing instances that have been acted on by some percentage of the sources. As of late November October, the 90% heat rating lists 131 instances, and has descriptions for the vast majority. The 50% heat rating lists 650+, and the 20% heat rating (2 or more) has over 1600.
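The inclusion rule and heat ratings described above can be sketched in a few lines. This is an illustration under stated assumptions, not The Bad Space's code: it assumes each instance's record is a mapping from source to action, treats silencing and suspending the same way when computing the percentage, and uses an "at least N percent" cutoff. The real service's data format and rounding may differ, and the domains and the choice of ten sources are made up.

```python
def heat_percent(actions: dict[str, dict[str, str]], num_sources: int) -> dict[str, float]:
    """Percentage of sources that have silenced or suspended each instance.

    `actions` maps an instance domain to {source name: "silence" or "suspend"};
    this data format is an assumption for illustration.
    """
    return {domain: 100 * len(by_source) / num_sources for domain, by_source in actions.items()}


def listed(actions: dict[str, dict[str, str]], min_sources: int = 2) -> set[str]:
    """Instances acted on by at least `min_sources` sources."""
    return {domain for domain, by_source in actions.items() if len(by_source) >= min_sources}


def heat_list(actions: dict[str, dict[str, str]], num_sources: int, min_percent: int) -> list[str]:
    """Instances acted on by at least `min_percent` percent of the sources.

    Compares integers (count * 100 vs. percent * sources) to avoid float rounding.
    """
    return sorted(
        domain for domain, by_source in actions.items()
        if len(by_source) * 100 >= min_percent * num_sources
    )


# Hypothetical data for ten sources (s0 ... s9).
actions = {
    "worst.example": {f"s{i}": "suspend" for i in range(9)},
    "mixed.example": {"s0": "suspend", "s1": "silence", "s2": "silence", "s3": "silence", "s4": "suspend"},
    "edge.example": {"s0": "silence", "s1": "silence"},
    "single.example": {"s0": "silence"},
}

for pct in (90, 50, 20):
    print(pct, heat_list(actions, num_sources=10, min_percent=pct))
print(sorted(listed(actions)))
```

In this made-up data, single.example (one source) is not listed at all, edge.example only shows up at the 20% level, and only worst.example makes the 90% list. Keeping the per-source actions, rather than just a count, is what lets an interface show how many sources silenced versus suspended an instance.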

FSEP

The Federation Safety Enhancement Project (FSEP) requirements document (authored by Ro, and funded by Nivenly Foundation via a direct donation from Nivenly board member Mekka Okereke) provides a couple of examples of how The Bad Space could be leveraged to improve safety on the fediverse.8 One is a tool that gives individual users the ability to vet incoming connection requests to validate that they are not from problematic sites.

This matters from a safety perspective because if somebody follows you they can see your followers-only posts. People who value their privacy – and/or are the likely targets of harassment or hate speech and don't want to let just anybody follow them – can turn off the "Automatically accept new followers" option in their profile if they're using the web interface (although Mastodon's default app doesn't allow this, another great example of Mastodon not giving people tools to protect themselves). FSEP goes further by providing users with information about the instance the follow request is coming from – useful information if they're coming from an instance that has a track record of bad behavior.
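A minimal sketch of the kind of follow-request check FSEP describes might look like the following. It only annotates a pending request with what is known about the requester's instance, and leaves the accept or reject decision to the user. The lookup table stands in for data from The Bad Space or another source; it is not The Bad Space's real API, and the field names and domains are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class InstanceInfo:
    """What a lookup service might report about an instance (hypothetical fields)."""
    silenced_by: int
    suspended_by: int
    description: str = ""


def domain_of(handle: str) -> Optional[str]:
    """Return the domain of a handle like '@user@example.social', or None for a local '@user'."""
    parts = handle.lstrip("@").split("@")
    return parts[1].lower() if len(parts) == 2 else None


def vet_follow_request(handle: str, lookup: dict[str, InstanceInfo]) -> str:
    """Annotate a pending follow request; accepting or rejecting it stays up to the user."""
    domain = domain_of(handle)
    if domain is None:
        return "local account"
    info = lookup.get(domain)
    if info is None:
        return f"{domain}: no concerns recorded"
    note = f"{domain}: silenced by {info.silenced_by} and suspended by {info.suspended_by} sources"
    return f"{note} ({info.description})" if info.description else note


# Stand-in for data from The Bad Space or another source; not its real API or data.
lookup = {"harassers.example": InstanceInfo(silenced_by=2, suspended_by=7, description="made-up entry")}

for handle in ("@troll@harassers.example", "@friend@kind.example", "@neighbor"):
    print(vet_follow_request(handle, lookup))
```

Because the check only adds information to the approval queue, a false positive in the lookup data costs the user a moment of extra thought rather than a silently severed connection.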

The other tools discussed in FSEP help admins manage blocklists, and address weaknesses in current fediverse support for blocklists.9 As Blocklists in the fediverse discusses at length, blocklists can have significant downsides; but in the absence of other good tools for dealing with harassment and abuse, the fediverse currently relies on them. FSEP's "following UI" is a great example of the kind of tool that complements blocklists, but the need for blocklists isn't likely to go away anytime soon.

While FSEP's proposed design allows blocklists from arbitrary sources, the implementation plan proposed initially using The Bad Space to fill that role in the minimum viable product (MVP).10 But sometimes products never get beyond the MVP. So what would happen if FSEP gets implemented, and then adopted as a default by the entire fediverse, and never gets to the stage of adding another blocklist?

Realistically, of course, there's no chance this will happen. The Bad Space includes mastodon.social on its default blocklist – and mastodon.social is run by Mastodon gGmbH, who also maintains the Mastodon code base. Mastodon's not going to adopt a default blocklist that blocks mastodon.social, and Mastodon is currently over 80% of the fediverse. So The Bad Space isn't going to get adopted as a default by the entire fediverse.

But what if it did?????????

A bug leads to messy discussions, some of which are productive

The alpha version of The Bad Space had been available for a while (I remember looking up an instance on it early in the summer after an unpleasant interaction with a racist user), and the conversations about it were relatively positive. The FSEP requirements doc was published in mid-August, and got some feedback, but there wasn't a lot of broad discussion of it. That all changed after the mid-September bug, which led to dozens of sites temporarily getting listed on The Bad Space by mistake – including girlcock.club (an instance run by trans women for trans folk) and tech.lgbt.

Of course when it was first noticed nobody knew it was a bug. And other trans- and queer-friendly sites also appeared on The Bad Space. Especially given the ways Twitter blocklists had impacted the trans community, it's not surprising that this very quickly led to a lot of discussion.

Even though nobody was using The Bad Space as a blocklist at the time, the bug certainly highlights the potential risks of using automatically-generated blocklists without double-checking. If an instance had been using "every instance listed on The Bad Space" as a blocklist, then thousands of connections would have been severed without notice – and instance-level defederation on Mastodon currently doesn't allow connections to be re-established if the defederation happens by mistake, so it would have been hard to recover.

Sometimes, messiness can be productive

Ro quickly acknowledged the bug and started working on a fix. A helpful admin quickly connected with the source that had limited girlcock.club because of "unfortunate branding" and resolved the issue – and the source was removed from The Bad Space's "trusted source" list. A week later Ro deployed a new version of the code that fixed the bug, and soon after that implemented UI improvements that provide additional information about how many of the sources have taken action against a specific instance and which actions have been taken.

From a software engineering perspective, this kind of messiness can be productive. Many people found ways to raise questions about, criticisms of, and suggestions for improvements to FSEP and The Bad Space without saying racist or anti-trans things. dclements' detailed questions on GitHub are an excellent example, and I saw lots of other good discussion in the fediverse as well.

Discussions about bugs can highlight patterns10 and point to opportunities for improvements. For example, bugs aren't the only reason that entries are likely to appear on blocklists by mistake; other blocklists have had similar problems. What's a good appeal process? How can the impact of mistakes be reduced? In a GitHub discussion, Rich Felker suggested that blocklist management tools should have safeguards to prevent automated block actions from severing relationships without notice. If something like that is implemented, it'll help people using any blocklist.
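One possible shape for the safeguard Rich Felker suggested is sketched below: before applying an automated blocklist update, compute which existing follow relationships each new block would sever, apply only the blocks that sever nothing, and hold the rest for an admin to review. This is an illustrative sketch, not his proposal's actual design or Mastodon's code; the data structures and domains are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class UpdatePlan:
    apply_now: list[str] = field(default_factory=list)
    # domain -> (local account, remote account) follow pairs the block would sever
    needs_review: dict[str, list[tuple[str, str]]] = field(default_factory=dict)


def plan_blocklist_update(new_blocks: set[str], follows: list[tuple[str, str]]) -> UpdatePlan:
    """Split a blocklist update into blocks that sever nothing and blocks held for review.

    `follows` holds (local_account, remote_account) pairs in either direction, with
    remote accounts written as 'user@domain'; this format is an assumption.
    """
    affected: dict[str, list[tuple[str, str]]] = {}
    for local, remote in follows:
        domain = remote.rsplit("@", 1)[-1].lower()
        if domain in new_blocks:
            affected.setdefault(domain, []).append((local, remote))

    plan = UpdatePlan()
    for domain in sorted(new_blocks):
        if domain in affected:
            plan.needs_review[domain] = affected[domain]
        else:
            plan.apply_now.append(domain)
    return plan


# Hypothetical update in which one entry was added by mistake.
new_blocks = {"nazis.example", "queer-friendly.example"}
follows = [("alice", "bob@queer-friendly.example"), ("carol", "dana@queer-friendly.example")]

plan = plan_blocklist_update(new_blocks, follows)
print(plan.apply_now)
print({domain: len(pairs) for domain, pairs in plan.needs_review.items()})
```

With a rule like this, an entry that shows up because of a bug, as in the mid-September incident, would be surfaced for review along with the relationships at stake instead of being applied silently. The trade-off is that a genuinely bad instance with some existing followers also waits for a human decision.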

But as interesting as the software engineering aspects are to some of us, much more of the discussion focused on the question of whether the presence of multiple queer and trans instances on The Bad Space reflected bias – or transphobia. As Steps towards better blocklists discusses, these kinds of questions are important to take into account on any blocklist – just as biases against Black, Indigenous, Muslim, Jewish, and disabled people need to be considered (as well as intersectional biases). It's a hard question to answer!

In some alternate universe this too could have been a mostly productively messy discussion. It really is a hard problem – and not just for blocklists, for recommendation systems in general – and the fediverse is home to experts in algorithmic analysis and bias like Timnit Gebru and Alex Hanna of the Distributed AI Research Institute, Damien P. Williams, and Emily Bender, as well as lots of people with a lot of first-hand experience.

But alas, in this universe today's fediverse is not particularly good at having discussions like this.

Sometimes messiness is just messy

It might still turn out that this part of this discussion becomes productive ... for now let's just say the jury's out. It sure is messy though. For example:

- racists (including folks who have been harassing Ro and other Black people for years) taking the opportunity to harass Ro and other Black people

- a post that tech.lgbt's Retrospective On thebad.space Situation describes as being made in a state of "unfettered panic" left out "various critical but obscure details" and "unwittingly provided additional credibility" for the racist harassment campaign.

- waves of anti-Black and anti-trans language – and racialized disinfo and racialized misinfo – sweeping through the fediverse.

- a distributed denial of service (DDOS) attack targeting Ro's site and The Bad Space.

- instances (including some led by trans and queer people) that saw The Bad Space as a self-defense tool for Black people, and saw Ro and other Black people as the targets of racist harassment, defederating from instances where moderators and admins allowed, participated in, or enabled the attacks – which people who saw The Bad Space as "pure concentrated transphobia" regarded as unjust and as an attempt to isolate trans and queer people.

It still isn't clear who was behind the DDOS attack and the anonymous and pseudonymous racialized disinfo. For the DDOS, my guess is that it was some of the people who harassed Play Vicious in the past and/or nazis, terfs, and white supremacists trying to drive wedges between Black people and trans people and block an effort to reduce racism in the fediverse. With the DDOS attack, for example, they were probably hoping that Black supporters of The Bad Space would blame trans critics, and the trans critics would blame Ro or his supporters for a "false flag" operation to garner sympathy for his cause. But who knows, maybe it really was over-zealous critics – or channers doing it for the lulz.

Nobody's perfect in situations like this

In fraught and emotional situations like this, it's very easy for people to say things that echo racist and/or transphobic stereotypes and dogwhistles, come from a place of privilege and entitlement, embed double standards, or reflect underlying racist assumptions that so many of us (certainly including me!) have absorbed without realizing.

The anti-trans language I saw came from a variety of sources: supporters of The Bad Space making statements about trans critics or trans people in general, critics attacking (or erasing) trans supporters, and opportunistic anti-trans bigots. Calling white trans people out on their racism isn't anti-trans; using dogwhistles, stereotypes, or other problematic language is. Suggesting that trans supporters of The Bad Space are ignoring the potential harms to trans people isn't anti-trans; suggesting that trans people who support The Bad Space "aren't really trans" (or implying that no trans people support The Bad Space) is, and so is intentionally misgendering or calling somebody a "theyfab".

By contrast, the anti-Black language I saw was primarily from people opposing The Bad Space (including impersonation of a supporter) – although opportunistic racist bigots got involved as well. Some of it was blatant, although not necessarily intentional: stereotypes, amplifying false accusations, dogwhistles and veiled slurs. Another common form was criticism of The Bad Space that didn't acknowledge (or just paid lip service to) Black people's legitimate need to protect themselves on the fediverse, reflecting a racist societal assumption that Black people's safety has less value than white people's comfort. Black people (including Black trans, queer, and non-binary people) have been the target of vicious harassment for years on Mastodon and the fediverse, so no matter the intent, accusations that Ro or The Bad Space are causing division and hate ignore the fediverse's long history of whiteness and racism – and blame a Black person or Black-led project.

And some people managed to be anti-Black and anti-trans simultaneously. One especially clear example: a false accusation about a Black trans person, inaccurately claiming they had gotten a white person fired for criticizing The Bad Space.11 A false accusation against a Black person is anti-Black; a false accusation against a trans person is anti-trans; a false accusation against a Black trans person is both.

Just as in past waves of intense anti-Blackness in the fediverse, one incident built on another. The false accusation occurred after weeks of anti-Black harassment by multiple people on the harasser's instance (with the admin refusing to take action). When the Black trans person who had been falsely accused made a bluntly worded post warning the harassers to knock it off or there would be consequences, the harassers described it as an unprovoked death threat – and demanded that other Black and trans people criticize the Black trans person who was trying to end the harassment. It's almost like they think Black trans people don't have the right to protect themselves! And Black people are stereotypically associated with danger and violence, so it's not surprising many who only saw the decontextualized claim of a "death threat" assumed it was the whole story and proceeded to amplify the anti-Black, anti-trans framing.

Nobody's perfect in situations like this, and while some people making unintentionally unfortunate anti-trans or anti-Black statements acknowledged the problems and apologized, you can't unring a bell. And many didn't acknowledge the problems or apologize, or apologized but continued making unfortunate statements, at which point you really have to question just how "unintentional" they were.

These discussions aren't occurring in a vacuum

"Until we admit that white queers were part of the initial Mastodon 'HOA' squad that helped run the initial Black Twitter diaspora off the site - and are also complicit in the abuse targeted at the person in question here - we're not going to make real progress."

– Dana Fried, September 13

The fediverse has a long history of racism, and of marginalizing trans people. For some of it, see Dr. Johnathan Flowers' The Whiteness of Mastodon, or my posts Dogpiling, weaponized content warning discourse, and a fig leaf for mundane white supremacy; The patterns continue ...; and Ongoing contributions – often without credit. And some of the racism has come from white queer and trans people. Margaret KIBI's The Beginnings, for example, describes how, soon after content warnings (CWs) were first introduced in 2017,

"this community standard was weaponized, as white, trans users—who, for the record, posted un‐CWed trans shit in their timeline all the time—started taking it to the mentions of people of colour whenever the subject of race came up."

And the fediverse is still dealing with the aftermath of the Play Vicious episode. Here's how weirder.earth's Goodbye Playvicious.social statement from early 2021 describes it:

"Playvicious was harrassed off the Fediverse.... The Fediverse is anti-Black. That doesn't mean every single person in it is intentionally anti-Black, but the structure of the Fediverse works in a way that harrassment can go on and on and it is often not visible to people who aren't at the receiving end of it. People whom "everyone likes" get away with a ton of stuff before finally *some* instances will isolate their circles. Always not all. And a lot of harrassment is going on behind the scenes, too."

As artist Marcia X says in Ecosystems of Abuse:

“Misgendering, white women/femmes making comments to Black men that make them uncomfortable, the politics of white passing people as they engage with darker folks, and slurs as they are used intracommunally—these are not easy topics but at some point, they do become necessary to discuss.”

Also: Black trans, queer, and non-binary people exist

"[T]he Black vs. Trans argument really pisses me off because it erases Black Trans people like me, but is also based on the assumption of Trans whiteness"

– Terra Kestrel, September 12

Black trans, queer, and non-binary people are at the intersection of the fediverse's long history of racism and of marginalizing trans people – as well as marginalization within queer communities and in society as a whole. One way this marginalization manifests itself is by erasure. How many of the people criticizing The Bad Space as anti-trans, or framing the discussions as pitting Black people's need to protect themselves against trans people's concern about being targeted, even acknowledged the existence of Black trans people?

"I think if you included Black trans folk and anti-racist white trans folk in your list of "trans folk to listen to," you'd have a more complete picture, and you'd understand why there are so few Black people on the Fediverse."

– Mekka Okereke, September 13

Black trans, queer, and non-binary people have lived experience with anti-Blackness as well as transphobia. They also have lived experience with intersectional and intra-community oppressions: racism and transphobia in LGBTQIA2S+ communities, transphobia and colorism in Black communities. And they're the ones who are most affected by transphobia in society. For example, trans people in general face a significantly higher risk of violence – and over 50% of the trans people killed each year are Black. Trans people on average don't live as long as cis people – and Black trans and non-binary people are significantly more likely to die than white trans and non-binary people.

Of course, no community is monolithic, and there are a range of opinions on Ro and The Bad Space from Black trans, queer, and non-binary people. That said, most if not all the Black trans, queer, and non-binary people I've seen expressing opinions publicly have expressed support for Ro and his efforts with The Bad Space, while also acknowledging the need for improvements.

"To be completely honest, any discussions about transphobia and the recent meta?

That has to come from Black trans and nonbinary folks."

– TakeV, September 16

Notes

2 Today, Mastodon has by far the largest installed base in the fediverse but (as I'll discuss in the upcoming Mastodon: the partial history continues) is likely to lose its dominant position. Other platforms like Streams, Akkoma, Bonfire, and GoToSocial have devoted more thought to privacy and other aspects of safety, and big players are getting involved – like WordPress, which now offers official support for ActivityPub.

That said, The Bad Space and FSEP also fit in well with the architectural vision new Mastodon CTO Renaud Chaput sketches in Evolving Mastodon’s Trust & Safety Features, so they will be useful for instances running Mastodon as well. Mastodon – or a fork – has an opportunity to complement these tools by giving individuals more ability to protect themselves, shifting to a "safety by default" philosophy, adding quote boosts, and integrating ideas from other decentralized networks like BlackSky.

3 From this perspective, the reaction to The Bad Space is an interesting complement to the multi-year history of Mastodon's refusal to support local-only posts, a safety tool created by trans and queer people that allows trans and queer people (and everybody else) to better protect themselves. Hey wait a second, I'm noticing a pattern here! Does Mastodon really prioritize stopping harassment? has more -- although note that the Glitch and Hometown forks of Mastodon, and most other fediverse platforms, do support local-only posts.

4 OK, it's not really coincidental. As Disinfo Defense League points out, using wedge issues to divide groups is a common tactic of racialized disinformation.

5 Thanks to Octavia con Amore for pointing out to me the erasure of trans people who are neither white nor Black from so many of these discussions.

6 There is currently no footnote #6 or #7. Ghost, the blogging/newsletter software I'm using, doesn't have a good solution for auto-numbering footnotes unless you write your whole post in Markdown, and it's a huge pain to manually edit them, so my footnotes often wind up very strange-looking.

8 Nivenly's response in the GitHub discussion of the FSEP proposal, from mid-September, has a lot of clarifications and context on FSEP, and Nivenly's October/November update has the current status of FSEP:

Unfortunately due to a few factors, including the unexpected passing of our founder Kris Nóva days after the release of the product requirements document as well as the author and original maintainer Ro needing to take a step back due to a torrent of racism that he received over The Bad Space, the originally planned Q&A that was supposed to happen shortly after FSEP was published did not have the opportunity to happen.... The status of this project is on hold, pending the return of either the original maintainer or a handoff to a new one.

9 From the FSEP document:

- Allowing blocklists to be automatically imported during onboarding dramatically reduces the opportunity to be exposed to harmful content unnecessarily.

- The ability to request an updated blocklist or automate the process to check on its own periodically.

- Expand blocklist management by listing why a site is blocked, access to available examples, and when the site was last updated.

These all address points Shepherd characterizes in The Hell of Shared Blocklists as "vitally important to mitigate harms".

10 For example: The Bad Space's mid-September bug resulted in instances getting listed on The Bad Space if even one of its sources had taken action against them; the correct behavior is that only instances that two or more sources have taken action against should be listed. So the similarity of the bug's effect on girlcock.club and tech.lgbt to the issue with Oliphant's now-discontinued unified max list that Seirdy discusses in Mistakes made reinforces that blocklist "consensus" algorithms that only require a single source are likely to affect sex-positive queer spaces, so they should be avoided.
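To make the threshold concrete, here's a minimal Python sketch of a "consensus" count, assuming a simplified model in which each source contributes a set of instances it has taken action against. The function name listed_instances, the source names, and the instance domains are all hypothetical; this isn't The Bad Space's actual code or data model, just an illustration of why a threshold of one behaves so differently from a threshold of two.

```python
from collections import Counter

# Hypothetical data: the instances each source has taken action against.
# Source names and instance domains are made up for illustration.
source_actions = {
    "source-a": {"example-one.social", "example-two.social"},
    "source-b": {"example-one.social"},
    "source-c": {"example-three.social"},
}


def listed_instances(actions_by_source, min_sources):
    """Return the instances that at least `min_sources` distinct sources acted against."""
    counts = Counter()
    for blocked in actions_by_source.values():
        # Each source counts at most once per instance.
        counts.update(set(blocked))
    return {instance for instance, n in counts.items() if n >= min_sources}


# A threshold of 1 mirrors the bug: any single source is enough to get listed.
buggy = listed_instances(source_actions, min_sources=1)
# A threshold of 2 mirrors the intended behavior described in this footnote.
intended = listed_instances(source_actions, min_sources=2)

print(sorted(buggy))
print(sorted(intended))
```

With min_sources=1, every instance any one source acted against ends up listed, so a single source's idiosyncratic decision (for example, about a sex-positive queer instance) propagates to everybody using the list. Requiring agreement from two or more sources trades some coverage for resistance to that failure mode.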

11 Why am I so convinced that it was a false accusation? For one thing, the accuser admitted it: "I fucked up." Also, facts are facts. Nobody had been fired. Two volunteers had left the Tusky team, and both of them said that it was unrelated to the Black trans person's comment.

The false accuser says it wasn't intentional. They just happened to stumble upon a post by a Black trans person who had blocked them, flipped out (because tech.lgbt was being held accountable for the post I mentioned above where their moderator had ignored crucial details and added credibility to racist harassment of a Black person), and thought the situation was so urgent that they needed to make a post that (in their own description) "rushed to conclusions" and "speculated on what I couldn't see under the hood" and was wrong.

Part 4

Coming soon

Part 5

Compare-and-contrast: Fediseer, FIRES, and The Bad Space

It’s possible to talk about The Bad Space without being racist or anti-trans – but it’s not as easy as it sounds went into detail on one tool designed to help address the lack of safety in Mastodon and today’s fediverse, and to move beyond today's fediverse's reliance on blocklists. In this installment in the series, I'll compare and contrast The Bad Space with some other emerging, somewhat similar projects.

- Fediseer is another instance catalog, including endorsements as well as negative judgments about instances.

- FIRES (an acronym for Fediverse Intelligence Recommendations & Replication Endpoint Server) is infrastructure for moderation advisories and recommendations.

One big contrast is that The Bad Space is the only one of these projects with an explicit focus on protecting marginalized people – and, not so coincidentally, the only one of the projects that's done in collaboration only with instances committed to actively moderating against racism, transphobia, ableism, etc. As I'll discuss in the next few sections, that's far from the only difference ... but it's a critical one, and I'll return to it in the final section of this article.

On the other hand, there are also some important similarities, especially at the architectural level:

- a separation between a database (or catalog) of information about instances and moderation decisions about whether to suspend or silence them. Blocklists, by contrast, explicitly embed a moderation decision. As The Bad Space's "heat maps" (which can be used as blocklists) illustrate, it's possible to use the data in the catalog to generate a blocklist ... but as the FSEP user-focused follower-approval tool illustrates, that's far from the only way to use this information. So all of these projects can help reduce today's fediverse's dependency on blocklists.

- the ability for people or instances to use the software to host their own services. This fits in well with the fediverse's decentralized approach, and at least potentially avoids the problem of concentrating power.

So let's drill down into the details of these other projects. If you're not familiar with The Bad Space and FSEP, here's a short overview.

Fediseer

"The fediseer is a service for the fediverse which attempts to provide a crowdsourced human-curated spam/ham classification of fediverse instances as well as provide a public space to specify approval/disapproval of other instances."

– The Fediseer FAQ