Compare and contrast: Fediseer, FIRES, and The Bad Space

Part 4 of "Golden opportunities for the fediverse – and whatever comes next"

Earlier posts in the series:

- Mastodon and today’s fediverse are unsafe by design and unsafe by default

- Blocklists in the fediverse

- It’s possible to talk about The Bad Space without being racist or anti-trans – but it’s not as easy as it sounds

Intro

The Bad Space is only one of the projects exploring different ways of moving beyond the fediverse's current reliance on instance-level blocking and blocklists. It's especially interesting to compare and contrast The Bad Space with two somewhat-similar projects:

- Fediseer is another instance catalog, including endorsements as well as negative judgments about instances.

- FIRES (an acronym for Fediverse Intelligence Recommendations & Replication Endpoint Server) is infrastructure for moderation advisories and recommendations.

Philosophically, one big contrast is that FIRES is purely a software infrastructure project, while The Bad Space and Fediseer also include a "flagship" site with data about instances and a web user interface for individuals. Of course, as the FSEP proposal illustrates, The Bad Space can be a starting point for additional tools, and the same's true for Fediseer, so they can also be used as infrastructure; and others can deploy the software as well, so they're not limited to the flagship. Still, FIRES' focus on infrastructure means that it's a richer platform for building a wide range of tools that fit into existing workflows.

Another equally big contrast is that The Bad Space is the only one of these projects with an explicit focus on protecting marginalized people – and, not so coincidentally, the only one that's done in collaboration only with instances committed to actively moderating against racism, transphobia, ableism, etc. That's not meant as a criticism of Fediseer or FIRES; their developers have also kept threats to marginalized people in mind as part of their broader focus. But they've got a different focus: Fediseer started by focusing on spam, and FIRES is intended as general-purpose infrastructure. As I'll discuss later in the article, this difference in focus has some interesting ramifications.

On the other hand, there are also some important similarities. Most importantly, all of these projects reflect a separation between a database (or catalog) of information about instances and moderation decisions about whether to suspend or silence them. Blocklists, by contrast, explicitly embed a moderation decision. As The Bad Space's "heat maps" (which can be used as blocklists) illustrate, it's possible to use the data in the catalog to generate a blocklist; but as the FSEP user-focused follower-approval tool highlights, that's far from the only way to use this information. So all of these projects can help reduce today's fediverse's dependency on blocklists. That's good!
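To make that separation concrete, here's a minimal sketch in Python. It uses a made-up in-memory catalog rather than any project's real data or API; the `CatalogEntry` fields, the `.example` domains, and the `min_share` threshold are all assumptions for illustration. The same catalog feeds two different decisions: an admin's blocklist and an individual's follow-request screening.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CatalogEntry:
    """Hypothetical catalog record: how many sources acted against an instance."""
    domain: str
    sources_acting: int  # sources that suspended or silenced this instance
    total_sources: int   # sources consulted


def blocklist(catalog, min_share):
    """Decision 1: block instances flagged by at least min_share of sources."""
    return sorted(
        e.domain for e in catalog
        if e.sources_acting / e.total_sources >= min_share
    )


def needs_review(catalog, follower_handle):
    """Decision 2: hold a follow request for manual approval; nothing is blocked."""
    domain = follower_handle.rsplit("@", 1)[-1]
    flagged = {e.domain for e in catalog if e.sources_acting > 0}
    return domain in flagged


catalog = [
    CatalogEntry("bad.example", 9, 10),
    CatalogEntry("iffy.example", 3, 10),
    CatalogEntry("fine.example", 0, 10),
]

print(blocklist(catalog, min_share=0.5))               # ['bad.example']
print(needs_review(catalog, "@someone@iffy.example"))  # True
```

The catalog itself never says "block"; that choice lives in whichever function consumes it, which is the point of separating the data from the decision.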

So let's drill down into the details of these other projects, and then look at the impact of the difference in philosophies.

Fediseer

"The fediseer is a service for the fediverse which attempts to provide a crowdsourced human-curated spam/ham classification of fediverse instances as well as provide a public space to specify approval/disapproval of other instances."

– The Fediseer FAQ

Fediseer started with a focus on spam, which is a huge problem on Lemmy. But spam is far from the only huge moderation problem on Lemmy. Thanks to Fediseer's general approval/disapproval mechanism (and the availability of scripts that can automatically update lists of blocked instances), many instances also use it to help block sites with CSAM (child sexual abuse material) and as a basis for blocklists.

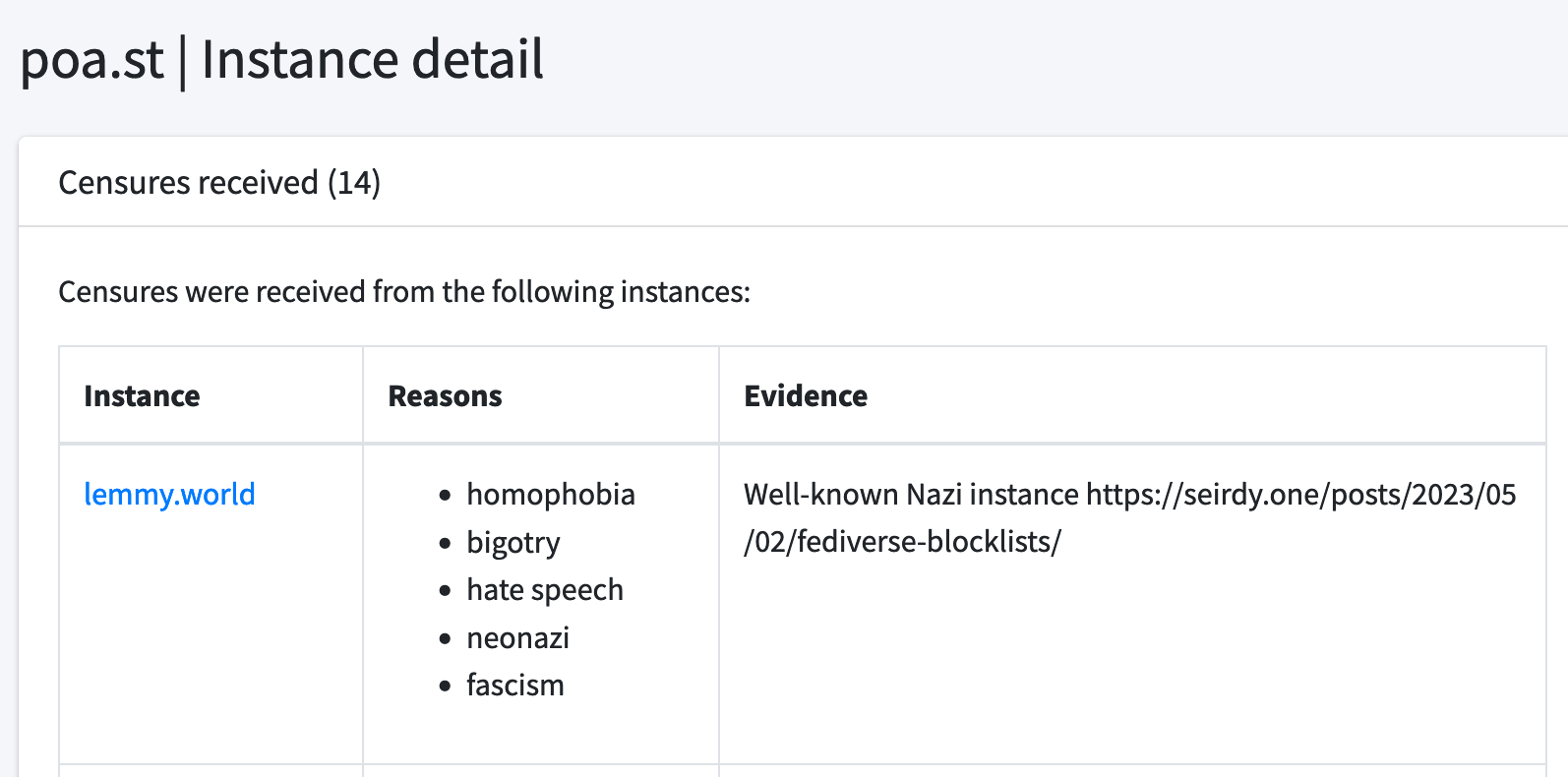

Fediseer uses a "chain of trust" model in which admins of any participating instance guarantee other instances, which allows them to participate. Instances can also provide endorsements ("completely subjective positive judgments") as well as censures (completely subjective negative judgments) and hesitations (milder versions of censures) of other instances. Judgments can include optional reasons (aka tags) and evidence. Including positive as well as negative reviews is a good example of moving beyond relying on blocklists; it's easy to imagine how this could be part of an instance recommendation tool for new users. And Fediseer also allows instances to keep their judgments private (accessible only to their friends) – extremely important functionality from a safety perspective.

Fediseer's UI and API (programming interface) make it easy to retrieve all the censures given out by one or more instances. So if all the sources for The Bad Space participated in Fediseer, then retrieving judgments only from those sources would (at least in theory) give similar results to The Bad Space (see note 0).
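As a rough sketch of that equivalence, the Python below works on made-up in-memory records rather than calling Fediseer's real API (whose endpoints aren't shown in this article). The `Judgment` type, the `.example` domains, and the `ACTION_TO_JUDGMENT` mapping are assumptions for illustration; the mapping follows note 0 in treating a suspension as a censure and a silence as a hesitation.

```python
from collections import defaultdict
from dataclasses import dataclass

# Assumed mapping (see note 0): suspend -> censure, silence -> hesitation.
ACTION_TO_JUDGMENT = {"suspend": "censure", "silence": "hesitation"}


@dataclass(frozen=True)
class Judgment:
    source: str  # instance giving the judgment
    target: str  # instance being judged
    kind: str    # "endorsement", "censure", or "hesitation"


def from_moderation_actions(actions):
    """Convert (source, target, action) tuples into Fediseer-style judgments."""
    return [Judgment(s, t, ACTION_TO_JUDGMENT[a]) for s, t, a in actions]


def negative_judgments_from(judgments, trusted_sources):
    """Group censures and hesitations by target, keeping only trusted sources."""
    by_target = defaultdict(list)
    for j in judgments:
        if j.source in trusted_sources and j.kind in ("censure", "hesitation"):
            by_target[j.target].append((j.source, j.kind))
    return dict(by_target)


actions = [
    ("a.example", "spam.example", "suspend"),
    ("b.example", "spam.example", "silence"),
    ("c.example", "a.example", "suspend"),  # c.example is not a trusted source
]
judgments = from_moderation_actions(actions)
result = negative_judgments_from(judgments, {"a.example", "b.example"})
print(result)
```

Here only `spam.example` shows up in the result, with one censure and one hesitation; the judgment from `c.example` is ignored because it isn't in the trusted set.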

Fediseer's UI also makes it easy to see all the judgments that have been made on an instance.

You can also see the judgments an instance has shared and made public. There are also lists of "safelisted" and "suspicious" instances – although this safety and suspiciousness only refers to spamming behavior.

Fediseer is currently playing a very valuable role in the Lemmyverse, including making it much easier for instances to respond quickly when CSAM is detected. The most common objection I've heard to it is the risk that it could become a centralized catalog. That would be bad, but it's not clear to me that it's a realistic threat – Fediseer founder db0 is explicit that he doesn't want that to happen, and the software is open-source and so can also be used by people creating their own sites as an antidote to centralization.

On the other hand, some of Fediseer's challenges stem from its "open" approach of allowing all non-spamming instances to participate. For example, what will happen if Fediseer gets popular and harassers start up one-person instances just to give their targets' instances bad reviews – or give other harassers' instances good reviews? Fediseer does include "mute" and "restrict" functionality, so there are tools to stop abuse or limit the harms, but it's not at all clear how this will play out in practice as it grows.

Still, Fediseer's at an early stage, so it may well find ways to address these challenges. And the ability for people to host their own version means it can also be used as a basis for organizations – or groups of instances – to set up their own similar site that limits participation.

FIRES

"Our present infrastructure of denylists (née “blocklists”) will only get us so far: we need more structured data so we can make informed decisions, we need more options for moderating than just defederating, and the ability to subscribe to changes in efficient & resumable ways."

– Emelia Smith, October 2023 update

FIRES architect Emelia Smith has a long history of trust and safety work in the fediverse (including contributions to Mastodon and Pixelfed as well as moderation work at Switter.at), and describes this project as "thinking two steps ahead of where we are currently." It's at an earlier stage than Fediseer or The Bad Space: Nivenly Foundation announced its sponsorship for FIRES in October (funded, like FSEP, via a direct donation from Nivenly board member Mekka Okereke), and Emelia's currently working on a detailed technical proposal. I've based this description on the information in Emelia's public updates and an early draft of the proposal – so it may well change as the project evolves!

FIRES goes beyond the other tools by supporting a wide range of moderation advisories and recommendations – and providing information about how they've changed over time. The Bad Space records suspensions and silencing; Fediseer supports judgments including endorsements, censures, and hesitations; FIRES is designed to allow for much more precise specifications, for example automatically putting a CW on posts from a site (functionality supported by Akkoma and Pleroma), rejecting media, or allowing federation. And FIRES provides a log of how recommendations have changed, which is particularly important for dealing with situations where instances have cleaned up their act (so past decisions to block or silence potentially should be revisited).
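Since FIRES is still at the proposal stage, the following is only a hypothetical Python sketch of what "a log of how recommendations have changed" could look like, not FIRES' actual data model or API. The `RecommendationLog` class, its method names, and the recommendation labels are all invented for illustration. It keeps an append-only list of changes, so a consumer can see the current recommendation, the full history for an instance, and everything that changed since it last checked.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Change:
    seq: int             # position in the append-only log, starting at 1
    entity: str          # instance domain (could also be an individual actor)
    recommendation: str  # made-up labels, e.g. "reject-media", "federate"


class RecommendationLog:
    """Hypothetical append-only log; past entries are never edited or deleted."""

    def __init__(self):
        self._changes = []

    def record(self, entity, recommendation):
        change = Change(len(self._changes) + 1, entity, recommendation)
        self._changes.append(change)
        return change

    def current(self):
        """Latest recommendation per entity (later entries win)."""
        state = {}
        for c in self._changes:
            state[c.entity] = c.recommendation
        return state

    def history(self, entity):
        """Every recommendation ever recorded for one entity, oldest first."""
        return [c.recommendation for c in self._changes if c.entity == entity]

    def since(self, cursor):
        """Changes with seq greater than cursor, so a consumer can resume a sync."""
        return self._changes[cursor:]


log = RecommendationLog()
log.record("messy.example", "defederate")
log.record("other.example", "content-warning")
log.record("messy.example", "federate")  # the instance cleaned up its act

print(log.current()["messy.example"])   # federate
print(log.history("messy.example"))     # ['defederate', 'federate']
print([c.seq for c in log.since(2)])    # [3]
```

Because nothing is overwritten, an admin revisiting an old block can see both that `messy.example` was once recommended for defederation and that the recommendation later changed.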

Another important distinction is that FIRES' recommendations and advisories aren't necessarily limited to instances. As I mentioned in What about blocklists for individuals (instead of instances)?, it's kind of surprising that Twitter-like blocklists and tools like Block Party haven't emerged yet on the fediverse. My guess is that's likely to change in 2024, so this is a great example of thinking ahead. As Johannes Ernst mentions in Meta/Threads Interoperating in the Fediverse Data Dialogue Meeting yesterday, it might well be "helpful for Fediverse instances (including Threads) to share reputation information with other instances that each instance might maintain on individual ActivityPub actors for its own purposes already." FIRES could be a good mechanism for this whether or not Threads is involved.

Finally, unlike The Bad Space and Fediseer, FIRES doesn't have a web user interface. Instead, it's intended as the basis for small servers that provide data to various consumers – including tools with their own user interfaces, either for users or instance admins. Of course, many infrastructure projects wind up incorporating tools with user interfaces, so this might not be a long-term difference. In the short term, though, it's a choice that makes it easier to focus on the infrastructure aspects.

A difference in philosophies

From a software engineering perspective, FIRES' general infrastructure could well serve as the underpinnings for a re-implementation of Fediseer and The Bad Space – providing additional functionality like more precise information about recommendations and history. Similarly, The Bad Space could be re-implemented (with a far less colorful user interface) as an installation of Fediseer where the only participants are The Bad Space's current sources, and only censures and hesitations are tracked. And while the three projects currently have somewhat different APIs, there's no reason they couldn't converge on a standard – which would allow tools built on top of them to be interoperable. So when taken together, the projects clearly highlight some similar thinking.

But those similarities are only part of the story. There's also the big contrast I discussed at the beginning of this article: The Bad Space, unlike the other projects, has an explicit focus on protecting marginalized people. At the risk of repeating myself: I certainly don't mean this as a criticism of either of the approaches! Just as it's healthy to have multiple independent implementations exploring different variations on functionality or user interface, it's healthy to have different philosophies. And since The Bad Space is open-source software, it could also be adopted by racists and transphobes who set up their own individual instance.

Still, it's a very interesting contrast. Here's one example of how it manifests itself on The Bad Space and Fediseer's flagship sites:

- berserker.town's description on The Bad Space is currently "Hate speech, Federating with 'free speech' instances, hosting ableist, transphobic, fascist content, Edgelord", and all of The Bad Space's sources have taken action against it (not surprising, since it's on Seirdy's, gardenfence's, and Oliphant's Tier-0 blocklists).

- berserker.town's Fediseer page, by contrast, has endorsements including "active moderators," "cool admin," and "respectful users" as well as censures including "sealioning," "harassment," and "transphobia." Opinions differ!

Of course, because Fediseer API calls are filtered by specific reference instances, the specific information somebody gets about berserker.town depends on what instances they're using as a reference.

- If your reference is lemmy.blahaj.zone (which describes itself as "very protective of our minority members and bigotry of any variety will be squashed with great prejudice"), you'll see a censure.

- If your reference is qoto.org (whose about page includes "we won’t censor unpopular ideas and statements"), you'll see an endorsement.
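Here's a small Python sketch of why the answer depends on the reference instances. It uses invented records and placeholder `.example` domains rather than real Fediseer data or API calls, and the `view_from` helper is hypothetical.

```python
def view_from(judgments, references, target):
    """Return only the judgments about `target` made by the chosen references."""
    return sorted(
        (source, kind)
        for source, judged, kind in judgments
        if judged == target and source in references
    )


# Made-up (source, target, kind) records.
judgments = [
    ("protective.example", "contested.example", "censure"),
    ("freespeech.example", "contested.example", "endorsement"),
    ("protective.example", "other.example", "endorsement"),
]

print(view_from(judgments, {"protective.example"}, "contested.example"))
# [('protective.example', 'censure')]
print(view_from(judgments, {"freespeech.example"}, "contested.example"))
# [('freespeech.example', 'endorsement')]
```

The underlying data is identical in both calls; only the set of references changes, and with it the picture of the same instance.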

One way to look at this is that by crowdsourcing from a larger crowd (anybody running an instance, as opposed to The Bad Space's small number of sources), Fediseer provides more recommendations that are useful to a broader range of people – which could well lead to broader adoption. As db0 said in a discussion of an earlier draft of this article,

"My thought is that the "bad" aspect of the fediseer open design (the potential to platform bigots) is minimized by the reference-based model and overshadowed by the benefits of being able to provide the tbs functionality for a wider audience."

People on instances that show up on The Bad Space may well be more accepting of Fediseer because it presents both sides, including endorsements of their instances as well as critiques. This is especially true for racists and "freeze peach" absolutists who hate The Bad Space because it focuses on letting people protect themselves from racist instances, and for people who have critiqued The Bad Space during the recent messy discussions. Fediseer's also better suited than The Bad Space for people who want to find instances with "cool admins" even if they're widely blocked for harassment and transphobia.

But then again, this value-neutral "open" approach means platforming the opinions of nazis, white supremacists, terfs, racists, and misogynists (as long as they avoid engaging in hate speech or harassment on Fediseer). From the perspective of marginalized people these groups target, "more recommendations" isn't necessarily better, and curated systems like The Bad Space may well be more attractive. As Fediseer expands outside the Lemmyverse, how many instances moderated from an anti-racist perspective will decide to participate?

Another way this difference in philosophy manifests itself:

- The Bad Space provides narrowly-focused information (to individuals as well as admins) about thousands of instances that cause harm, so most of the value goes to marginalized people who would otherwise be targeted by those instances.

- Fediseer provides much broader information – anti-spam, endorsements, and instances that some see as moderating overly aggressively as well as instances that cause harm – so a smaller percentage of the value goes to marginalized people. And Fediseer chose not to leverage existing blocklist and #Fediblock data, so it has information about a smaller number of instances (hundreds rather than thousands). Since there's less data available, it provides less protection from instances targeting marginalized people.

- FIRES is pure infrastructure, so relies on intermediaries to deliver value to admins and end users. Of course intermediaries might choose to prioritize protecting marginalized users, but then again they might not. FIRES itself takes no stance either way.

And yet another example relates to the question of how to ensure that marginalized perspectives are taken into account in the information that's provided. For The Bad Space, it's by the choice of sources: instances that moderate from an anti-oppressive perspective, most of which are led by trans and queer people. This certainly isn't a perfect answer but at least it's an answer! For FIRES and Fediseer, by contrast, this question is basically outside of the scope of the project – it's up to whoever's querying Fediseer or building tools on FIRES infrastructure to either incorporate marginalized perspectives or not, and there isn't any functionality to make it easier or harder to do so.

Looking forward

The Bad Space and Fediseer both have some initial traction, and as FIRES moves forward I'd expect it to get adoption as well. There are already several decent solutions for instances and individuals that just want a “worst-of-the-worst” blocklist (including Seirdy’s FediNuke, gardenfence, and Oliphant’s Tier-0), but after the messy discussions around The Bad Space and FSEP there's broad awareness of the limitations and downsides of relying too much on instance-level blocklists. Even in their current form, these projects have a lot of value. For example, individuals who are at risk because their instance's blocklists don't protect them are very likely to find The Bad Space quite useful, both for screening follow requests and as their own personal blocklist.

Over time, it'll be interesting to see how the projects evolve and what tools are built on top of them. The projects have enough similarities that there are some major potential synergies – and are different enough that they're likely to prove valuable in different ways.

Of course, instance catalogs and reputation systems are only one aspect of the improvements that are needed to create a safer fediverse. I'll discuss some of the others in the next installment of the series, tentatively titled Steps to safer fediverses. Also still to come: a discussion of the golden opportunities for the fediverse – and whatever comes next.

Some sneak previews:

But these tools are only the start of what's needed to change the dynamic more completely. To start with, fediverse developers, "influencers," admins, and funders need to start prioritizing investments in safety. The IFTAS Fediverse Moderator Needs Assessment Results highlight one good place to start: provide resources for anti-racist and intersectional moderation. That includes sharing and distilling "positive deviance" examples of instances that do a good job, documentation of best practices, training, mentoring, templates for policies and process, workshops, and cross-instance teams of expert moderators who can provide help in tricky situations. Resources developed and delivered with funded involvement of multiply-marginalized people who are the targets of so much of this harassment today are likely to be the most effective.

Plenty of people in today's fediverse are just fine with its white-dominated and cis-dominated power structure – or say they want something different but in practice don't act that way. So maybe we'll see a split, with a region of the fediverses moving in an anti-racist, pro-LGBTAIQ2S+ and "safety by default" direction. As I said about another potential split in There are many fediverses, if it happens, it'll be a good thing.

Or who knows, maybe we'll see a bunch of anti-racist and pro-LGBTAIQ2S+ instances saying fine, whatever, bye-bye fediverse, it's time to do something else. If it happens, that too will be a good thing.

Notes

0 Assuming that suspending is treated as a censure and silencing as a hesitation.