Mastodon and today's ActivityPub Fediverse are unsafe by design and unsafe by default

Part 1 of "Golden opportunities for the fediverse – and whatever comes next"

The ecosystem of interconnected social networks known as the fediverse has great potential as an alternative to centralized corporate networks. With Apartheid Clyde transforming Twitter into a machine for fascism and disinformation, and Facebook continuing to allow propaganda and hate speech to thrive while censoring support for Palestinians, the need for alternatives is more critical than ever.

But even though millions of people left Twitter in the last two years – and millions more are ready to move as soon as there's a viable alternative – the ActivityPub Fediverse isn't growing.1 One reason why: today's Fediverse is unsafe by design and unsafe by default – especially for Black and Indigenous people, women of color, LGBTQIA2S+ people2, Muslims, disabled people and other marginalized communities.

It doesn't have to be that way. Straightforward steps could make the ActivityPub Fediverse safer – and open up some amazing opportunities.

Then again, there's also a lot of resistance to making progress on this, and the ActivityPub Fediverse has a looooong history of racism and transphobia. So maybe the Fediverse will shrug its collective shoulders, continue to be unsafe by design and unsafe by default, and whatever comes next will seize the opportunities.

Over the course of this multi-part series, I'll discuss Mastodon and the ActivityPub Fediverse's long-standing problems with abuse and harassment; the strengths and weaknesses of current tools like instance blocking and blocklists (aka denylists); the approaches emerging tools like The Bad Space and Fediseer take, along with potential problems; paths to improving the situation; how the fediverses as a whole can seize the moment and build on the progress that's being made; and what's likely to happen if the fediverse as a whole doesn't seize the opportunity. It's a lot, so it'll take a few posts! At the end I'll collect it all into a single post, with a revised introduction.3

This first installment has three sections:

- Mastodon is unsafe by design and unsafe by default – and most people in today's Fediverse use Mastodon

- Instance-level federation choices are a blunt but powerful safety tool

- Instance-level federation decisions reflect norms, policies, interpretations, and (sometimes) strategy

Update: There are many fediverses (2024) discusses the terminology I'm now using in general – but there's no need to wallow in the details unless you're into it. A fediverse is a decentralized social network. Different people mean different things by "the Fediverse" (and Definitions of "the Fediverse" goes into a lot more detail for your wallowing pleasure). Since 2018 or so most people have used "the Fediverse" and "the fediverse" as a synonym for the ActivityPub-centric Fediverse (although that may be changing), and that's how I'm using the terms here.

Mastodon is unsafe by design and unsafe by default – and most people in today's Fediverse use Mastodon

The 1.25 million active users in today's ActivityPub Fediverse are spread across more than 20,000 instances (aka servers) running a wide variety of software. On instances with active and skilled moderators and admins it can be a great experience. But not all instances are like that. Some are bad instances, filled with nazis, racists, misogynists, anti-LGBTQIA2S+ haters, antisemites, Islamophobes, and/or harassers. And even on the vast majority of instances whose policies prohibit racism (etc.), relatively few of the moderators in today's fediverse have much experience with anti-racist or intersectional moderation – so very often racism (etc.) is ignored or tolerated when it inevitably happens.

This isn't new. In a 2022 discussion, Lady described the OStatus Fediverse's late-2016 culture (when Mastodon first started) as "a bunch of channer shit and blatantly anti-gay and anti-trans memes", and notes that "the instance we saw most often on the federated timeline in those days was shitposter.club, a place which virtually every respectable instance now has blocked." clacke notes that "the famous channer-culture and freezepeach instances came up only months before Mastodon's "Show HN"" in 2016. Creatrix Tiara's August 2018 Twitter thread discusses a 2017 racist dogpiling led by an instance hosted by an alt-right podcaster.

But just like Nazis and card-carrying TERFs aren't the only source of racism and transphobia and other bigotry in society, bad instances are far from the only source of racism, transphobia, and other bigotry in the fediverses. Some instances – including some of the largest, like pawoo.net – are essentially unmoderated. Others have moderators who don't particularly care about moderating from an anti-racist and pro-LGBTQIA2S+ perspective. And even on instances where moderators are trying to do the right thing, most don't have the training or experience needed to do effective intersectional moderation. So finding ways to deal with bad instances is necessary, but by no means sufficient.

This also isn't new. Margaret KIBI's The Beginnings, for example, describes weaponization of content warnings (CWs) back in 2017 where "white, trans users—who, for the record, posted un‐CWed trans shit in their timeline all the time—started taking it to the mentions of people of colour whenever the subject of race came up." Creatrix Tiara's November 2022 Twitter thread has plenty of examples from BIPOC fediversians about similar racialized CW discourse.

"we made this space our own through months of work and a fuckton of drama and infighting. but we did a good enough job that when mastodon took off, OUR culture was the one everybody associated it with"

– Lady, November 2022

In Mastodon's early days of 2016-2017, queer and trans community members drove improvements to the software and developed tools which – while very imperfect – help individuals and instance admins and moderators address these problems, at least to some extent. Unfortunately, as I discuss in The Battle of the Welcome Modal, A breaking point for the queer community, The patterns continue ..., and Ongoing contributions – often without credit, hostile responses from Mastodon's Benevolent Dictator for Life (BDFL) Eugen Rochko (aka Gargron) and a pattern of failing to credit people for their work drove many key contributors away.

Others remain active – and forks like glitch-soc continue to provide additional tools for people to protect themselves – but Mastodon's pace of innovation had slowed dramatically by 2018.8 Mastodon lacks basic safety functionality like Twitter's ability to limit replies (requested since 2018) or make a profile private.9 Not only that, some of the protections that Mastodon does provide aren't turned on by default – or are only available in forks, not the official release – and many have caveats even when enabled. For example:

- while Mastodon does offer the ability to ignore direct messages (DMs) and notifications from people who you aren't following – great for cutting down on harassment as well as spam – that's not the default. Instead, by default your inbox is open to nazis, spammers, and everybody else until you've found and updated the appropriate setting on one of the many settings screens.10

- by default, blocking on Mastodon isn't particularly effective unless the instance admin has turned on a configuration option.11

- by default, all follow requests are automatically approved, unless you've found and updated the appropriate setting on one of the many settings screens. Unless you turn this on, the apparent privacy protections of "followers-only posts" are an illusion: anybody you haven't blocked can add themselves as a follower and see your followers-only posts.

- local-only posts (originally developed by the glitch-soc fork in 2017 and also implemented in Hometown and other forks) give people the ability to prevent their posts from being shared with other instances (which might have harassers, terfs, nazis, and/or admins or software that doesn't respect privacy) ... but Rochko has refused to include this functionality in the main Mastodon release.12

- Mastodon supports "allow-list" federation,13 allowing admins to choose whether or not to agree to federate with nazi instances; but Mastodon's documentation describes this as "contrary to Mastodon’s mission of decentralization", so by default, all federation requests are accepted.
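To make the follow-request caveat from the list above concrete, here's a toy model of why followers-only posts offer little protection under the default setting. It's a minimal sketch, not Mastodon's actual code: the `Account` class, its field names, and both functions are hypothetical, and the only thing taken from the text is the assumption that follow requests are approved automatically unless the account owner changes a setting.

```python
from dataclasses import dataclass, field


@dataclass
class Account:
    """Toy account model; field names are hypothetical, not Mastodon's schema."""
    name: str
    # Assumed default, per the text: follow requests are approved automatically.
    manually_approves_followers: bool = False
    followers: set = field(default_factory=set)
    pending: set = field(default_factory=set)
    blocked: set = field(default_factory=set)


def request_follow(requester: str, target: Account) -> bool:
    """Return True if the requester becomes a follower immediately."""
    if requester in target.blocked:
        return False
    if target.manually_approves_followers:
        # The request waits until the account owner reviews it.
        target.pending.add(requester)
        return False
    # Default path: nobody reviews the request.
    target.followers.add(requester)
    return True


def can_see_followers_only_post(viewer: str, author: Account) -> bool:
    """Followers-only visibility is only as strong as the follower list."""
    return viewer in author.followers


# With the default setting, a stranger gets in without any review.
default_account = Account("alice")
request_follow("stranger", default_account)

# With manual approval turned on, the same request just sits in a queue.
locked_account = Account("bob", manually_approves_followers=True)
request_follow("stranger", locked_account)
```

In this model the only thing standing between a stranger and a followers-only post is a block that has to be issued in advance, which is the gap the bullet above describes.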

Other software platforms like Streams, Pixelfed, Akkoma, and Bonfire have put a lot more thought and effort into safety. In a discussion of an earlier version of this article, Streams developer Mike Macgirvin commented "I just never see anybody talking about online safety in our sector of the fediverse, because there's nothing really to talk about." But over 80% of the active users in today's fediverse are on instances running Mastodon software. Worse, some of the newer platforms like Lemmy (a federated reddit alternative) have even fewer tools than Mastodon.

And the underlying ActivityPub protocol the fediverse is built on doesn't design in safety.

"Unfortunately from a security and social threat perspective, the way ActivityPub is currently rolled out is under-prepared to protect its users."

– OcapPub: Towards networks of consent, Christine Lemmer-Webber

"The basics of ActivityPub are that, to send you something, a person POSTs a message to your Inbox.

This raises the obvious question: Who can do that? And, well, the default answer is anybody."

– Erin Shepherd, in A better moderation system is possible for the social web, November 2022

"A dude saying "if you don't like receiving hate messages from nazis just block them" is baked into the source code."

– Bri Seven, August 2024
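Erin Shepherd's "who can do that?" question can be sketched in a few lines. This is an illustration under stated assumptions, not code from any ActivityPub server: `accepts_delivery` and its parameters are hypothetical names, and the `.example` domains are placeholders. It contrasts the open default (anybody who isn't blocked can deliver to your inbox) with allow-list federation (only approved domains can).

```python
from typing import Optional, Set


def accepts_delivery(
    sender_domain: str,
    blocked_domains: Set[str],
    allowed_domains: Optional[Set[str]] = None,
) -> bool:
    """Decide whether an inbox accepts a POST from sender_domain.

    allowed_domains=None models the open default: anybody not blocked gets in.
    Passing a set models allow-list federation: only listed domains get in.
    """
    domain = sender_domain.lower()
    if allowed_domains is not None:
        return domain in allowed_domains
    return domain not in blocked_domains


blocked = {"nazi.example"}

# Open default: a brand-new instance nobody has heard of is accepted.
open_default = accepts_delivery("brand-new.example", blocked)

# Allow-list mode: the same instance is refused until somebody approves it.
allow_listed = accepts_delivery(
    "brand-new.example", blocked, allowed_domains={"friendly.example"}
)
```

Under the open default, protection only starts after somebody has noticed a bad instance and blocked it. Under an allow-list, the burden shifts to the newcomer.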

Despite these problems, many people on well-moderated instances have very positive experiences in today's fediverse. Especially for small-to-medium-size instances, for experienced moderators even Mastodon's tools can be good enough.

However, many instances aren't well-moderated. So many people have very negative experiences in today's fediverse. For example ...

"During the big waves of Twitter-to-Mastodon migrations, tons of people joined little local servers ... and were instantly overwhelmed with gore and identity-based hate."

– Erin Kissane, Blue skies over Mastodon (May 2023)

"It took me eight hours to get a pile of racist vitriol in response to some critiques of Mastodon."

– Dr. Johnathan Flowers, The Whiteness of Mastodon (December 2022)

"I truly wish #Mastodon did not bomb it with BIPOC during the Twitter migration.... I can't even invite people here b/c of what they have experienced or heard about others experiencing."

– Damon Outlaw, May 2023

Instance-level federation choices are a blunt but powerful safety tool

"Instance-level federation choices are an important tool for sites that want to create a safer environment (although need to be complemented by user-level control and other functionality)."

– Lessons (so far) from Mastodon, originally written May 2017

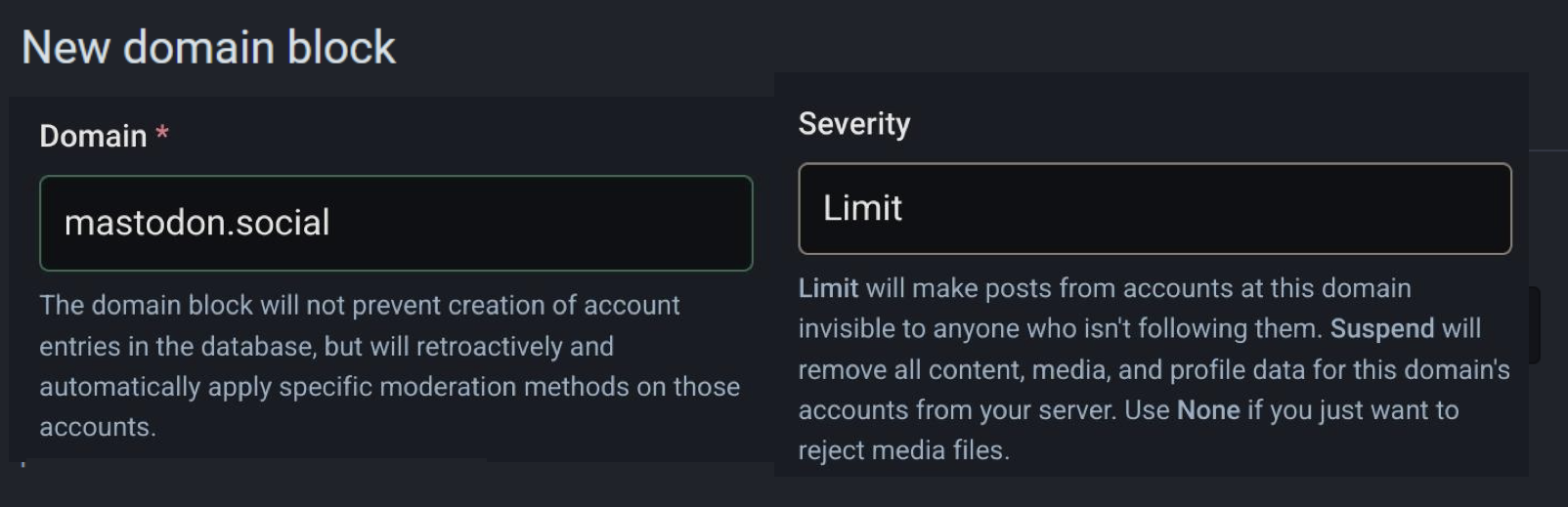

For people who do have good experience, one of the key reasons is a powerful tool Mastodon first developed back in 2017: instance-level blocking, including the ability to

- defederate (aka suspend) another instance, preventing future communications and removing all existing connections between accounts on the two instances

- limit (aka silence) another instance, limiting the visibility of posts and notifications from that instance to some extent (although not completely) unless people are following the account that makes them.

- reject reports from another instance, preventing malicious reporting

- reject media from another instance, to ensure that illegal or objectionable content isn't federated
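The four options above can be thought of as a small per-domain policy record. The sketch below is a simplification under assumptions: `Severity`, `DomainPolicy`, and `handle_incoming` are hypothetical names rather than Mastodon's internals, and "limit" is reduced to "hide posts unless the viewer follows the author," which is cruder than what real software does.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict


class Severity(Enum):
    NONE = "none"        # no restriction on posts
    LIMIT = "limit"      # aka silence
    SUSPEND = "suspend"  # aka defederate


@dataclass(frozen=True)
class DomainPolicy:
    severity: Severity = Severity.NONE
    reject_reports: bool = False
    reject_media: bool = False


def handle_incoming(kind: str, domain: str, viewer_follows_author: bool,
                    policies: Dict[str, DomainPolicy]) -> str:
    """Return 'drop', 'hide', or 'show' for an incoming item.

    kind is 'post', 'media', or 'report' (a deliberate simplification).
    """
    policy = policies.get(domain, DomainPolicy())
    if policy.severity is Severity.SUSPEND:
        return "drop"  # defederated: nothing gets through
    if kind == "report" and policy.reject_reports:
        return "drop"
    if kind == "media" and policy.reject_media:
        return "drop"
    if (kind == "post" and policy.severity is Severity.LIMIT
            and not viewer_follows_author):
        return "hide"  # limited: visible only to people following the author
    return "show"


policies = {
    "worst.example": DomainPolicy(severity=Severity.SUSPEND),
    "loose.example": DomainPolicy(severity=Severity.LIMIT, reject_reports=True),
}
```

Keeping the flags independent is what makes the tool a little less blunt: an admin can reject reports from an instance that abuses them without cutting off every connection to it.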

Terminology note: yes, it's confusing. Different people use different terms for similar things, and sometimes the same term for different things ... and the "official" terminology has changed over the years.

Of course, instance-level blocking is a very blunt tool, and can have significant costs as well. Mastodon unhelpfully magnifies the costs by not giving an option to restore any connections that are severed by defederation,14 not letting people know when they've lost connections because of defederation, and making the experience of moving between instances unpleasant and awkward.

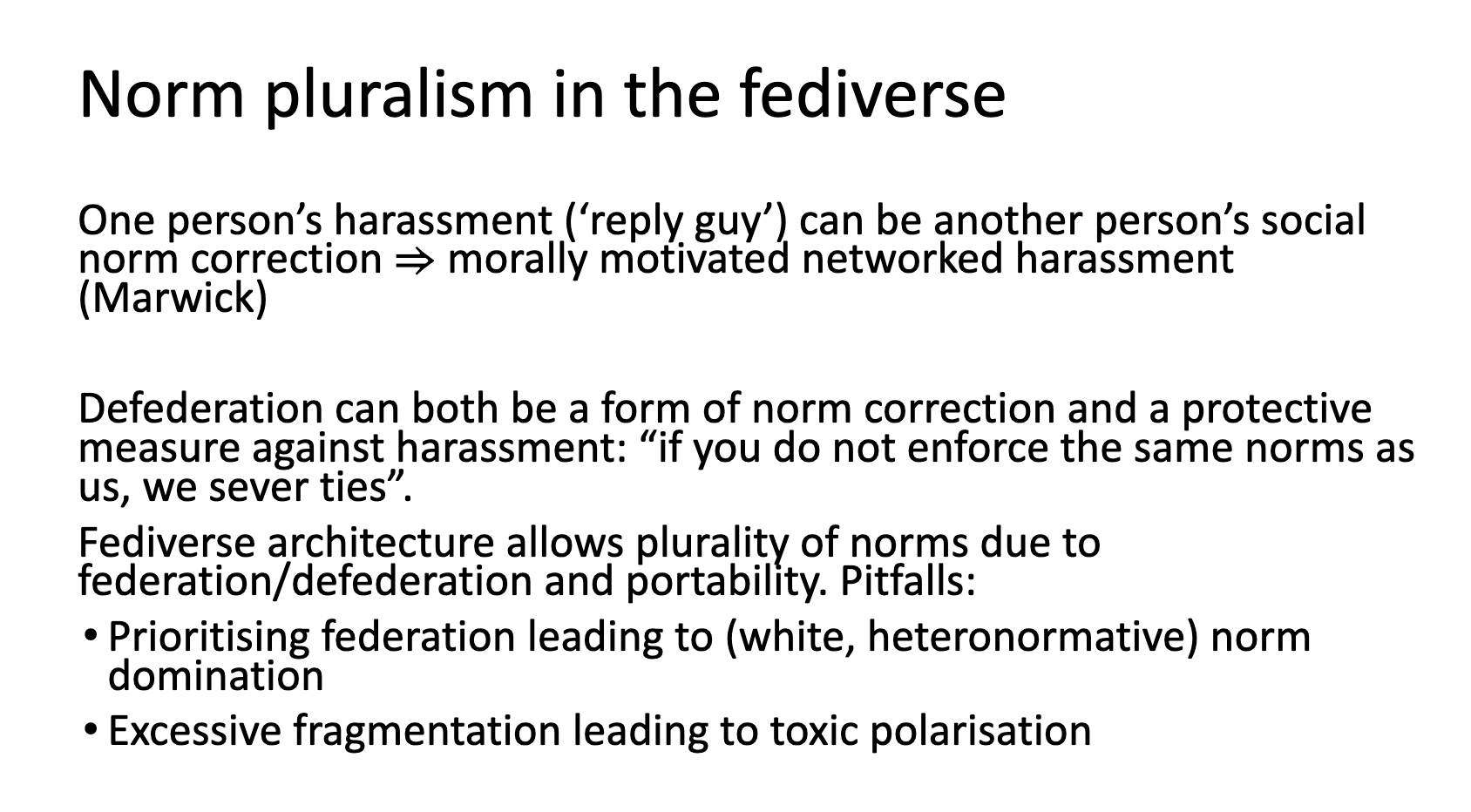

Opinions differ on how to balance the costs and benefits, especially in situations where there are some bad actors on an instance as well as lots of people who aren't bad actors.

Instance blocking decisions often spark heated discussions. For example, suppose an instance's admin makes a series of jokes that some people consider perfectly fine but others consider racist, and then verbally attacks people who report the posts or call them out. Others on their instance join in as well, defending somebody they see as unfairly accused, and in the process make some comments that they think are just fine but others consider racist. When those comments are reported, the moderators (who think they're just fine) don't take action. Depending on how you look at it, it's either

- a pattern of racist behavior by the admin and members of the instance, failure to moderate racist posts, and brigading by the members of the instance,

- or a pattern of false accusations and malicious reporting from people on other instances

Is defederation (or limiting) appropriate? If so, who should be defederated or limited – the instance with the comments that some find racist, or the instances reporting comments as racist that others think are just fine? Unsurprisingly, opinions differ. In situations like this, white people are more likely to be forgiving of the posts and behavior that people of color see as racist – and more likely to see any resulting defederation or limiting as a punishment.

Or consider the scenario Mahal discusses on todon.eu, one that I've heard repeatedly from other moderators as well:

"If you have users* that talk about #Palestine or especially tag out the @palestine and @israel groups (*especially* when they speak positively about Palestine), beware.

These people will get reported by bad-faith right-wingers, neoliberals, *and* genocide apologist leftists.

They will pressure you. They will annoy you. They will do everything they can until you get pissed off and do something about those people, who are then completely powerless against your ability to make them migrate and go through the set-up process again."

If a specific instance is the source of a lot of this bad-faith pressure, blocking reports can help substantially. But if the bad-faith pressure also comes in the form of replies and tags from that instance, when is silencing or blocking appropriate?

I'll delve more into other scenarios where opinions differ on whether defederation or limiting is appropriate in the next section. First, though, I really want to emphasize the value of instance-level blocking.

- Defederating from a few hundred "worst-of-the-worst" instances makes a huge difference.

- Defederating or limiting large loosely-moderated instances (like the "flagship" mastodon.social) that are frequent sources of racism, misogyny, and transmisia15 means even less harassment and bigotry (as well as less spam).

- Mass defederation can also send a powerful message. When far-right social network Gab started using Mastodon software in 2019, most fediverse instances swiftly defederated from it. Bye!!!!!!!

Instance-level blocking really is a very powerful tool.

#FediBlock and receipts

"I noticed that I was not the only femme user who was being targeted. This was a fucking nuisance. So I decided to create a tag, and the thing is that tags kind of feel old school, annoying, not another tag, but then again, what other tools do I have to warn other users besides a tag."

– #Fediblock, a Tiny History, on Artist Marcia X, 2024

When an admin blocks an instance for spreading harassment and hate, they often want to tip off other admins who may well also want to block that instance. Back in 2017, Marcia X created the #FediBlock hashtag, and Ginger helped spread it via faer networks, to make it easier for admins and users to share blocking information. Usually, posts to #FediBlock come with receipts – links or screenshots to posts or site rules that justify the call for widespread blocking – although sometimes the name of the domain is enough.

#FediBlock continues to be a useful channel for sharing this information, although it has its limits. For one thing, anybody can post to a hashtag, so without knowing the reputation of the person making a post to the hashtag it's hard to know how much credibility to give the recommendation. And Mastodon's search functionality has historically been very weak, so there's no easy way to search the #FediBlock hashtag to see whether specific instances have been mentioned. Multiple attempts to provide collections of #FediBlock references (without involving or crediting its creators) have been abandoned; without curation, a collection isn't particularly useful – and can easily be subverted.

So as of today, there's often no good answer to questions like "why has instance X been widely blocked?" or "instance X has been blocked for hate speech, but was it really hate speech or just a moderator of another instance with a grudge?" Long-time admins know about a few low-profile well-curated sites that record some vetted #FediBlock entries – including Seirdy's incredibly valuable Receipts listing, covering most of the worst-of-the-worst. Google and other search engines have partial information as well, but it's all very time-consuming and hit-or-miss. 2023 has seen the emergence of instance catalogs like The Bad Space and Fediseer that collect more of this information, although as I discuss in It’s possible to talk about The Bad Space without being racist or anti-trans – but it’s not as easy as it sounds, they have also sparked a lot of resistance.

And receipts aren't a panacea. Some of the incidents that lead to instances being blocked can be quite complex, so receipts are likely to be incomplete at best. As Sven Slootweg points out, "a lot of bans/defederations/whatever are not handed out for specific directly-observable behaviour, but for the offender's active refusal to reflect or reconsider when approached about it," which means that to understand the decision "you actually need to do the work of understanding the full context."

In other situations, providing receipts can open up the blocklist maintainer (or people who had reported problems or previously been targeted) to legal risk.

"[W]hen the people who were being harassed provided receipts, they were accused of 'screenshot dunking'. The people who were harassing them sought any reason, however tenuous, to dismiss their receipts or to call into question their credibility or attack and bully them into eventually just not posting receipts. This was deliberate, and it happened extensively, and the result is that many folk on fedi are now understandably reluctant to share receipts because of the abuse and backlash they received for doing it the first time."

– mastodon.art moderator Welsh Pixie, discussing the role of receipts in the Play Vicious harassment

Still, despite their limitations, receipts can be very useful in many circumstances; I (and others) frequently look at Seirdy's list.

Instance-level federation decisions reflect norms, policies, interpretations, and (sometimes) strategy

For some instances, defederating from Gab was based on norms: we don't tolerate white supremacists, Gab embodies white supremacy, so we want nothing to do with them. For others, it was more a matter of safety: defederating from Gab cuts down on harassment. Some went further: two-level defederation, defederating from any instance that federates with Gab as well as defederating Gab.

Spamming #FediBlock is a good example of a situation where disagreement on a norm relates to Only Brown Mastodon's point above about different views of defederation. Some people see spamming #FediBlock as interfering with a safety mechanism created by a queer Afro-Indigenous woman and used by many instance admins to help protect people against racist abuse – so grounds for defederation if admins don't take action. Others see spamming #FediBlock as a protest against a mechanism they don't like, or just something to do for lulz, so see these defederations as unfair and punitive.

Even when there's apparent agreement on a norm, interpretations are likely to differ. Consider the situation Mahal discusses above. There's wide agreement on the fediverse that antisemitism is bad; as Mahal says, people making real antisemitic comments and resorting to hate speech "absolutely deserve the boot." But what happens when somebody makes a post about the situation in Gaza that Zionist Jews see as antisemitic and anti-Zionist Jews don’t? If the moderators don’t take the posts down, are they being antisemitic? Conversely, if the moderators do take them down, are they being anti-Palestinian? Is defederation (or limiting) appropriate – or is calling for defederation antisemitic? To me, as an anti-Zionist Jew, the answers seem clear;16 once again, though, opinions differ.

And (at the risk of sounding like a broken record) in many situations, moderators – or people discussing moderator decisions – don't have the knowledge to understand why something is racist. Consider this example, from @futurebird@sauropod.win's excellent Mastodon Moderation Puzzles.

"You get 4 reports from users who all seem to be friends all pointing to a series of posts where the account is having an argument with one of the 4 reporters. The conversation is hostile, but contains no obvious slurs. The 4 reports say that the poster was being very racist, but it's not obvious to you how."

As a mod what do you do?

I saw a spectacular example of this several months ago, with a series of posts from white people questioning an Indigenous person's identity, culture, and lived experiences. Even though it didn't include slurs, multiple Indigenous people described it as racist ... but the original posters, and many other white people who defended them, didn't see it that way. The posts eventually got taken down, but even today I see other white people characterizing the descriptions of racism as defamatory.

Discussions often become contentious

So discussions about whether defederation (or limiting) is appropriate often become contentious in situations when ...

- an instance's moderators frequently don't take action when racist, misogynistic, anti-LGBTQ+, antisemitic, anti-Muslim, or casteist posts are reported – or only take action after significant pressure and a long delay

- an instance hosts a known racist, misogynistic, or anti-LGBTQ+ harasser

- an instance's admin or moderator is engaging in – or has a history of engaging in – harassment

- an instance's admin or moderator has a history of anti-Black, anti-Indigenous, or anti-trans activity

- an instance's members repeatedly make false accusations that somebody is racist or anti-trans

- an instance's members try to suppress discussions of racist or anti-trans behavior by brigading people who bring the topics up (or spamming the #FediBlock hashtag)

- an instance's moderators retaliate against people who report racist or anti-trans posts

- an instance's moderator, from a marginalized background, is accused of having a history of sexual assault – but claims that it's a false accusation, based on a case of mistaken identity

- an instance's members don't always put content warnings (CWs) on posts with sexual images for content from their everyday lives17

Similarly, there's often debate about if and when it's appropriate to re-federate. What if an instance has been defederated because of concerns that an admin or moderator is a harasser who can't be trusted, and then the person steps down? Or suppose multiple admittedly-mistaken decisions by an instance's moderators that impacted other instances lead to them being silenced, but then a problematic moderator leaves the instance and they work to improve their processes. At what point does it make sense to unsilence them? What if it turns out the processes haven't improved, and/or more mistakes get made?

Instances' decisions to preemptively block Meta's new social network Threads have also proved very contentious. On the one hand, as Why block Meta? discusses, there are strong safety- and norms-based arguments for doing so, given Meta's tolerance of anti-trans hate groups and white supremacists, history of illegal behavior, experiments on their users, and contributions to genocide. Why the Anti-Meta Fedi Pact is good strategy for people who want the fediverse to be an alternative to surveillance capitalism makes the case that blocking Meta is also good strategy. On the other hand, the cis guys I quote in Two views of the fediverse see it differently. Dan Gillmor, for example, argues that "preemptively blocking them -- and the people already using them -- from your instance guarantees less relevance for the fediverse." John Gruber goes farther, describing the Anti-Meta Pact as "petty and deliberately insular" and saying that any instance that blocks Meta will be an "island of misfit loser zealots." And Chris Trottier dismisses the safety argument, suggesting that "if your community can’t survive Meta using ActivityPub, then it doesn’t deserve to exist."

Transitive defederation is especially contentious

Transitive defederation – defederating from all the instances that federate directly or indirectly with a toxic instance – is particularly controversial. Is it grounds for defederation if an instance federates with a white supremacist instance like Stormfront or Gab, or an anti-trans hate instance like kiwifarms? Derek Caelin's Decentralized Networks vs The Trolls quote from mastodon.technology's admin Ash Furrow reflects safety, norms, and strategy:

"Setting up two degrees of separation from Gab is a rare step, but Furrow sees it as a necessary step, since through them negative content might seep into the instance he maintains.

"It’s either the case that they agree and they’re like ‘oh wow, I don’t want to be associated with this at all’ or they see it in sort of free speech absolutionist terms....And, you know, I can kind of see where they’re coming from – and listen, I’m Canadian, I’m not really a very vocal person, I don’t like confrontation – but I’ll tell them, ‘is federating with Gab more important than federating with Mastodon.technology?’ It’s a decision that I put in their field.""

As always, opinions differ. Many see federating with an instance that tolerates white supremacists and transphobia as tolerating white supremacists and transphobes (and thus in conflict with most fediverse instances' stated values); others don’t. Some agree that it’s tolerating white supremacists and transphobes but don’t see that as grounds for defederation. Others see it in terms of a no-platform strategy.
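For readers who think in graphs, here's a rough sketch of what "two degrees of separation" and fully transitive defederation compute. It's an illustration only: `instances_to_block`, the `peers` map, and the `.example` domains are all hypothetical, federation is treated as symmetric, and real admins make these calls one instance at a time rather than by running a traversal.

```python
from collections import deque
from typing import Dict, Optional, Set


def instances_to_block(
    peers: Dict[str, Set[str]],
    toxic: str,
    max_hops: Optional[int] = 1,
) -> Set[str]:
    """Return the toxic instance plus every instance within max_hops of it.

    peers maps each instance to the instances it federates with.
    max_hops=1 gives two-level defederation (the toxic instance and anybody
    federating with it); max_hops=None follows federation links without limit.
    """
    # Treat federation as symmetric for this sketch.
    graph: Dict[str, Set[str]] = {}
    for a, bs in peers.items():
        graph.setdefault(a, set())
        for b in bs:
            graph[a].add(b)
            graph.setdefault(b, set()).add(a)

    blocked = {toxic}
    queue = deque([(toxic, 0)])
    while queue:
        node, hops = queue.popleft()
        if max_hops is not None and hops >= max_hops:
            continue  # far enough out; don't expand this instance's peers
        for neighbor in graph.get(node, set()):
            if neighbor not in blocked:
                blocked.add(neighbor)
                queue.append((neighbor, hops + 1))
    return blocked


peers = {
    "toxic.example": {"b.example"},
    "b.example": {"c.example"},
    "d.example": set(),
}
two_level = instances_to_block(peers, "toxic.example")
fully_transitive = instances_to_block(peers, "toxic.example", max_hops=None)
```

In a well-connected network the unlimited version sweeps in almost everybody, which goes some way to explaining why the further out the blocking reaches, the more heated the argument gets.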

An earlier version of this article suggested that "norm-based transitive defederation can be especially contentious," but as the discussions about Meta's Threads highlight, safety-based transitive defederation can also be especially contentious. Erin Kissane's excellent Untangling Threads recommends that people wanting "reasonably sturdy protection" from hate groups on Threads consider being on an instance "that federates only with servers that also refuse to federate with Threads." My own Why just blocking Meta’s Threads won’t be enough to protect your privacy once they join the fediverse similarly focuses on safety benefits. Tim Chambers, however, describes providing this reasonably sturdy protection as "the nuclear option." On We Distribute, Sean Tilley says "The problem is that there’s a movement of people who want to block anything and anyone that doesn’t choose to block Threads themselves" and notes that "a lot of people worry that this hysteria could lead to a massive fragmentation of the Fediverse, effectively doing Meta’s job for free."

To be continued!

The discussion continues in

- Blocklists in the fediverse

- It’s possible to talk about The Bad Space without being racist or anti-trans – but it’s not as easy as it sounds

- Compare and contrast: Fediseer, FIRES, and The Bad Space

- Steps to a safer fediverse

- ...

- A golden opportunity for the fediverse – and whatever comes next

Here's a sneak preview of the discussions of blocklists

With hundreds of problematic instances out there, blocking them individually can be tedious and error-prone – and new admins often don't know to do it. Starting in early 2023, Mastodon began providing the ability for admins to protect themselves from hundreds of problematic instances at a time by uploading blocklists (aka denylists)....

[W]idely-shared blocklists introduce risks of major harms – harms that are especially likely to fall on already-marginalized communities....

It would be great if Mastodon and other fediverse software had other good tools for dealing with harassment and abuse to complement instance-level blocking – and, ideally, reduce the need for blocklists. But it doesn't, at least not yet....

So despite the costs of instance-level blocking, and the potential harms of blocklists, they're the only currently-available solution for dealing with the hundreds of Nazi instances – and thousands of weakly-moderated instances, including some of the biggest, where moderators frequently don't take action on racist, anti-LGBTQIA2S+, antisemitic, anti-Muslim, etc. content. As a result, today's fediverse is very reliant on them.

To see new installments as they're published, follow @thenexusofprivacy@infosec.exchange or subscribe to the Nexus of Privacy newsletter.

Notes

1 According to fedidb.org, the number of monthly active fediverse users has decreased by about 20% since January 2022.

2 I'm using LGBTQIA2S+ as a shorthand for lesbian, gay, gender non-conforming, genderqueer, bi, trans, queer, intersex, asexual, agender, two-spirit, and others (including non-binary people) who are not straight, cis, and heteronormative. Julia Serano's trans, gender, sexuality, and activism glossary has definitions for most of these terms, and discusses the tensions between ever-growing and always incomplete acronyms and more abstract terms like "gender and sexual minorities". OACAS Library Guides' Two-spirit identities page goes into more detail on this often-overlooked intersectional aspect of non-cis identity.

3 Which is why the footnote numbers are currently a bit strange: footnotes 4, 5, 6, 7, and 8 are in the yet-to-be-published revised intro. But then, my footnote numbers are often a bit strange ... I'm sure by the time I'm done there will be footnotes with decimal points in the numbers.3.1

3.1 Like this!

8 Virtually all the issues and obvious next steps I discussed in 2017-18's Lessons (so far) from Mastodon remain issues and obvious next steps today.

9 Bonfire's boundaries support includes the ability to limit replies; Streams has supported limiting replies for years; GoToSocial plans to add this functionality early next year. Heck, even Bluesky has announced plans to add this. emceeaich's 2020 github feature request Enable Twitter-style Reply Controls on a Per-Toot Basis includes a comment from glitch-soc maintainer ClearlyClaire describing some of the challenges implementing this in Mastodon; Claire's Federation Enhancement Proposal 5624 and the discussion under it has a lot more detail.

10 If you're on the web, it's on the Settings page under Preferences/Notifications. In the Mastodon mobile app, click on the gear icon at the top right to get to Settings, scroll down to The Boring Zone, click on Account Settings. Once you authorize signing in to your Mastodon account, you get to the Account settings page; then all you need to do is click on the menu at the top right and select Preferences, click on the Menu again and select Notifications, and scroll down to Other notification settings. How intuitive!

And there's a caveat here: if this setting's enabled, Mastodon silently discards DMs, which often isn't what's wanted; auto-notification of "I don't accept DMs" and/or the equivalent of Twitter's message requests would make the functionality more useful.

12 That's right: this valuable anti-harassment functionality has been implemented for six years but Rochko refuses to make it broadly available. Does Mastodon really prioritize stopping harassment? has more, and I'll probably rant about it at least one more time over the course of this series.

13 LIMITED_FEDERATION_MODE. And guess what, there's another caveat: you can't combine LIMITED_FEDERATION_MODE with instance-level blocking.

14 As I said a few months ago, describing an incident where an admin defederated an instance and then on further reflection decided it had been an overreaction,

"[A]fter six years why wasn't there an option of defederating in a way that allows connections to be reestablished when the situation changes and refederation is possible? If you look in inga-lovinde 's Improve defederation UX March 2021 feature request on Github, it's pretty clear that it's not the first time stuff like this happened."

And it wasn't the last time stuff like this happened either. In mid-October, a tech.lgbt moderator decided to briefly suspend and unsuspend connections to servers that had been critical of tech.lgbt, in hopes that it would "break the tension and hostility the team had seen between these connections." Oops. As the tech.lgbt moderators commented afterwards, "severing connections is NOT a way to break hostility in threads and DMs."

15 transmisia – hate for trans people – is increasingly used as an alternative to transphobia. For more on the use of -misia instead of -phobia, see the discussion in Simmons University's Anti-oppression guide.

16 For an in-depth exploration of this topic, see Judith Butler's Parting Ways: Jewishness and the Critique of Zionism, which engages Jewish philosophical positions to articulate a critique of political Zionism and its practices of illegitimate state violence, nationalism, and state-sponsored racism.

17 woof.group's guidelines, for example, don't require CW's on textual posts unless "it's likely to cause emotional distress for a general leather audience" – but others may have different standards for what causes emotional distress.

18 Should the Fediverse welcome its new surveillance-capitalism overlords? Opinions differ! has more on various opinions on whether or not to block Meta

Update log

ongoing: minor fixes and additions in response to feedback and as I discover new links

October 2024: change title to specifically refer to ActivityPub Fediverse, update terminology in intro section to start bringing it more in line with There are many fediverses