Social threat modeling and quote boosts on Mastodon

How can Mastodon improve tools for preventing, and defending against, harassment and abuse?

Last updated December 30, 2024 – see the update log for details.

Social threat modeling applies structured analysis to threats like harassment, abuse, and disinformation. You'd think this would happen routinely, but no. A trip down memory lane looks at a couple of high-profile missteps by Twitter and how social threat modeling could have avoided them.

Social threat modeling can also be very useful for Mastodon. The early days featured significant innovations like instance blocking and local-only posts; since then, though, the lack of progress has been striking. As of early 2023, Mastodon lacks some of Twitter's basic anti-harassment functionality (protected profiles, the ability to limit who can respond to a post), and local-only posts still haven't been accepted into the main code base. And while features like full text search haven't been implemented yet due to concerns about the possibility of toxicity and abuse, it's not clear that this leads to significant additional safety.

As Mastodon gets broader adoption, it's more and more important to do some deeper analysis about what can be done to prevent or mitigate these threats. This post only scratches the surface, but hopefully gives enough of an idea of the power of the approach that people can take it forward. And I certainly hope that any companies or government agencies adopting Mastodon are putting some resources into looking at these issues as well.

That all sounds pretty abstract, so let's make it concrete by talking about everybody's favorite Mastodon topic: quote boosts!

QTs, QBs, reblogs, wtf?

Quote Toots are coming. The choice is to make them benign, or not to.

– Isabelle Moreton (@epistemophagy)

"Quote boosts", the not-yet-widely-implemented equivalent of Twitter's "quote tweets" and Tumblr's "reblog with comment," are one of the hottest topics these days on Mastodon, the decentralized open-source social network that's getting a lot of attention.

Terminology note: Mastodon's "posts", the equivalent of Twitter's tweets and Tumblr's blogs, were called "toots" until November 2022, so "quote toot" and "quote boost" are synonyms. I'll use "quote boost" and "QB" unless I'm quoting somebody in which case I'll use whatever language they use.

On the one hand there are lots of positive uses of quote tweets (QTs) and reblogs. They're useful for helping people understand discussions they don't have all the background for, valuable for helping highlight similar experiences, great for calling out hypocrisy ("This you?"), a core component of Black digital practices including call-and-response patterns, ... the list goes on. On the other hand, they're also used for targeting people for harassment, taking quotes out of context, and spreading disinformation.

Last month's Black Twitter, quoting, and white views of toxicity on Mastodon discusses the topic in more detail. Hilda Bastian's Quote Tweeting: Over 30 Studies Dispel Some Myths on Absolutely Maybe is a detailed look at the research on Twitter, although little if any of it gets down to the level of detail we need to consider to prevent harassment and abuse.1

How to balance the positives and negatives?

For the last five years, Mastodon has tried to avoid the problem by only supporting "boost" (the equivalent of Twitter's "retweet" and Tumblr's "reblog") but not supporting quote boosts (QBs). You can work around this by doing a "screenshot-and-link": sharing an image of the post you're quoting along with a link. It's a lot more work, though, so it introduces friction. Directly supporting QBs will (hopefully) make it easier, reducing friction. That's good for the positive uses, but could increase harassment and abuse. The Appendix in last month's Black Twitter, quoting, and white views of toxicity on Mastodon goes into detail comparing screenshot-and-link to Twitter's QTs, including a discussion of friction.

Mastodon forks like fedibird, treehouse, and Hometown already support them, and compatible fediverse applications like Misskey, Calckey, and Friendica have similar constructs. Mastodon BDFL (Benevolent Dictator for Life) Eugen Rochko has recently said that they're working on QBs, so it's probably just a matter of time until they make it into mainline Mastodon in some form or another ... but just what they'll look like when they're implemented is still completely up in the air.

Update, December 2024: In April 2024, Mastodon got funding from NLNet to implement quote posts. Specification and implementation began in late summer, and it's expected to ship in the upcoming release 4.4.

Dogpiling 101

Let's look in more detail at one way that quote boosts could be used as part of harassment: encouraging dogpiling – getting a bunch of accounts to target somebody. Of course, there are plenty of ways to dogpile without quote boosts – Dogpiling, weaponized content warning discourse, and a fig leaf for mundane white supremacy discusses a 2017 episode on Mastodon, and it's happened plenty of times since then. Introducing quoting functionality can potentially make matters worse, which would be bad, and we'll get to that in the next section. First though let's look at dogpiling without quoting.

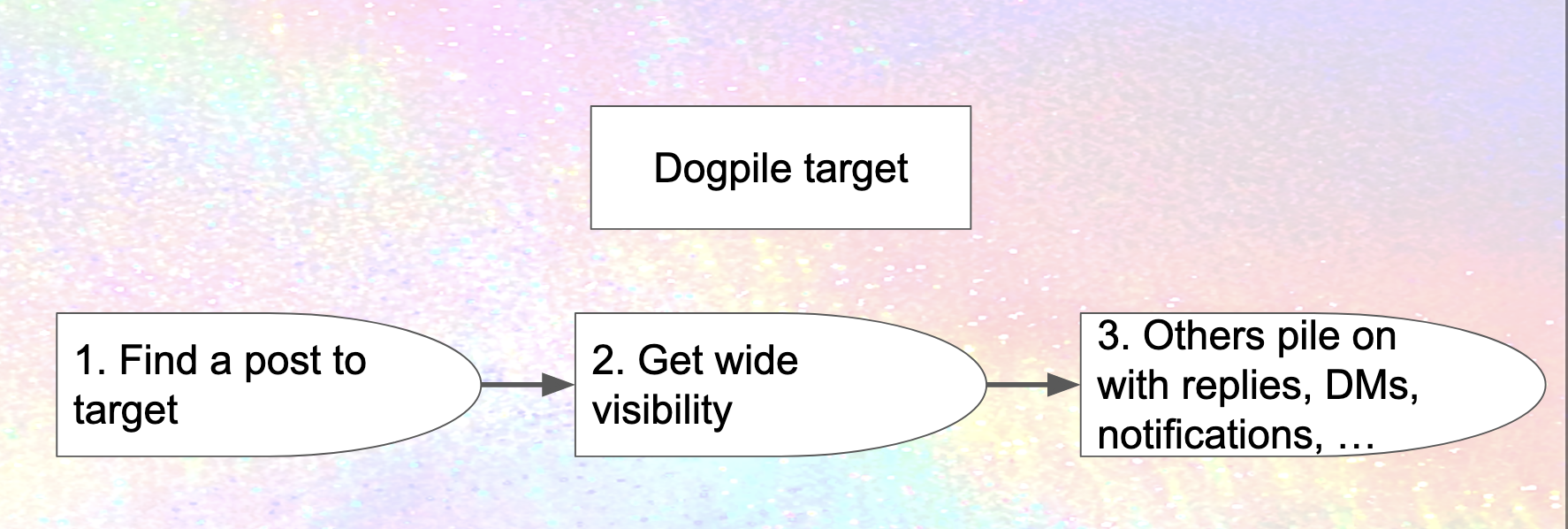

From an attacker's perspective, you can think of dogpiling as a three-step process:

- Find a post from your target to point people to

- Get broad visibility on that post in ways that encourage people to respond hostilely

- Some percentage of the people who see the post do in fact respond hostilely, bombarding the target with nasty replies, direct messages, or notifications

How to prevent this from happening?

Well, if the attacker can't see your posts, then they can't target them; so if you know in advance that somebody's going to attack you, you can block them. That's not always the case though – and even if you block an attacker's account, they can set up another, or enlist a friend to help. Restricting visibility on posts (making them followers-only, for example) provides broader protection. Of course, there's a downside to this: it cuts down your ability to communicate with people who aren't your attackers. Still, even though these defenses aren't perfect, they're quite useful.

Mastodon has support for blocking, both of individual accounts and entire instances. However, support for limiting visibility is a lot weaker than it could be.

- "Followers-only" posts seem like they provide a lot of protection, but unless you've turned on "approve follows", an attacker can just follow you and boom they have access to all your followers-only posts.

- Additional levels of visibility such as "mutual followers" (people who you're following who are also following you) or "trusted accounts" could allow for even more protection.

- Forks like Glitch and Hometown support "local-only posts," which are only visible to people running on the same instance as you; this provides significant protection if you're on a well-moderated instance that doesn't have open enrollments, but unfortunately this functionality isn't available in mainline Mastodon so most users can't take advantage of it.

So even though we haven't gotten to quote boosts yet, we've identified a couple of areas for improvement.
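To make the visibility discussion above a little more concrete, here's a minimal sketch in Python of how these levels could be checked. It's a toy model, not how Mastodon actually implements visibility: the `Account` class, the `Visibility` enum, `request_follow`, and `can_view` are all hypothetical names I've made up for illustration, and "mutuals only" is a proposed level that doesn't exist in mainline Mastodon.

```python
from dataclasses import dataclass, field
from enum import Enum


class Visibility(Enum):
    PUBLIC = "public"
    FOLLOWERS_ONLY = "followers_only"
    MUTUALS_ONLY = "mutuals_only"  # hypothetical level, not in mainline Mastodon
    LOCAL_ONLY = "local_only"      # only in forks such as Glitch and Hometown


@dataclass
class Account:
    handle: str                    # e.g. "alice@safe.example"
    approves_follows: bool = False
    followers: set = field(default_factory=set)
    following: set = field(default_factory=set)

    @property
    def instance(self) -> str:
        return self.handle.split("@", 1)[1]


def request_follow(follower: Account, target: Account) -> bool:
    """Return True if the follow takes effect immediately."""
    if target.approves_follows:
        return False  # the request waits for manual approval
    target.followers.add(follower.handle)
    follower.following.add(target.handle)
    return True


def can_view(viewer: Account, author: Account, visibility: Visibility) -> bool:
    """Decide whether viewer may see a post by author at the given visibility."""
    if viewer.handle == author.handle or visibility is Visibility.PUBLIC:
        return True
    if visibility is Visibility.FOLLOWERS_ONLY:
        return viewer.handle in author.followers
    if visibility is Visibility.MUTUALS_ONLY:
        return (viewer.handle in author.followers
                and viewer.handle in author.following)
    if visibility is Visibility.LOCAL_ONLY:
        return viewer.instance == author.instance
    return False
```

In this model, an attacker who calls `request_follow` on an account that hasn't turned on follow approval immediately passes the `FOLLOWERS_ONLY` check, but still fails the `MUTUALS_ONLY` and `LOCAL_ONLY` checks.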

How to get wide visibility on a post?

Next let's talk about the situation where we haven't been able to prevent the attacker from finding a post, and they want to get wide visibility for it. Even without quote boosts, they've got a whole range of techniques available today:

- Boosting the post

- Replying, and boosting the reply

- Sending a link to the post to people via email, a chat message, a DM on some other social network along with instructions about what to do

Mastodon doesn't currently let people control who can boost or reply to your posts. Once you know that a post is getting a lot of attention, it would be great to lock it down in some way ... but that's not an option. The only control you have is the ability to delete the post2 – which might well be exactly what the people who are dogpiling you want. Once again, there's a lot of room for improvement.

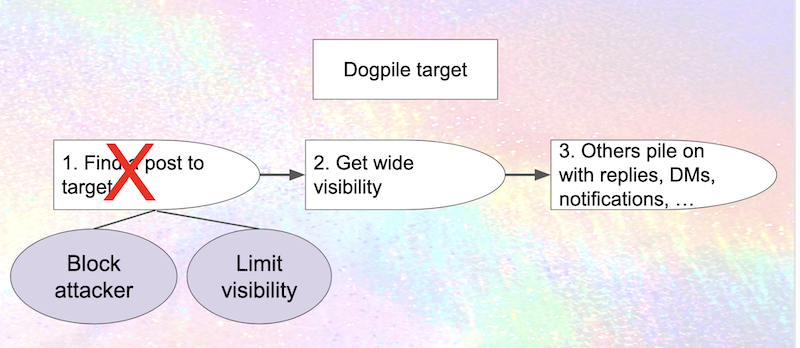

Let's skip over the "get wide visibility" bit for now, and look at the third aspect of the attack. Once the post has broad visibility, are there ways to prevent people from piling on? In principle, yes. Limiting the visibility of the post, or who can reply or send you notifications, cuts down the number of dogpilers. And in the worst case, having a "shields up" mode that locks down your account – the equivalent of Twitter's "protected profile" – insulates you from the dogpiling.

Mastodon does allow you to limit who can send you notifications, but unfortunately none of these other defenses are currently available. Limiting visibility would be very helpful, but (in addition to the limitations discussed above) you can't change the visibility of a post after it's been created.3 Unlike Twitter, Mastodon doesn't have the ability to limit who can reply to a post; and there's no equivalent of Twitter's "protected mode".

Of course, if you like to interact broadly, shifting to protected mode makes your account a lot less useful; and (just like deleting a post), it's giving ground to the attacker. So it's certainly not ideal. Still, this kind of "shields up" functionality is incredibly valuable when you're under attack. Mastodon's instance boundaries could allow for more gradations of "shields up"; for example, limiting access only to people on the same instance – or on a list of "trusted instances" (along the lines of Akkoma's "bubble").
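Here's a rough sketch of what gradations of "shields up" could look like as a filter on incoming replies and notifications. None of this exists in Mastodon today; the `Shields` modes and the `allow_interaction` function are hypothetical, and the set of trusted instances is assumed to be something the user or their admin maintains.

```python
from enum import Enum


class Shields(Enum):
    DOWN = "down"                            # normal operation
    TRUSTED_INSTANCES = "trusted_instances"  # a "bubble" of trusted instances
    LOCAL_INSTANCE = "local_instance"        # same instance only
    FOLLOWERS_ONLY = "followers_only"        # existing followers only


def allow_interaction(sender, *, mode, my_instance, followers, trusted_instances):
    """Return True if a reply or notification from sender should get through.

    sender is a handle such as "bob@friendly.example".
    """
    sender_instance = sender.split("@", 1)[1]
    if mode is Shields.DOWN:
        return True
    if mode is Shields.FOLLOWERS_ONLY:
        return sender in followers
    if mode is Shields.LOCAL_INSTANCE:
        return sender_instance == my_instance
    if mode is Shields.TRUSTED_INSTANCES:
        return sender_instance == my_instance or sender_instance in trusted_instances
    return False
```

The point of having several modes is that the person under attack can pick the least restrictive one that stops the pile-on, instead of having to choose between fully open and fully locked down.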

Dogpiling with quoting

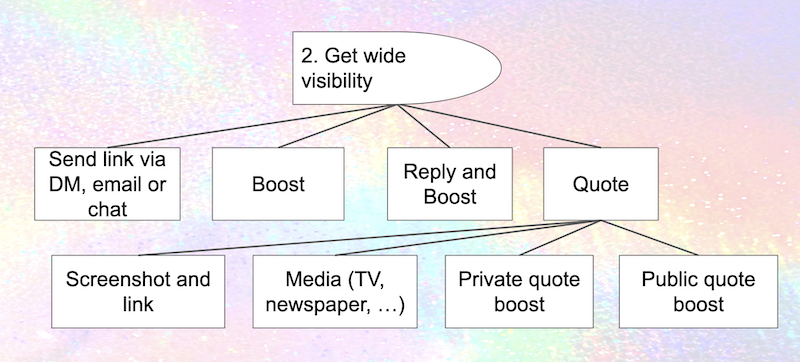

Quoting gives the attacker another technique for getting visibility on a post ... or, more accurately, several additional techniques, because there's more than one way to quote a post. Here's one way to show it visually.

Today, without any support for quote boost functionality, it's still fairly easy to approximate a quote boost by doing a screenshot and sharing it along with a link. It's more work than a quote boost, but from an attacker's perspective it also has the advantage that the person being targeted doesn't get a notification and so doesn't know they're being quoted. Attackers who are journalists (or know journalists) have another powerful option: quote the tweet in a media outlet with broad visibility. Anybody who's been targeted by Fox News knows how devastating this can be.

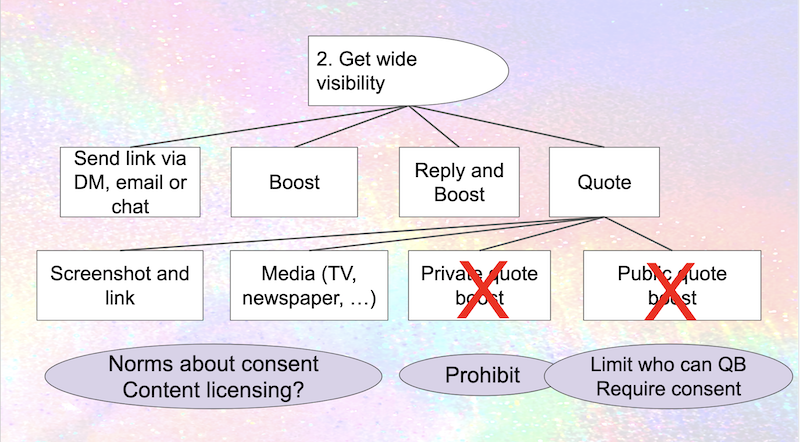

Quote boosts add a couple more options. A private quote boost is one that the target can't see – but the attacker and their friends can. Claire Goforth's Why users want Twitter to ban private quote tweets on the Daily Dot describes the problems this creates. If and when Mastodon implements quote boosts, it should prohibit private quote boosts.

How to limit the impact of public quote boosts? One straightforward and important thing to do is prohibit quote boosts that increase the visibility of a post:

- If a post is followers-only, it should be impossible to make a public or unlisted quote boost.

- If a post is local-only, it should be impossible to make a quote boost that's not local-only.

- If an account has chosen to try to keep their posts out of search engines, the quote boost should also be withheld from search engines (or quote boosting shouldn't be allowed unless the quoter also keeps their posts from being indexed by search engines).

- Quote boosts by people with huge numbers of followers can significantly increase visibility, so does it make sense to put some limits on that – for example, you can't quote boost a post by somebody who has fewer than 10% of the followers that you do?4
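The rules in this list can be sketched as a single check that runs before a quote boost is allowed. This is a minimal illustration under my own assumptions, not a proposal for how Mastodon should implement it: the `Post` and `QuoteRequest` classes, the `AUDIENCE_RANK` ordering, and `quote_boost_problems` are all hypothetical, and the 10% follower ratio is just the example figure from the last bullet.

```python
from dataclasses import dataclass

# Lower rank = narrower audience. This ordering is an assumption.
AUDIENCE_RANK = {"followers_only": 0, "unlisted": 1, "public": 2}


@dataclass
class Post:
    visibility: str               # "followers_only", "unlisted", or "public"
    local_only: bool = False
    author_noindex: bool = False  # author opted out of search engine indexing
    author_followers: int = 0


@dataclass
class QuoteRequest:
    visibility: str
    local_only: bool = False
    quoter_noindex: bool = False
    quoter_followers: int = 0


def quote_boost_problems(original, quote, min_follower_ratio=0.10):
    """Return the reasons a quote boost would widen the original's audience.

    An empty list means none of the rules object to the quote.
    """
    problems = []
    if AUDIENCE_RANK[quote.visibility] > AUDIENCE_RANK[original.visibility]:
        problems.append("quote is more visible than the original post")
    if original.local_only and not quote.local_only:
        problems.append("local-only post quoted outside the instance")
    if original.author_noindex and not quote.quoter_noindex:
        problems.append("quote would expose a non-indexed post to search engines")
    if original.author_followers < min_follower_ratio * quote.quoter_followers:
        problems.append("quoter's audience is far larger than the author's")
    return problems
```

For example, a public quote of a followers-only post returns the "more visible" problem, while a followers-only quote of the same post returns an empty list. Note that this sketch only looks at quotes that widen the audience; it says nothing about private quote boosts, which need their own rule.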

Another possibility is to add a new option to posts saying whether or not it can be QB'ed, along with defaults for public and unlisted posts. Or the implementation could go even further, and require the quoter to ask for consent before quoting a post (or maybe allow people you're following to quote without consent but require it from others).5

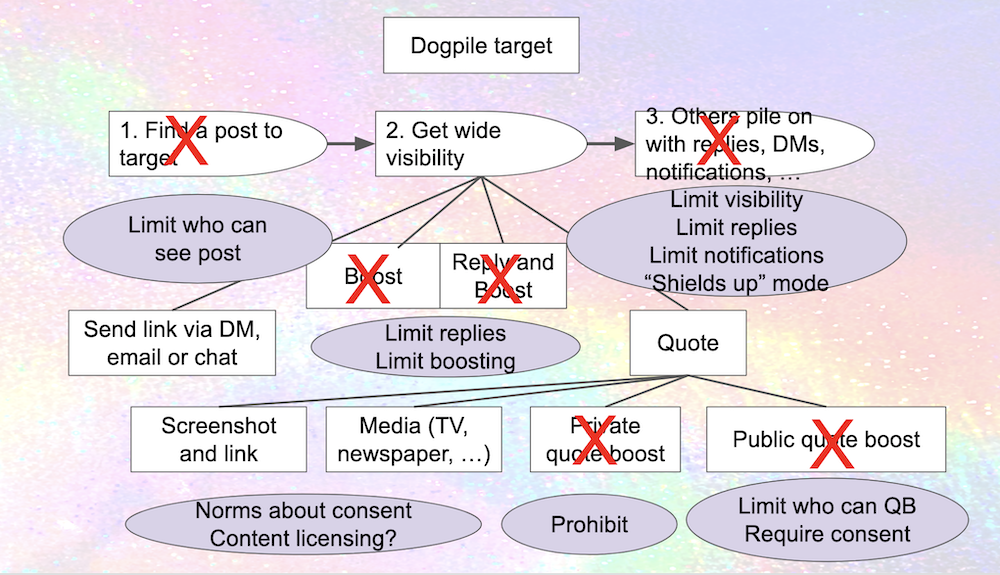

Combining these last several points visually, we get a diagram that looks something like this.

Update, December 2024: Bluesky implemented an additional mitigation I hadn't considered: the ability to detach a quote post. And since Bluesky treats links to posts as quote posts, the mitigation also applies to dogpiling via "screenshot and link".

These limitations could have potential downsides as well. If a politician or some other public figure says something racist or anti-LGBTQ, should they be able to prevent people from quoting it? Mastodon's content warning (CW) norms are often racialized, with people of color getting told they need to put CWs on their personal experiences to avoid making white people uncomfortable. I could certainly picture similar things happening with requiring consent to quote. For that matter, unless there are limits on who can ask for consent to quote, it's possible that bombarding somebody with consent requests could become a harassment technique in its own right. To be clear, though, these aren't necessarily reasons not to do these things, just factors that need to be considered in any design.

Of course, if people can't use quote boosts to do this kind of quoting, they'll fall back on screenshot-and-link or (if they have access) a media quote. There's no obvious way to prevent these. Norms about consent to be quoted could be somewhat helpful even if attackers ignored them ... once again, though, there are potential downsides. Still, as Leigh Honeywell discusses in Another Six Weeks: Muting vs. Blocking and the Wolf Whistles of the Internet, a lot of harassment is low-grade, opportunistic, and not terribly persistent. Screenshot-and-link is more effort than a quote boost, so even if it remains an option, reducing abuse possibilities for quote boosts has value.

There's a lot more to learn!

The quick analysis I've done here only scratches the surface. One important limitation of the discussion so far is that it's only focused on what people being dogpiled (or concerned about the risk) can do. Moderators and instance admins can also play a role – helping protect site members who are being dogpiled, taking early action to defuse a dogpiling-in-progress, encouraging norms and setting defaults that make unintended dogpiling less likely, and so on. How can Mastodon's software, moderator funding and training, and communications channels between admins and moderators better support this work?

For example, when dealing with a sustained dogpiling attack coming from multiple instances, is there an instance-level "shields up" mode limiting interaction to a short list of trusted instances? Or take @cd24@sfba.social's observation in Some thoughts on Quote Boosts that when dealing with screenshot-and-link quoting, "the single most crucial missing feature from the current moderation interfaces is contextualization." Should moderators be able to see what posts on their instance link to a post (whether or not they're quote boosts)?

On the other hand, moderators can also be dogpiled. Back in 2018, Rochko's view of the Battle of Wil Wheaton was that "An admin was overwhelmed with frivolous reports about him and felt forced to exile him." Opinions differ on whether the reports were frivolous (workingdog_'s Twitter thread provides important context omitted from most mainstream narratives), but there's no question that Mastodon's moderation tools didn't give enough support in this situation; Nolan Lawson's Mastodon and the challenges of abuse in a federated system has some good perspectives. I'm not sure how much tools have evolved since then, but this is another good area to focus on in threat modeling.

And Block Party has support for "helpers," who (with permission) can mute and block on somebody's behalf, as well as other tools to help filter out unwanted Twitter @mentions and use Twitter as normal. How can Mastodon support Block Party or equivalent tools? Are there ways to involve helpers in collective defense at the instance level? What about groups of instances working together?

On a different front ... almost all of the discussion around quote boosts has been in terms of the Twitter experience. What about Tumblr? Tumblr's reblogs put the reblogger's comment at the bottom and provide a chain of reblogs, as opposed to Twitter's QTs where it's at the top and you have to click through to multiple tweets to see the full context. The Mastodon treehouse fork implements QBs that have commentary afterwards (Tumblr-like) but only include the first part of the post being quoted. What impact do these and other differences have – and what other variations could be worth exploring?

At an even deeper level, as Erin Sanders describes in A better moderation system is possible for the social web, the ActivityPub protocol underlying Mastodon and other fediverse software lets anybody send messages to anybody else's inbox by default. As Sanders says, perhaps letting any random person send us a message is not ideal. Could a more consent-based model cut down the risks of dogpiling and other harassment? Sanders' "letters of introduction", and the object capability approaches Christine Lemmer-Webber outlines in OcapPub: Towards networks of consent, are two interesting paths forward here.

As they say in research papers, these are all interesting grounds for future work.

Summing up

After going down into the weeds of quoting, let's take a step back and look at some of the key potential mitigations we've identified – for quote boosts and for dogpiling more generally.

- Adding more nuance to post visibility: local-only posts (currently only available in forks), "mutual follows", "trusted followers", "bubble" of trusted instances.

- Make it clear that "follower-only" posts don't provide protection unless you also require approval to follow.

- Changing visibility on posts after they have been made. This is likely to be imperfect for federated posts, but should be robust for local-only posts.

- Adding the ability to limit who can boost a post.

- Adding the ability to limit who can reply to a post.

- Prohibiting quote boosts that increase visibility of followers-only, unlisted, local-only, or non-indexed posts.

- Adding the ability to limit who can quote-boost posts, and perhaps also requiring consent in some cases.

- Adding norms about consent for quoting by journalists and via screenshot-and-link

- "Shields up" modes so that people can protect themselves when an attack is in progress: followers-only, local-instance only, a "bubble" of trusted instances

- Potential improvements in collective response tools, protocols, communications channels, training, and funding for moderators, admins, helpers, and instances

While a couple of these specifically relate to quote boost implementation, the vast majority don't. So at least to me, one big takeaway from this is that quote boosts are only a relatively small part of the overall dogpiling problem. While it's important to get quote boost design right, it's even more important to look at the deeper issues – including the many ways Mastodon currently provides less protection than Twitter.

Interestingly, most of these ideas have already been proposed in GitHub issues and discussions on Mastodon ... but they haven't been prioritized yet. So another big takeaway is that if Mastodon – or any other fediverse implementation – wants to be a significantly safer environment than Twitter, that prioritization needs to change.

The quick analysis I've done here only scratches the surface; there are additional mitigations and dogpiling techniques I didn't cover, and dogpiling's only one of many aspects of harassment to consider. What's really needed is an organized social threat modeling effort – and that's going to require funding.

And as Afsaneh Rigot discusses in Design From the Margins, centering the marginalized people directly impacted by design decisions leads to products that are better for everybody.

With social threat modeling, this means working with, listening to, and following the lead of, AND FUNDING:

- marginalized communities who make extensive use of quoting, especially Black Twitter and disability Twitter

- people and communities who are the targets of harassment using quoting along with other tactics, especially women of color, trans and queer people and disabled people

Hopefully that will happen as more large corporations, non-profits, activism groups, and government agencies adopt Mastodon and other compatible platforms.

A funded project would also include budget for some real threat modeling tools ... these diagrams were just done with Google Slides, which is fine for simple stuff but only gets you so far. Still, I figured I'd wrap things up with a diagram that attempts to put it all together.

Image description: At the top, a box saying "dogpile target". Below, boxes with words: "1. Find a post to target" with a red X over it and a circle below saying "Limit who can post"; "2. get wide visibility" with a complex diagram below it; "3. Others pile on with replies, DMs, notifications ..." with a red X over it and a circle below saying "Limit visibility, limit replies, limit notifications, shields up mode".

Below "Get Wide visibility", two boxes side-by-side, both with red X's over them: "Boost" and "Reply and boost". Below those boxes, a circle with "Limit replies; Limit boosting". To the left, a box with "Send link via DM, email, or chat". To the right, a box with "Quote" on it, and a complex diagram below it.

Below the "quote" box, four boxes. On the left, "Screenshot and Link" and "Media (TV, newspaper)" with a circle below saying "Norms about consent. Content licensing?" Next, "Private quote boost" with a big red X, and a circle below it saying "Prohibit." On the right, "Public quote boost" with a big red X, and a circle below saying "Limit who can QB; Require consent."
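The same diagram can also be written down as a small attack tree, which is roughly what a dedicated threat modeling tool would let you do. The sketch below is only an illustration: the `Node` class and `unmitigated_leaves` function are hypothetical, the tree is my transcription of the image description above, and it naively treats any listed mitigation as full coverage, which overstates how much protection weak mitigations like norms actually give.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    mitigations: list = field(default_factory=list)
    children: list = field(default_factory=list)


def unmitigated_leaves(node, inherited=False):
    """Return names of leaf attack steps with no mitigation on them or any ancestor."""
    covered = inherited or bool(node.mitigations)
    if not node.children:
        return [] if covered else [node.name]
    found = []
    for child in node.children:
        found.extend(unmitigated_leaves(child, covered))
    return found


# Transcribed from the image description; mitigation labels come from the circles.
tree = Node("Dogpile target", children=[
    Node("1. Find a post to target", ["Limit who can post"]),
    Node("2. Get wide visibility", children=[
        Node("Boost", ["Limit boosting"]),
        Node("Reply and boost", ["Limit replies", "Limit boosting"]),
        Node("Send link via DM, email, or chat"),
        Node("Quote", children=[
            Node("Screenshot and link", ["Norms about consent"]),
            Node("Media (TV, newspaper)", ["Norms about consent", "Content licensing?"]),
            Node("Private quote boost", ["Prohibit"]),
            Node("Public quote boost", ["Limit who can QB", "Require consent"]),
        ]),
    ]),
    Node("3. Others pile on", ["Limit visibility", "Limit replies",
                               "Limit notifications", "Shields up mode"]),
])

print(unmitigated_leaves(tree))
```

Even this crude version surfaces something the diagram only shows implicitly: sending a link via DM, email, or chat is the one path with no proposed mitigation at all.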

Notes

1 Almost none of the research Bastian cites looks directly at harassment and abuse, let alone examines potential differences in how different kinds of attackers operate over time, as Twitter's affordances, harassment techniques, and the dynamics of overall weaponization have all changed. While there are papers looking at hate speech, and in particular slurs against disabled people and antisemitism, none of the cited research appears to look specifically at harassment of trans people, Black women, and other common targets of harassment.

And some significant harassment techniques using quoting are invisible in the data. One obvious example: as we discuss later in the article, "private QTs" were a major Twitter attack vector for a while. Since they're private, they aren't available in any of the data sets researchers have worked with ... so we have no idea how much they are or aren't used in harassment.

Or hypothetically suppose that Twitter's moderation leaves trans people particularly at risk, and/or anti-trans bigots have refined techniques for weaponizing QTs ... which of the 30+ research papers would reflect this?

2 Unfortunately, once a post has federated to other instances, there's no guarantee you can delete it: buggy or incompatible software on other instances might ignore the deletion request, or if the other instance has defederated from yours they might not even see it. I've found posts from instances that no longer exist still available on other instances, and even in Google searches. This isn't an issue for local-only posts, another reason they're so important.

3 Supporting this once a post has federated to other instances could be very challenging to implement well (and as with deletion, buggy or incompatible instances might just ignore the change in visibility), but it should be straightforward to implement for local-only posts.

4 Of course, attackers could get around this by having somebody with fewer followers QB and then having the high-profile account boost the QB, so it's not clear how much this would help ... but it's worth considering.

5 And unless the software supports a smooth way to discuss consent, requiring people to ask for consent could lead to a lot of posts cluttering up everybody's timeline. "Can I quote this?" "What part of it are you going to quote?" "The first sentence." etc. etc. etc.

Updates

January 16: add references to A better moderation system is possible for the social web, OcapPub: Towards networks of consent, and protocol improvements.

December 30, 2024: add reference to Bluesky "detach quote post" mitigation and Mastodon getting funding for quote posts