HB 1834 (Age verification / Addictive feeds) followup email and updated written testimony for Appropriations

HB 1834 / SB 5708 is an "AG Request" bill, regulating "addictive feeds"; Attorney General Nick Brown has been making some great Instagram Reels about the need for these bills. Last year, SB 5708 passed the Senate, but didn't get a hearing in the House; HB 1834 made it through Rules, but didn't get a floor vote. Since HB 1834 has a fiscal note attached, it has to go through Appropriations again. We had heard that there was going to be an amended version, so I decided to sign in OTHER to the hearing; but the amendment didn't address the bill's problems, so my written testimony was CON. The exec session was a week later, and during that time I realized there were a couple of ways to improve my written testimony. So when I sent follow-on mail to the committee, I included an updated version of my testimony.

Follow-on mail

Chair Ormsby, Ranking Member Couture, and members of the committee,

I'm Jon Pincus of Bellevue, and I run the Nexus of Privacy newsletter. My position on HB 1834 remains CON. Rather than making kids or teens safer, the age verification requirements in Sections 2(1)(b) and 3(2) put kids, teens and adults at risk. I appreciate the Ranking Member’s efforts to explore different approaches with amendments, but please do not advance this bill.

I’ve attached an updated version of my written testimony, which discusses why the replacement Sections 2(1)(b) and 3(2) language fails to address the underlying issues. This version includes an improved discussion of the dismal results of the Australian Age Assurance Technology Trial (AATT) and their implications, and includes an excerpt from EFF’s "Why Isn’t Online Age Verification Just Like Showing Your ID In Person?" Here’s the TL;DR summary:

“Protecting kids’ and teens' safety online is incredibly important, but the age verification requirements of this bill put everybody’s privacy at risk – children and teens as well as adults. And the harms of age verification are especially acute for LGBTQIA2S+ people and other marginalized communities. As the coalition letter from 90 civil rights and privacy organizations condemning ID-checking bills (citing effectiveness, censorship, and privacy concerns) puts it, "for vulnerable communities, a biometric scan or an ID upload can serve as a huge obstacle, especially for low-income, unhoused, and undocumented people."

While I appreciate the removal of an explicit requirement for age verification in the proposed substitute, the replacement language in Section 2(1)(b) and Section 3(2) ("the operator has reasonably determined that the user is not a minor") still in practice requires biometric scans and ID uploads. EFF’s June 2025 Letter of Concern to Congress, discussing similar language in a federal bill, goes into detail on this problem, and how “reasonable” language differs from the status quo of “absolute knowledge”. One key point:

“litigation-averse companies are likely to implement some kind of age-verification process to avoid running afoul of the law.”

Child safety is an incredibly important issue, and I applaud the legislature's and AG Brown’s desire to take action here. But age verification, online ID checks, and laws that you know are probably unconstitutional are not the way forward.

Please do not advance HB 1834 or any other bill with age verification or online ID checks. Instead, let's work together over the interim on approaches that really do help keep kids, teens, and adults safer online.

Jon Pincus, Bellevue

Updated written testimony

Chair Ormsby, Ranking Member Couture, and members of the committee,

I'm Jon Pincus of Bellevue, and I run the Nexus of Privacy newsletter. My position on HB 1834 remains CON. This is an updated version of my written testimony from January 26, with an improved discussion of the Australian Age Assurance Technology Trial.

Protecting kids’ and teens' safety online is incredibly important, but the age verification requirements of this bill put everybody’s privacy at risk -- children and teens as well as adults. And the harms of age verification are especially acute for LGBTQIA2S+ people and other marginalized communities. As the coalition letter from 90 civil rights and privacy organizations condemning ID-checking bills (citing effectiveness, censorship, and privacy concerns) puts it, "for vulnerable communities, a biometric scan or an ID upload can serve as a huge obstacle, especially for low-income, unhoused, and undocumented people."

While I appreciate the removal of an explicit requirement for age verification in the proposed substitute, the replacement language in Section 2(1)(b) and Section 3(2) ("the operator has reasonably determined that the user is not a minor") still requires biometric scans and ID uploads. EFF’s June 2025 Letter of Concern to Congress, discussing similar language in a federal bill, goes into detail on this problem, and how “reasonable” language differs from the status quo of “absolute knowledge”. One key point:

“litigation-averse companies are likely to implement some kind of age-verification process to avoid running afoul of the law.”

Note that since Section 8 of the proposed substitute only prohibits operators from using information they've gathered for age estimation in other ways, and doesn't prohibit them from transmitting or selling this data, they actually have a strong incentive to collect and retain additional biometric and ID information. Even if this problem (which as I pointed out in my testimony last year is in earlier versions of the bill) is addressed, however, the risk of over-collection remains.

In addition, the accuracy, equity, and accessibility problems of age estimation technologies mean that ID uploads will be required for many people. Age estimation is frequently wrong, all the more so for Black, Indigenous, Asian, and gender-nonconforming people. And when an adult is miscategorized as a minor, they’re likely to appeal – at which point they typically need to send in a picture of their government ID.

You've probably heard lobbyists and vendors talk about how accurate today's age systems are, but reality begs to differ. The results of the Australian Age Assurance Technology Trial (AATT) are revealing here.

Australia bans users under 16 from social media, similarly requiring operators to take "reasonable steps". The Age Assurance Technology Trial looked at over 20 different age estimation products, and even in controlled conditions, the results are dismal. The table on Page 51 notes an astonishing 73.26% “false positive rate” for teens one year younger than the threshold, and still-atrocious 59.37% and 38.31% rates for teens who are two and three years younger than the threshold, respectively. And the fine print notes that this excludes a couple of vendors whose results were so bad they’d have made the numbers even worse!

As a result, the AATT report recommends setting “buffer zones” within a few years of the age threshold, where operators should use “fallback methods” – in other words, uploading an image of a government ID. The AATT report also notes that many alternate methods of age verification have accessibility challenges, so disabled users also need to rely on “fallback methods.”

And even outside the “buffer zones”, there are also significant accuracy problems for older users. The AATT report notes that "Most systems achieve at least 92% True Positive Rate (TPR) at 18+ threshold when estimated age ≥ 19." In other words, even these systems can still be wrong for as many as 8% of people who are older than 19.*

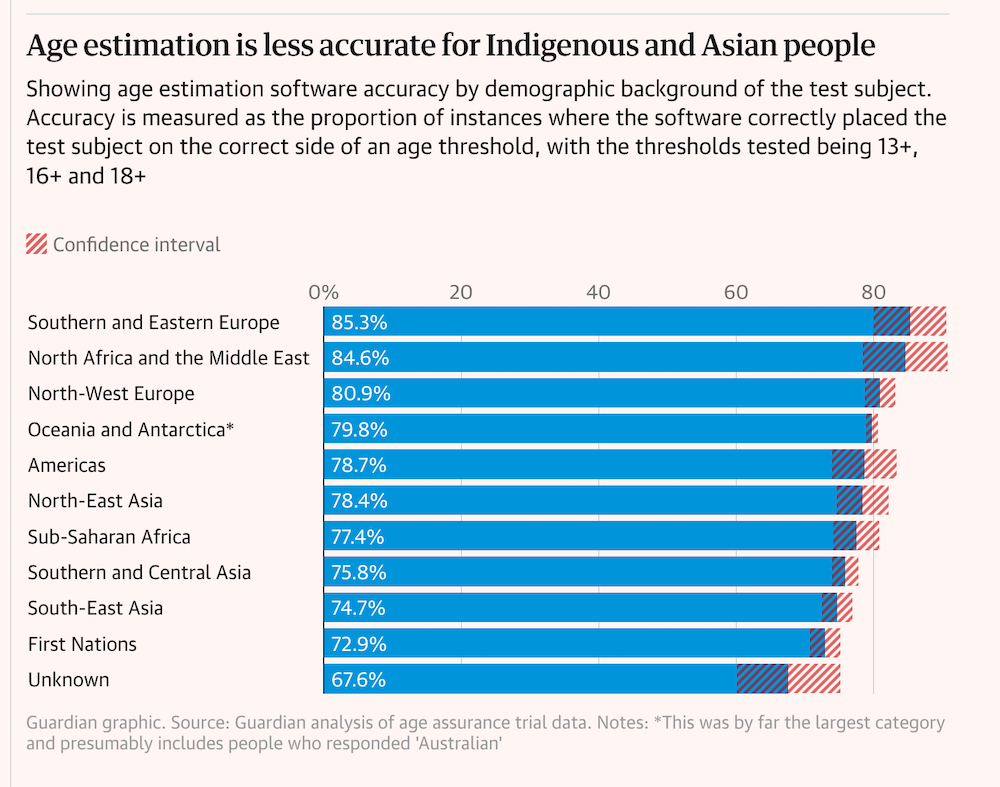

And the AATT report also notes that error rates are higher for "users with darker skin tones". The Guardian's "Social media ban trial data reveals racial bias in age checking software", based on additional analysis of the underlying data, has a helpful chart here (on the next page). The report doesn’t include any discussion of biases against trans, non-binary, or gender-nonconforming people or intersectional analyses of Black, Asian, and Indigenous women; error rates are typically higher in all these scenarios.

So a lot of people are going to be miscategorized. And many of them will appeal – which typically requires sending in a picture of their government ID. "Discord Breach Sparks Age Verification & Third-Party Privacy Concerns" describes a real-world example of this dynamic in the US:

"the incident was traced to an external customer service vendor who had access to Discord’s support and trust teams. This vendor was responsible for handling user inquiries that included age verification appeals, giving attackers a pathway to sensitive data."

As a result, hackers got access to images of 70,000 government IDs. In the hearing on HB 2112, the lobbyist from the Age Verification Providers Association laughably attempted to dismiss this by claiming that Discord was doing it wrong. If they had only purchased his clients’ software it wouldn't be a problem! But as the frequent discussions of "fallback methods" in the Australian Age Estimation report clearly show, reality once again begs to differ: many appeals are required for all of today's offerings, and it usually comes down to sharing an image of your government ID.

A couple more quick notes on accuracy and its impact on privacy. The report does discuss accessibility challenges with many of these tools, highlighting that for users with accessibility needs "Fallback paths (e.g. escalation to ID or retry) were used to mitigate frustration in borderline cases – an important usability safeguard." That's good from a usability standpoint ... but bad from a privacy perspective, since it means that disabled people are more likely to have to upload their ID.

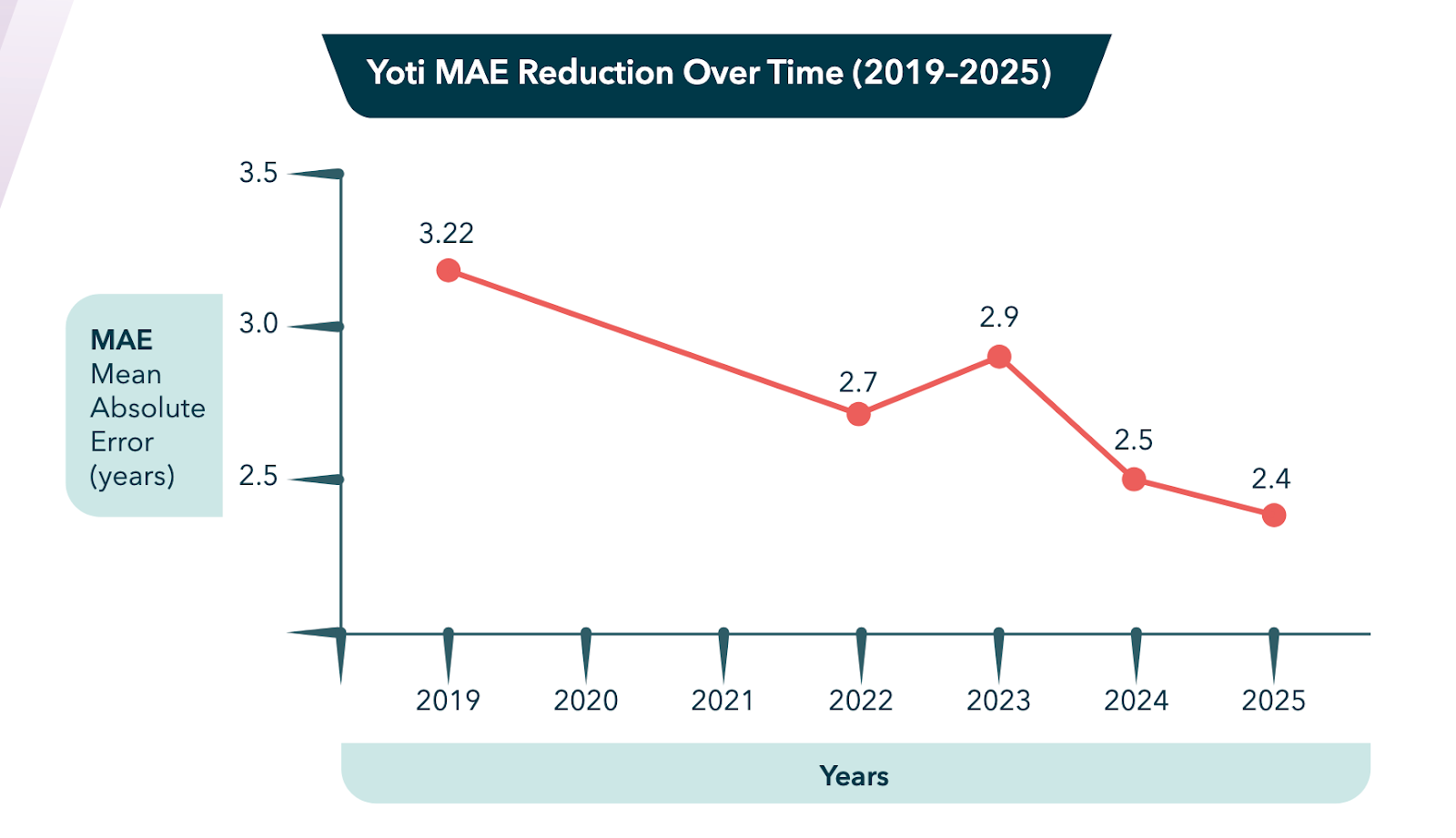

As the AATT report discusses, the technology is improving. It's a point also made by the Age Verification Providers Association lobbyist in the HB 2112 hearing, so presumably you've heard that as well. Figure D.7.1 (p.34) of the AATT report illustrates this improvement for Yoti, one of the vendors in the test, on "mean absolute errors" (MAE), the average number of years the software they tested is wrong by.

A few things to note in this example. First of all, despite the improvements, it's still bad; as of 2025, this system’s estimate was incorrect by an average of 2.4 years (and remember it’s worse for 16-to-20-year-olds and people with “darker skin tones”). And despite the clever presentation on the chart, improvement has actually been relatively slow, with MAE decreasing by an average of only 0.14 years per year. And what's with that blip where it got worse in 2023? Clearly this vendor's quality control wasn't good enough to prevent them from shipping a major regression. As age estimation gets more widely adopted, what are the impacts of these kinds of errors?

Even though it sounds similar to real-world ID checks, online age verification is really very different, as the Electronic Frontier Foundation discusses in "Why Isn’t Online Age Verification Just Like Showing Your ID In Person?"

“In offline, in-person scenarios, a customer typically provides their physical ID to a cashier or clerk directly. Oftentimes, customers need only flash their ID for a quick visual check, and no personal information is uploaded to the internet, transferred to a third-party vendor, or stored. Online age-gating, on the other hand, forces users to upload—not just momentarily display—sensitive personal information to a website in order to gain access to age-restricted content.”

So please do not advance HB 1834. And please do not advance any bill with age verification requirements. Instead, let's work together over the interim on approaches that really do help keep kids, teens, and adults safer online.

Jon Pincus, Bellevue

* The AATT report also has interesting data on "mean absolute errors" (MAE), the average number of years the software they tested is wrong by. The best-performing systems had a MAE of 1.3-1.5 years, and the report notes that systems in general were less accurate on 16-20-year-olds. And these numbers are in controlled conditions; the real world is a lot messier, so accuracy is probably even worse.